# http://firecrawl.dev llms-full.txt

## Web Data Extraction

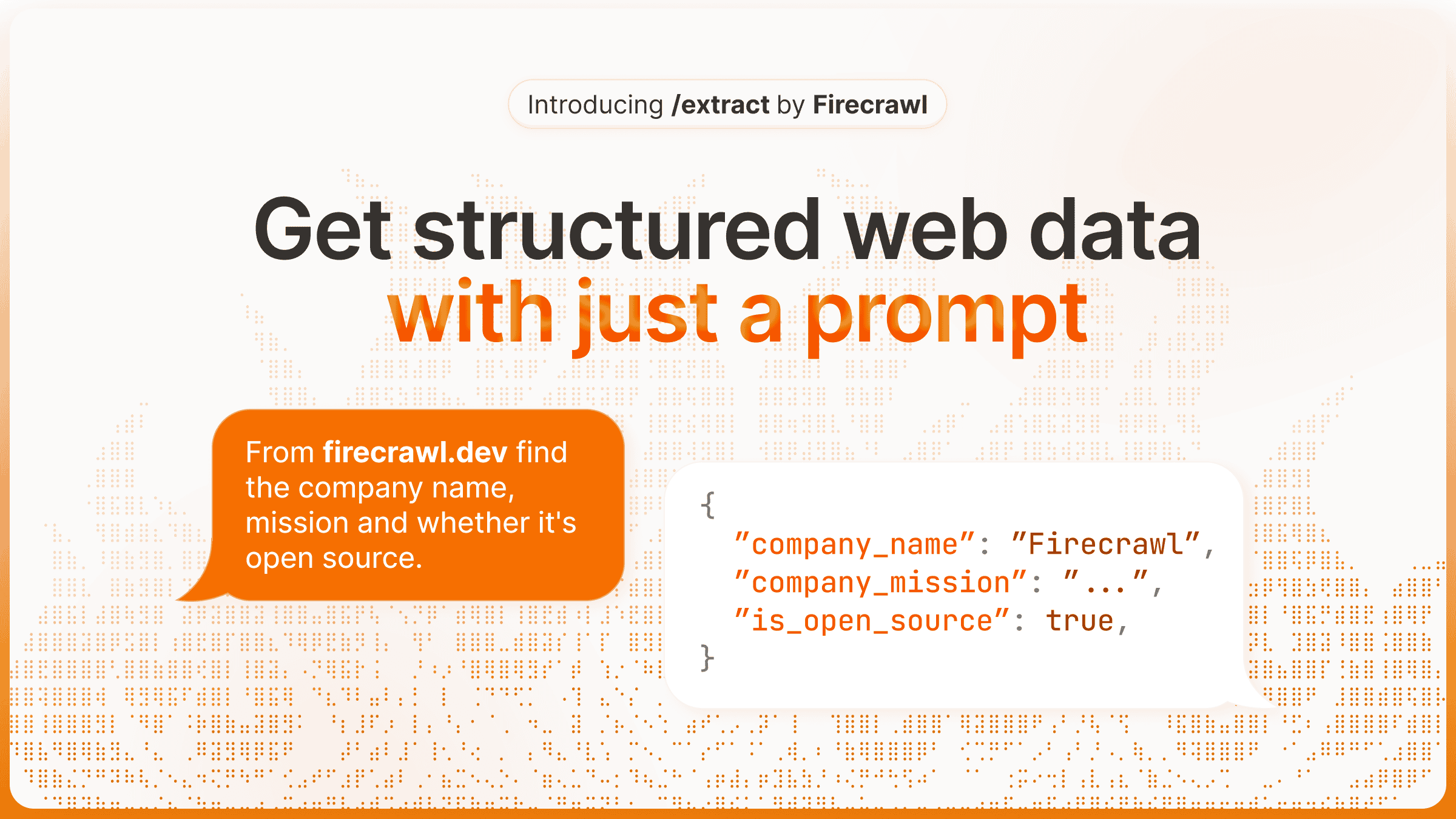

Introducing /extract - Get web data with a prompt [Try now](https://www.firecrawl.dev/extract)

[💥 Get 2 months free with yearly plan](https://www.firecrawl.dev/pricing)

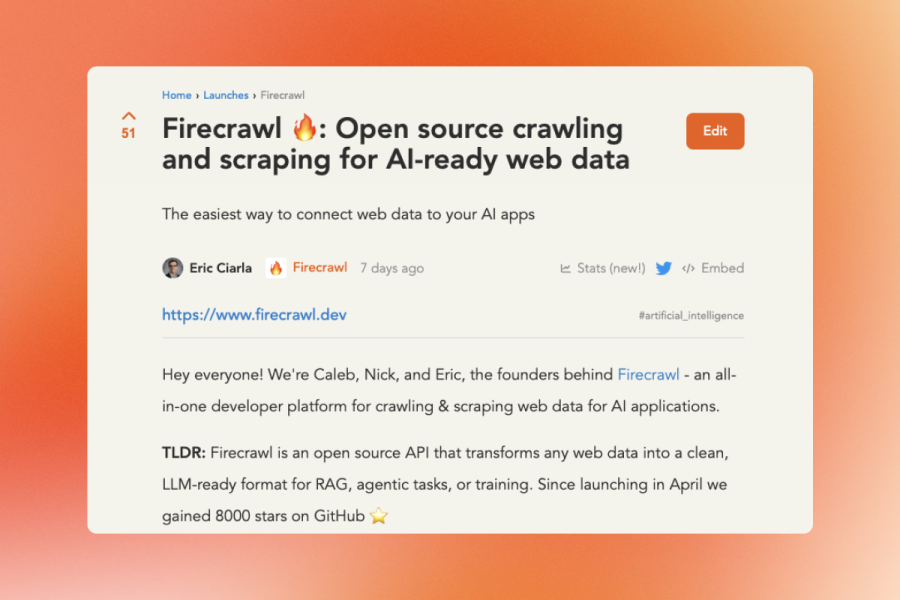

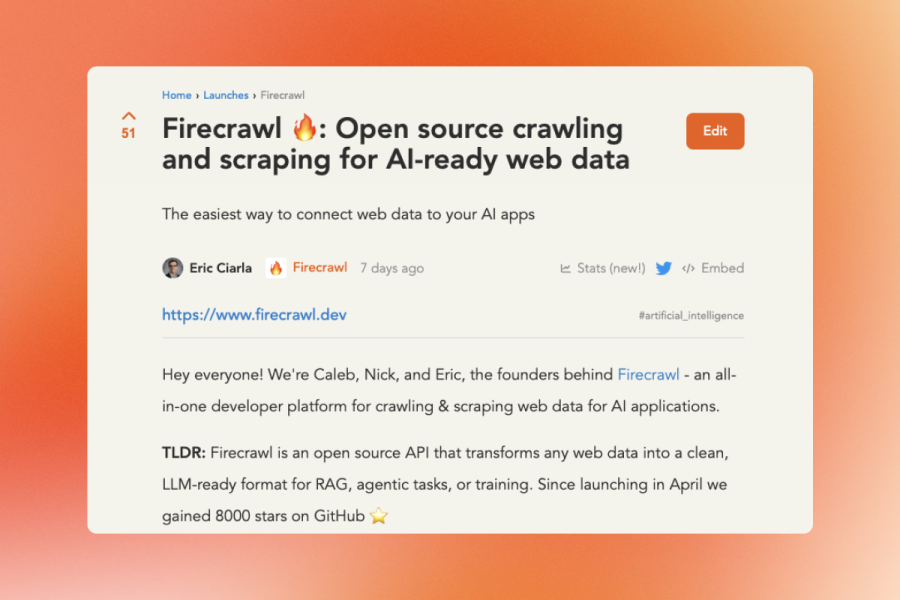

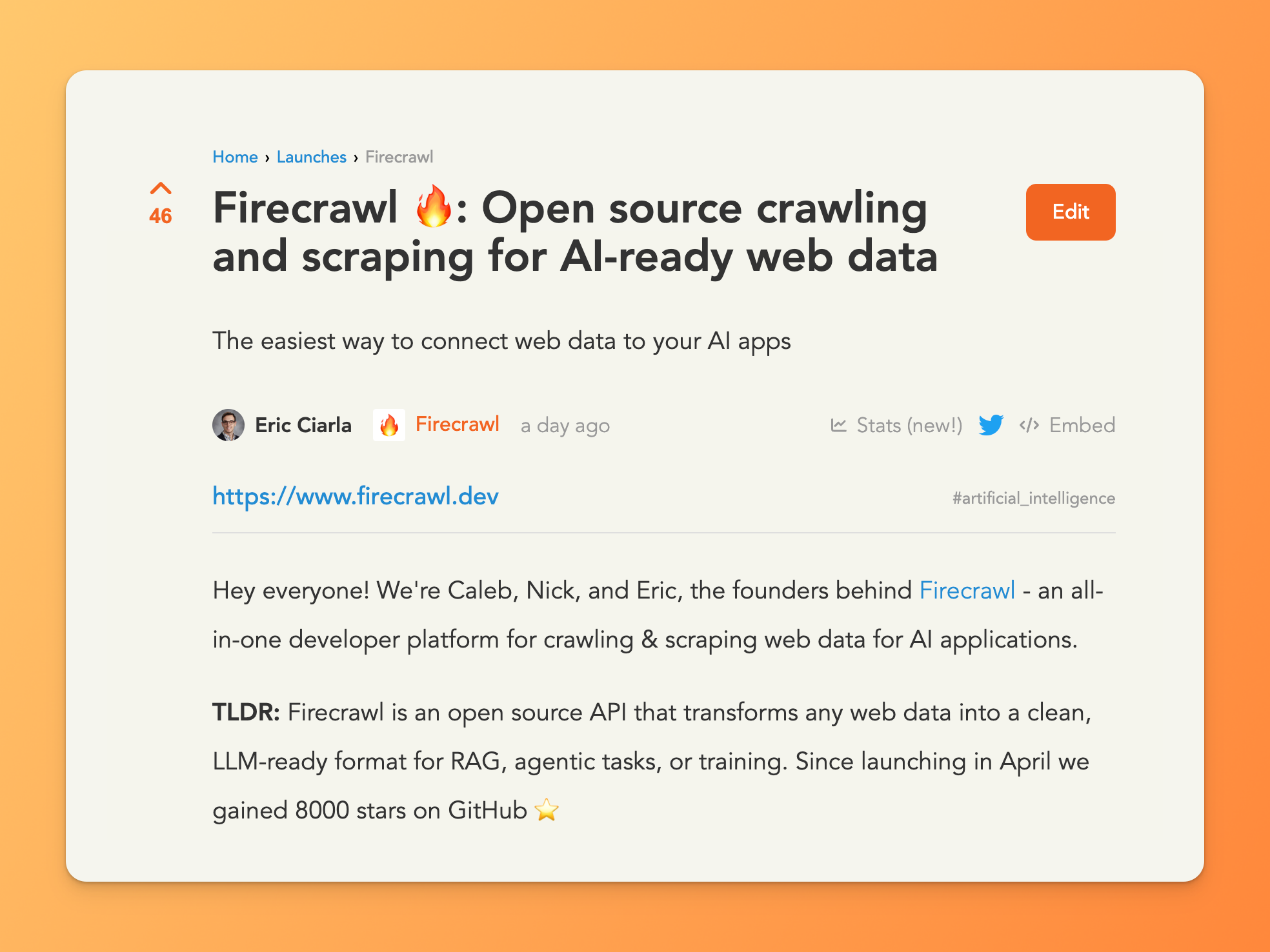

# Turn websites into LLM-ready data

Power your AI apps with clean data crawled from any website. [It's also open source.](https://github.com/mendableai/firecrawl)

https://example.com

Start for free (500 credits)Start for free

200 Response

\[\

\

{\

\

"url": "https://example.com",\

\

"markdown": "# Getting Started...",\

\

"json": {\

"title": "Guide",\

"docs": ...\

},\

\

"screenshot": "https://example.com/hero.png",\

\

}\

\

...\

\

\]

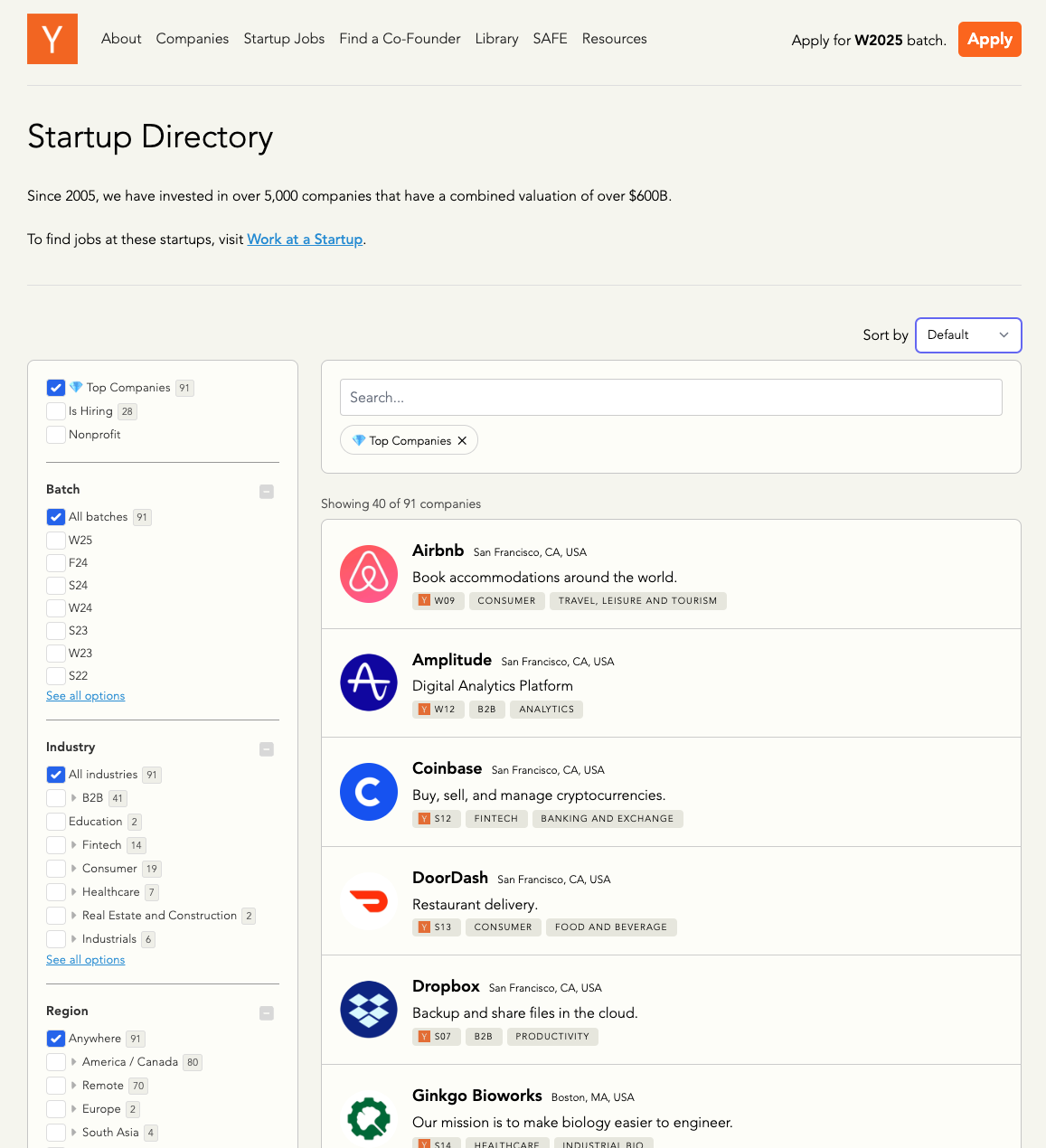

## Trusted by Top Companies

[](https://www.zapier.com/)

[](https://gamma.app/)

[](https://www.nvidia.com/)

[](https://phmg.com/)

[](https://www.stack-ai.com/)

[](https://www.teller.io/)

[](https://www.carrefour.com/)

[](https://www.vendr.com/)

[](https://www.open.gov.sg/)

[](https://www.zapier.com/)

[](https://gamma.app/)

[](https://www.nvidia.com/)

[](https://phmg.com/)

[](https://www.stack-ai.com/)

[](https://www.teller.io/)

[](https://www.carrefour.com/)

[](https://www.vendr.com/)

[](https://www.open.gov.sg/)

[](https://www.cyberagent.co.jp/)

[](https://continue.dev/)

[](https://www.bain.com/)

[](https://jasper.ai/)

[](https://www.palladiumdigital.com/)

[](https://www.checkr.com/)

[](https://www.jetbrains.com/)

[](https://www.you.com/)

[](https://www.cyberagent.co.jp/)

[](https://continue.dev/)

[](https://www.bain.com/)

[](https://jasper.ai/)

[](https://www.palladiumdigital.com/)

[](https://www.checkr.com/)

[](https://www.jetbrains.com/)

[](https://www.you.com/)

Developer first

## Start scraping this morning

Enhance your apps with industry leading web scraping and crawling capabilities

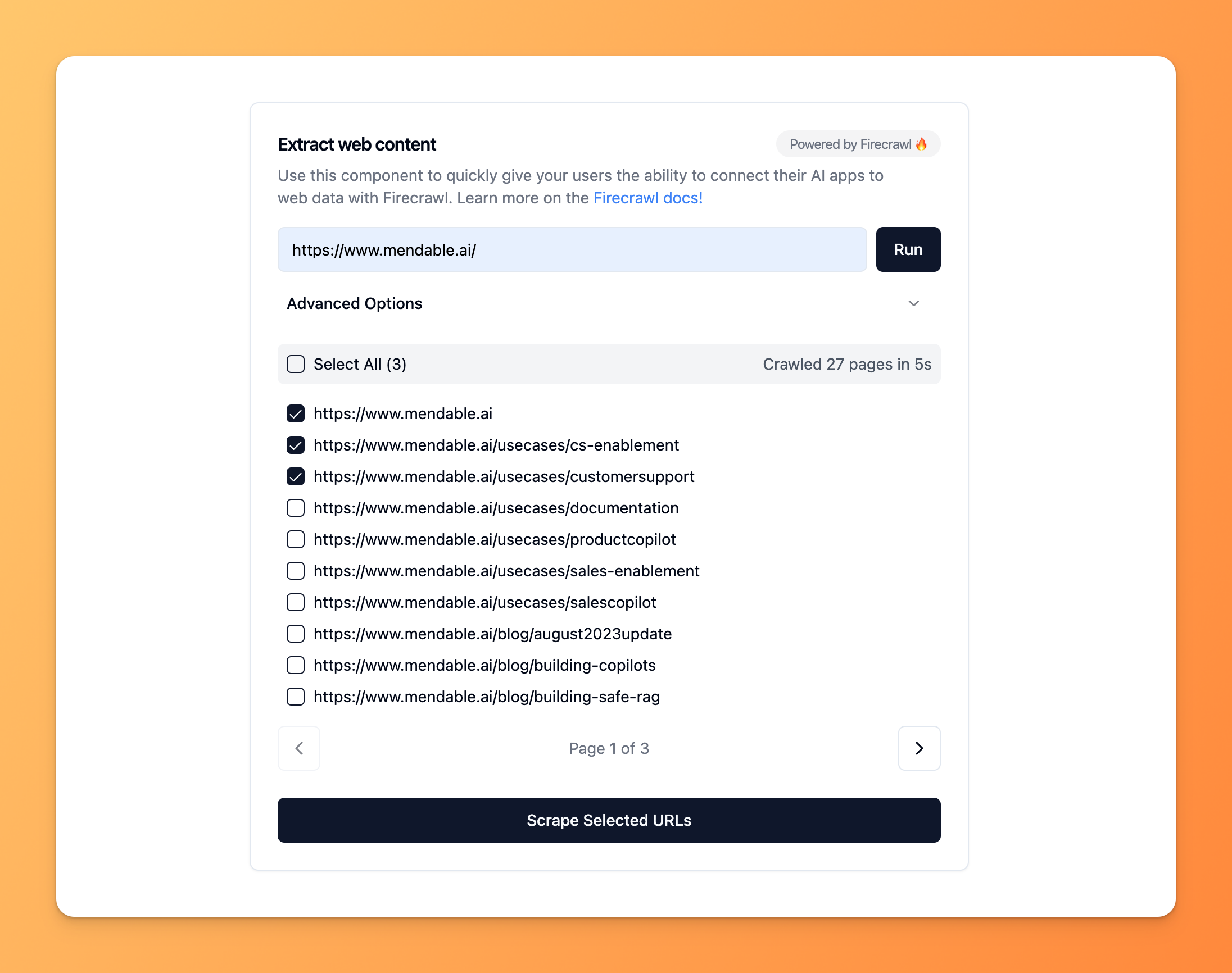

#### Scrape

Get llm-ready data from websites

#### Crawl

Crawl all the pages on a website

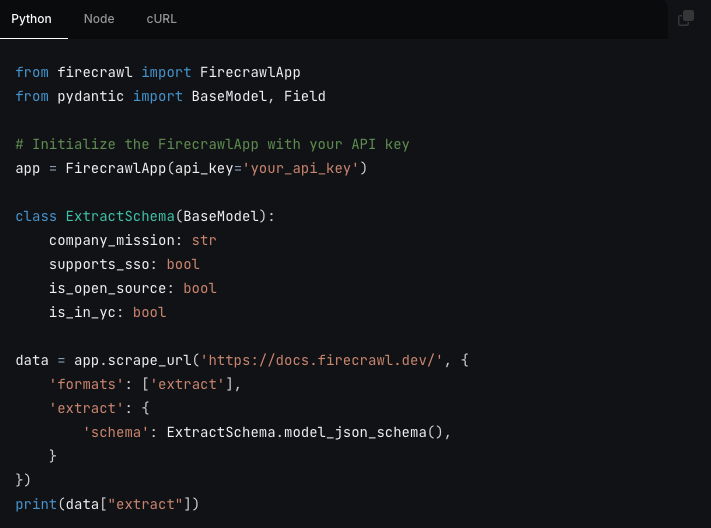

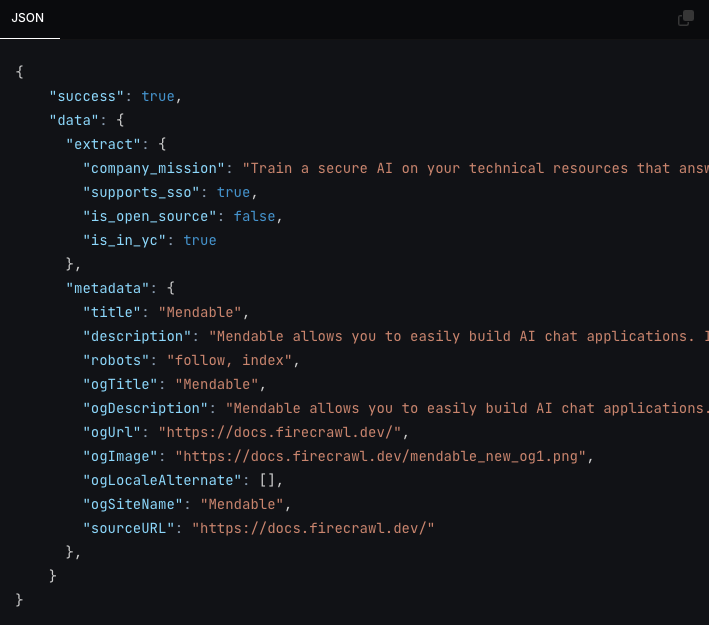

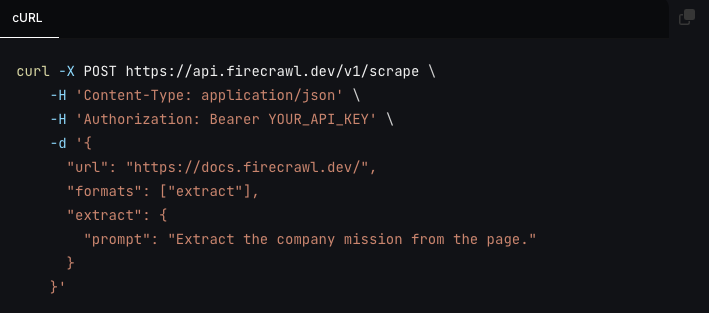

#### Extract

New

Extract structured data from websites

1

2

3

4

5

6

7

8

```

// npm install @mendable/firecrawl-js

import FirecrawlApp from '@mendable/firecrawl-js';

const app = new FirecrawlApp({ apiKey: "fc-YOUR_API_KEY" });

// Scrape a website:

await app.scrapeUrl('firecrawl.dev');

```

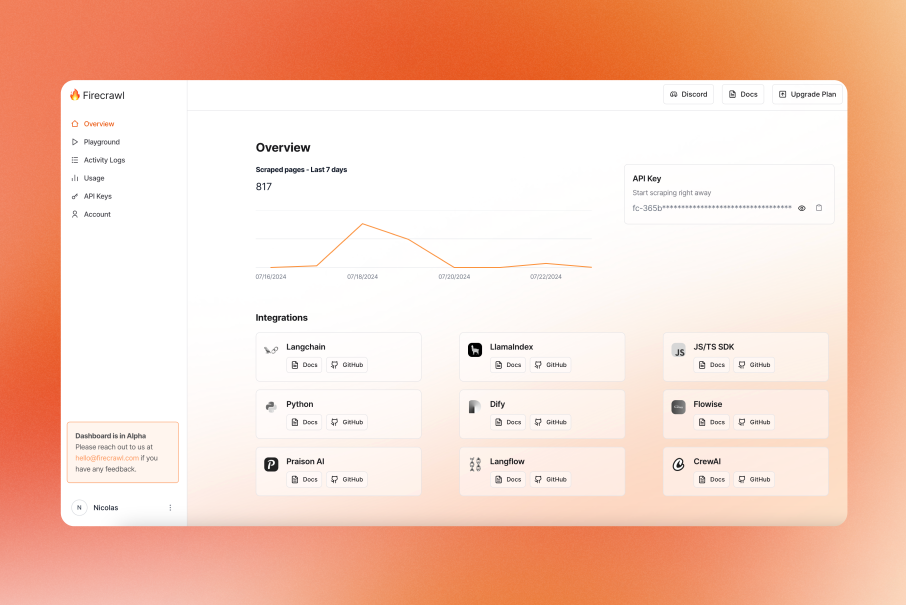

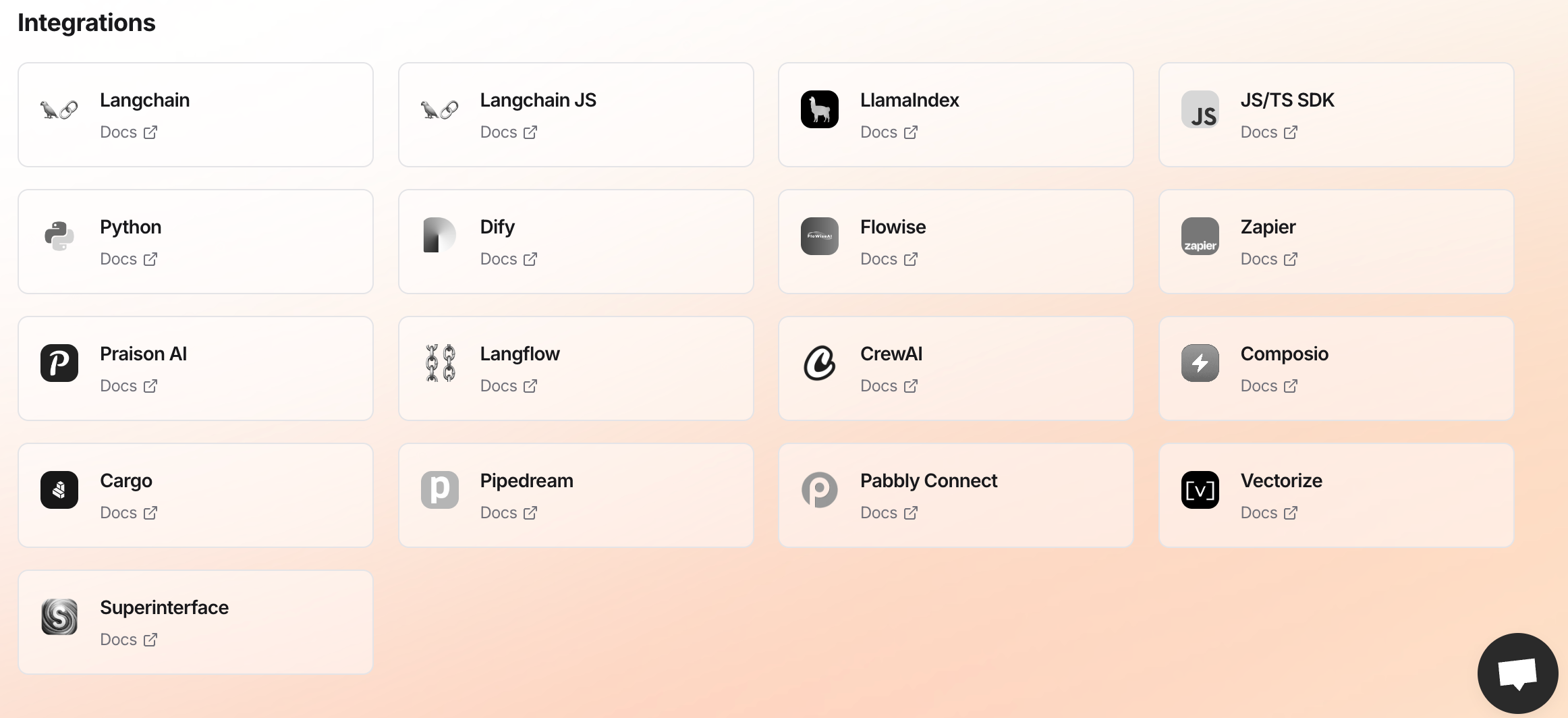

#### Use well-known tools

Already fully integrated with the greatest existing tools and workflows.

[](https://docs.llamaindex.ai/en/stable/examples/data_connectors/WebPageDemo/#using-firecrawl-reader/)[](https://python.langchain.com/v0.2/docs/integrations/document_loaders/firecrawl/)[](https://dify.ai/blog/dify-ai-blog-integrated-with-firecrawl/)[](https://www.langflow.org/)[](https://flowiseai.com/)[](https://crewai.com/)[](https://docs.camel-ai.org/cookbooks/ingest_data_from_websites_with_Firecrawl.html)

#### Start for free, scale easily

Kick off your journey for free and scale seamlessly as your project expands.

[Try it out](https://www.firecrawl.dev/signin/signup)

#### Open-source

Developed transparently and collaboratively. Join our community of contributors.

[Check out our repo](https://github.com/mendableai/firecrawl)

Zero Configuration

## We handle the hard stuff

Rotating proxies, orchestration, rate limits, js-blocked content and more

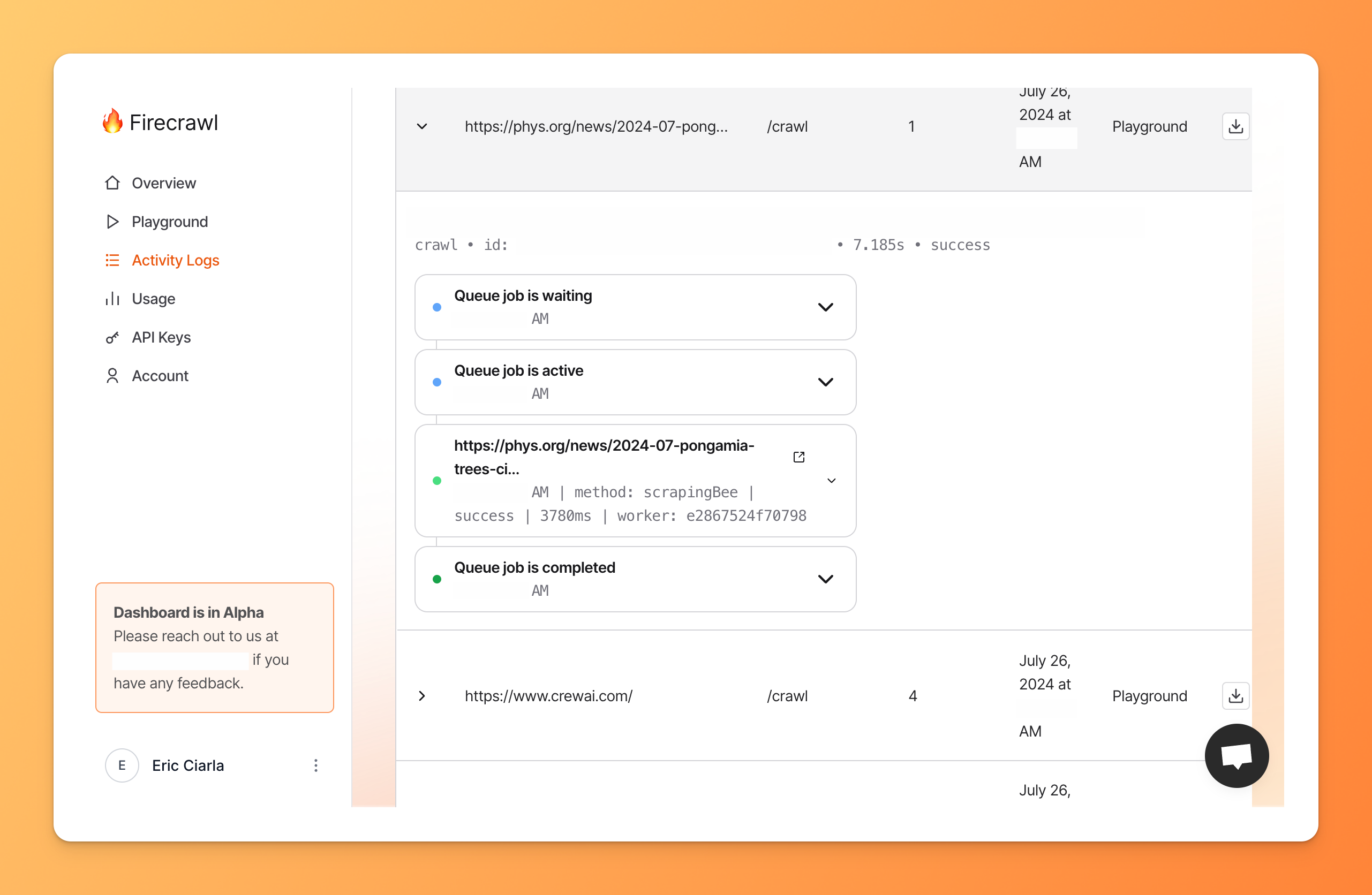

#### Crawling

Gather clean data from all accessible subpages, even without a sitemap.

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

Firecrawl

Extract web data for LLMs

Installation

npm install @mendable/firecrawl-js

# Firecrawl

Extract web data for LLMs

## Installation

\`\`\`bash

npm install @mendable/firecrawl-js

\`\`\`

#### Dynamic Content

Firecrawl handles JavaScript, SPAs, and dynamic content loading with minimal configuration.

#### Smart Wait

Firecrawl intelligently wait for content to load, making scraping faster and more reliable.

#### Reliability First

Reliability is our core focus. Firecrawl is designed to scale with your needs.

#### Actions

Click, scroll, write, wait, press and more before extracting content.

#### Media Parsing

Firecrawl can parse and output content from web hosted pdfs, docx, and more.

Our Wall of Love

## Don't take our word for it

### Morgan Linton

[@morganlinton](https://x.com/morganlinton/status/1839454165703204955)

If you're coding with AI, and haven't discovered @firecrawl\_dev yet, prepare to have your mind blown 🤯

### Chris DeWeese

[@ChrisDevApps](https://x.com/ChrisDevApps/status/1853587120406876601)

Started using @firecrawl\_dev for a project, I wish I used this sooner.

### Bardia Pourvakil

[@thepericulum](https://twitter.com/thepericulum/status/1781397799487078874)

The Firecrawl team ships. I wanted types for their node SDK, and less than an hour later, I got them.

### Tom Reppelin

[@TomReppelin](https://x.com/TomReppelin/status/1844382491014201613)

I found gold today. Thank you @firecrawl\_dev

### latentsauce 🧘🏽

[@latentsauce](https://twitter.com/latentsauce/status/1781738253927735331)

Firecrawl simplifies data preparation significantly, exactly what I was hoping for. Thank you Firecrawl ❤️❤️❤️

### Morgan Linton

[@morganlinton](https://x.com/morganlinton/status/1839454165703204955)

If you're coding with AI, and haven't discovered @firecrawl\_dev yet, prepare to have your mind blown 🤯

### Chris DeWeese

[@ChrisDevApps](https://x.com/ChrisDevApps/status/1853587120406876601)

Started using @firecrawl\_dev for a project, I wish I used this sooner.

### Bardia Pourvakil

[@thepericulum](https://twitter.com/thepericulum/status/1781397799487078874)

The Firecrawl team ships. I wanted types for their node SDK, and less than an hour later, I got them.

### Tom Reppelin

[@TomReppelin](https://x.com/TomReppelin/status/1844382491014201613)

I found gold today. Thank you @firecrawl\_dev

### latentsauce 🧘🏽

[@latentsauce](https://twitter.com/latentsauce/status/1781738253927735331)

Firecrawl simplifies data preparation significantly, exactly what I was hoping for. Thank you Firecrawl ❤️❤️❤️

### Michael Ning

Firecrawl is impressive, saving us 2/3 the tokens and allowing gpt3.5turbo use over gpt4. Major savings in time and money.

### Alex Reibman 🖇️

[@AlexReibman](https://twitter.com/AlexReibman/status/1780299595484131836)

Moved our internal agent's web scraping tool from Apify to Firecrawl because it benchmarked 50x faster with AgentOps.

### Alex Fazio

[@alxfazio](https://x.com/alxfazio/status/1826731977283641615)

Semantic scraping with Firecrawl is 🔥!

### Matt Busigin

[@mbusigin](https://x.com/mbusigin/status/1836065372010656069)

Firecrawl is dope. Congrats guys 👏

### Michael Ning

Firecrawl is impressive, saving us 2/3 the tokens and allowing gpt3.5turbo use over gpt4. Major savings in time and money.

### Alex Reibman 🖇️

[@AlexReibman](https://twitter.com/AlexReibman/status/1780299595484131836)

Moved our internal agent's web scraping tool from Apify to Firecrawl because it benchmarked 50x faster with AgentOps.

### Alex Fazio

[@alxfazio](https://x.com/alxfazio/status/1826731977283641615)

Semantic scraping with Firecrawl is 🔥!

### Matt Busigin

[@mbusigin](https://x.com/mbusigin/status/1836065372010656069)

Firecrawl is dope. Congrats guys 👏

Transparent

## Flexible Pricing

Start for free, then scale as you grow

Standard [Extract](https://www.firecrawl.dev/extract#pricing)

Monthly

Yearly

20% off\- 2 months free

## Free Plan

500 credits

$0 one-time

No credit card requiredGet Started

- Scrape 500 pages

- 10 /scrape per min

- 1 /crawl per min

## Hobby

3,000 credits per month

$16/month

$228/yr$190/yr (Billed annually)

Subscribe$190/yr

- Scrape 3,000 pages\*

- 20 /scrape per min

- 3 /crawl per min

- 1 seat

## StandardMost Popular

100,000 credits per month

$83/month

$1188/yr$990/yr (Billed annually)

Subscribe$990/yr

- Scrape 100,000 pages\*

- 100 /scrape per min

- 10 /crawl per min

- 3 seats

- Standard Support

## Growth

500,000 credits per month

$333/month

$4788/yr$3990/yr (Billed annually)

Subscribe$3990/yr

- Scrape 500,000 pages\*

- 1000 /scrape per min

- 50 /crawl per min

- 5 seats

- Priority Support

## Add-ons

### Auto Recharge Credits

Automatically recharge your credits when you run low

$11/mo for 1000 credits

Enable Auto Recharge

Subscribe to a plan to enable auto recharge

### Credit Pack

Purchase a pack of additional monthly credits

$9/mo for 1000 credits

Purchase Credit Pack

Subscribe to a plan to purchase credit packs

## Enterprise Plan

Unlimited credits. Custom RPMs.

Talk to us

- Bulk discounts

- Top priority support

- Custom concurrency limits

- Improved Stealth Proxies

- SLAs

- Advanced Security & Controls

\\* a /scrape refers to the [scrape](https://docs.firecrawl.dev/api-reference/endpoint/scrape) API endpoint. Structured extraction costs vary. See [credits table](https://www.firecrawl.dev/pricing#credits).

\\* a /crawl refers to the [crawl](https://docs.firecrawl.dev/api-reference/endpoint/crawl) API endpoint.

## API Credits

Credits are consumed for each API request, varying by endpoint and feature.

| Features | Credits |

| --- | --- |

| Scrape(/scrape) | 1 / page |

| with JSON format | 5 / page |

| Crawl(/crawl) | 1 / page |

| Map (/map) | 1 / call |

| Search(/search) | 1 / page |

| Extract (/extract) | New [Separate Pricing](https://www.firecrawl.dev/extract#pricing) |

[🔥](https://www.firecrawl.dev/)

## Ready to _Build?_

Start scraping web data for your AI apps today.

No credit card needed.

Get Started

FAQ

## Frequently Asked

Everything you need to know about Firecrawl

### General

### What is Firecrawl?

### What sites work?

### Who can benefit from using Firecrawl?

### Is Firecrawl open-source?

### What is the difference between Firecrawl and other web scrapers?

### What is the difference between the open-source version and the hosted version?

### Scraping & Crawling

### How does Firecrawl handle dynamic content on websites?

### Why is it not crawling all the pages?

### Can Firecrawl crawl websites without a sitemap?

### What formats can Firecrawl convert web data into?

### How does Firecrawl ensure the cleanliness of the data?

### Is Firecrawl suitable for large-scale data scraping projects?

### Does it respect robots.txt?

### What measures does Firecrawl take to handle web scraping challenges like rate limits and caching?

### Does Firecrawl handle captcha or authentication?

### API Related

### Where can I find my API key?

### Billing

### Is Firecrawl free?

### Is there a pay per use plan instead of monthly?

### How many credits do scraping, crawling, and extraction cost?

### Do you charge for failed requests (scrape, crawl, extract)?

### What payment methods do you accept?

## Flexible Web Scraping Pricing

Introducing /extract - Get web data with a prompt [Try now](https://www.firecrawl.dev/extract)

Transparent

## Flexible Pricing

Start for free, then scale as you grow

Standard [Extract](https://www.firecrawl.dev/extract#pricing)

Monthly

Yearly

20% off\- 2 months free

## Free Plan

500 credits

$0 one-time

No credit card requiredGet Started

- Scrape 500 pages

- 10 /scrape per min

- 1 /crawl per min

## Hobby

3,000 creditsper month

$16/month

$228/yr$190/yr(Billed annually)

Subscribe$190/yr

- Scrape 3,000 pages\*

- 20 /scrape per min

- 3 /crawl per min

- 1 seat

## StandardMost Popular

100,000 creditsper month

$83/month

$1188/yr$990/yr(Billed annually)

Subscribe$990/yr

- Scrape 100,000 pages\*

- 100 /scrape per min

- 10 /crawl per min

- 3 seats

- Standard Support

## Growth

500,000 creditsper month

$333/month

$4788/yr$3990/yr(Billed annually)

Subscribe$3990/yr

- Scrape 500,000 pages\*

- 1000 /scrape per min

- 50 /crawl per min

- 5 seats

- Priority Support

## Add-ons

### Auto Recharge Credits

Automatically recharge your credits when you run low

$11/mo for 1000 credits

Enable Auto Recharge

Subscribe to a plan to enable auto recharge

### Credit Pack

Purchase a pack of additional monthly credits

$9/mo for 1000 credits

Purchase Credit Pack

Subscribe to a plan to purchase credit packs

## Enterprise Plan

Unlimited credits. Custom RPMs.

Talk to us

- Bulk discounts

- Top priority support

- Custom concurrency limits

- Improved Stealth Proxies

- SLAs

- Advanced Security & Controls

\\* a /scrape refers to the [scrape](https://docs.firecrawl.dev/api-reference/endpoint/scrape) API endpoint. Structured extraction costs vary. See [credits table](https://www.firecrawl.dev/pricing#credits).

\\* a /crawl refers to the [crawl](https://docs.firecrawl.dev/api-reference/endpoint/crawl) API endpoint.

## API Credits

Credits are consumed for each API request, varying by endpoint and feature.

| Features | Credits |

| --- | --- |

| Scrape(/scrape) | 1 / page |

| with JSON format | 5 / page |

| Crawl(/crawl) | 1 / page |

| Map(/map) | 1 / call |

| Search(/search) | 1 / page |

| Extract(/extract) | New [Separate Pricing](https://www.firecrawl.dev/extract#pricing) |

Our Wall of Love

## Don't take our word for it

### Morgan Linton

[@morganlinton](https://x.com/morganlinton/status/1839454165703204955)

If you're coding with AI, and haven't discovered @firecrawl\_dev yet, prepare to have your mind blown 🤯

### Chris DeWeese

[@ChrisDevApps](https://x.com/ChrisDevApps/status/1853587120406876601)

Started using @firecrawl\_dev for a project, I wish I used this sooner.

### Bardia Pourvakil

[@thepericulum](https://twitter.com/thepericulum/status/1781397799487078874)

The Firecrawl team ships. I wanted types for their node SDK, and less than an hour later, I got them.

### Tom Reppelin

[@TomReppelin](https://x.com/TomReppelin/status/1844382491014201613)

I found gold today. Thank you @firecrawl\_dev

### latentsauce 🧘🏽

[@latentsauce](https://twitter.com/latentsauce/status/1781738253927735331)

Firecrawl simplifies data preparation significantly, exactly what I was hoping for. Thank you Firecrawl ❤️❤️❤️

### Morgan Linton

[@morganlinton](https://x.com/morganlinton/status/1839454165703204955)

If you're coding with AI, and haven't discovered @firecrawl\_dev yet, prepare to have your mind blown 🤯

### Chris DeWeese

[@ChrisDevApps](https://x.com/ChrisDevApps/status/1853587120406876601)

Started using @firecrawl\_dev for a project, I wish I used this sooner.

### Bardia Pourvakil

[@thepericulum](https://twitter.com/thepericulum/status/1781397799487078874)

The Firecrawl team ships. I wanted types for their node SDK, and less than an hour later, I got them.

### Tom Reppelin

[@TomReppelin](https://x.com/TomReppelin/status/1844382491014201613)

I found gold today. Thank you @firecrawl\_dev

### latentsauce 🧘🏽

[@latentsauce](https://twitter.com/latentsauce/status/1781738253927735331)

Firecrawl simplifies data preparation significantly, exactly what I was hoping for. Thank you Firecrawl ❤️❤️❤️

### Michael Ning

Firecrawl is impressive, saving us 2/3 the tokens and allowing gpt3.5turbo use over gpt4. Major savings in time and money.

### Alex Reibman 🖇️

[@AlexReibman](https://twitter.com/AlexReibman/status/1780299595484131836)

Moved our internal agent's web scraping tool from Apify to Firecrawl because it benchmarked 50x faster with AgentOps.

### Alex Fazio

[@alxfazio](https://x.com/alxfazio/status/1826731977283641615)

Semantic scraping with Firecrawl is 🔥!

### Matt Busigin

[@mbusigin](https://x.com/mbusigin/status/1836065372010656069)

Firecrawl is dope. Congrats guys 👏

### Michael Ning

Firecrawl is impressive, saving us 2/3 the tokens and allowing gpt3.5turbo use over gpt4. Major savings in time and money.

### Alex Reibman 🖇️

[@AlexReibman](https://twitter.com/AlexReibman/status/1780299595484131836)

Moved our internal agent's web scraping tool from Apify to Firecrawl because it benchmarked 50x faster with AgentOps.

### Alex Fazio

[@alxfazio](https://x.com/alxfazio/status/1826731977283641615)

Semantic scraping with Firecrawl is 🔥!

### Matt Busigin

[@mbusigin](https://x.com/mbusigin/status/1836065372010656069)

Firecrawl is dope. Congrats guys 👏

## Web Scraping and AI

Introducing /extract - Get web data with a prompt [Try now](https://www.firecrawl.dev/extract)

[\\

\\

Feb 26, 2025\\

\\

**LLM API Engine: How to Build a Dynamic API Generation Engine Powered by Firecrawl** \\

\\

Learn how to build a dynamic API generation engine that transforms unstructured web data into clean, structured APIs using natural language descriptions instead of code, powered by Firecrawl's intelligent web scraping and OpenAI.\\

\\

By Bex Tuychiev](https://www.firecrawl.dev/blog/llm-api-engine-dynamic-api-generation-explainer)

## Explore Articles

[All](https://www.firecrawl.dev/blog) [Product Updates](https://www.firecrawl.dev/blog/category/product) [Tutorials](https://www.firecrawl.dev/blog/category/tutorials) [Customer Stories](https://www.firecrawl.dev/blog/category/customer-stories) [Tips & Resources](https://www.firecrawl.dev/blog/category/tips-and-resources)

[\\

**Building a Clone of OpenAI's Deep Research with TypeScript and Firecrawl** \\

Learn how to build an open-source alternative to OpenAI's Deep Research using TypeScript, Firecrawl, and LLMs. This tutorial covers web scraping, AI processing, and building a performant research platform.\\

\\

By Bex TuychievFeb 24, 2025](https://www.firecrawl.dev/blog/open-deep-research-explainer)

[\\

**How to Create Custom Instruction Datasets for LLM Fine-tuning** \\

Learn how to build high-quality instruction datasets for fine-tuning large language models (LLMs). This guide covers when to create custom datasets, best practices for data collection and curation, and a practical example of building a code documentation dataset.\\

\\

By Bex TuychievFeb 18, 2025](https://www.firecrawl.dev/blog/custom-instruction-datasets-llm-fine-tuning)

[\\

**Fine-tuning DeepSeek R1 on a Custom Instructions Dataset** \\

A comprehensive guide on fine-tuning DeepSeek R1 language models using custom instruction datasets, covering model selection, dataset preparation, and practical implementation steps.\\

\\

By Bex TuychievFeb 18, 2025](https://www.firecrawl.dev/blog/fine-tuning-deepseek)

[\\

**How Replit Uses Firecrawl to Power Replit Agent** \\

Discover how Replit leverages Firecrawl to keep Replit Agent up to date with the latest API documentation and web content.\\

\\

By Zhen LiFeb 17, 2025](https://www.firecrawl.dev/blog/how-replit-uses-firecrawl-to-power-ai-agents)

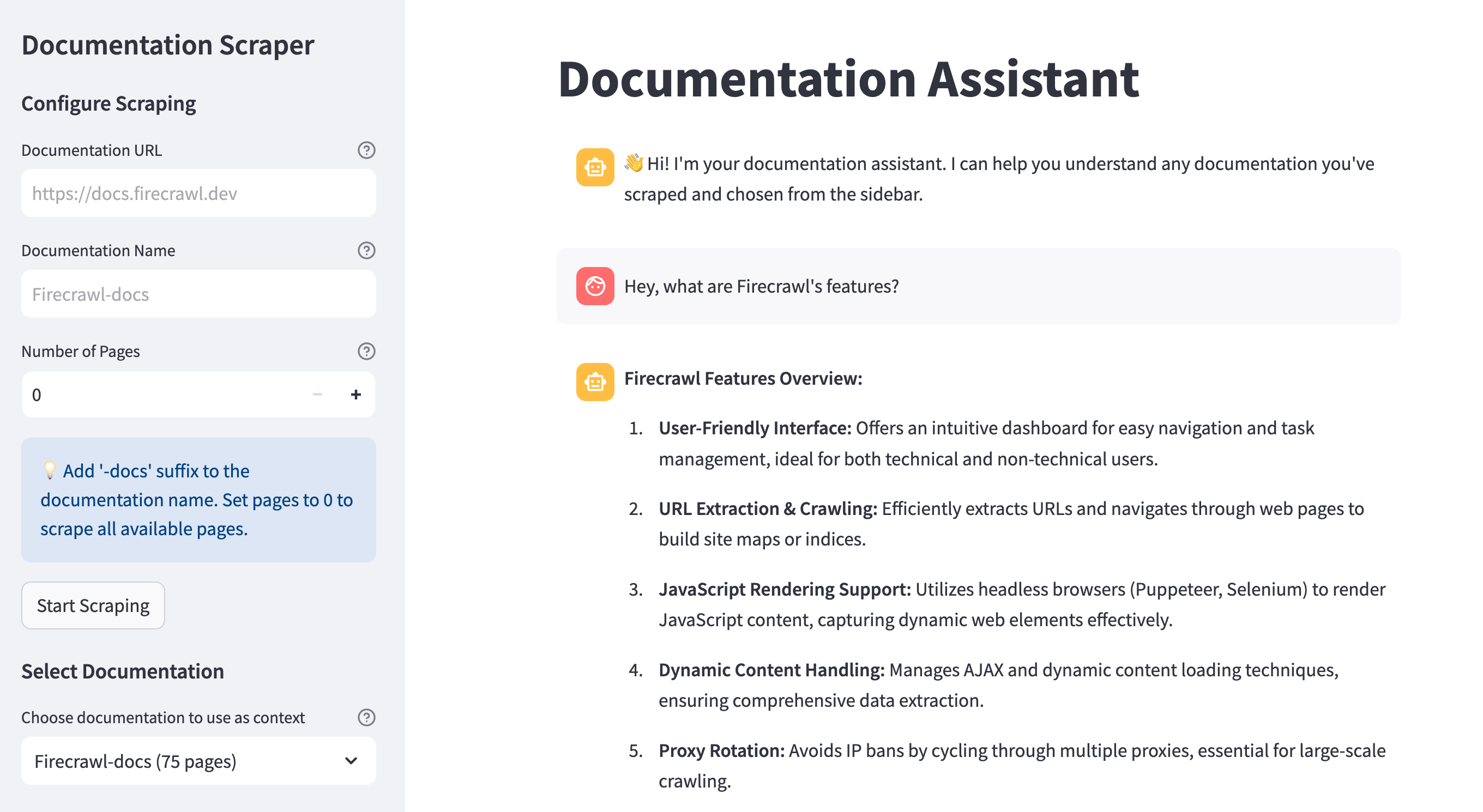

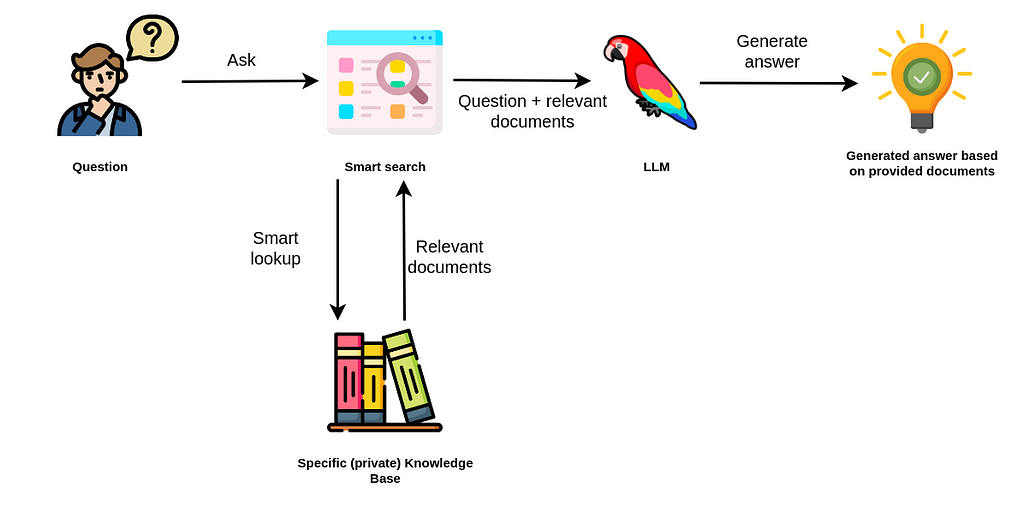

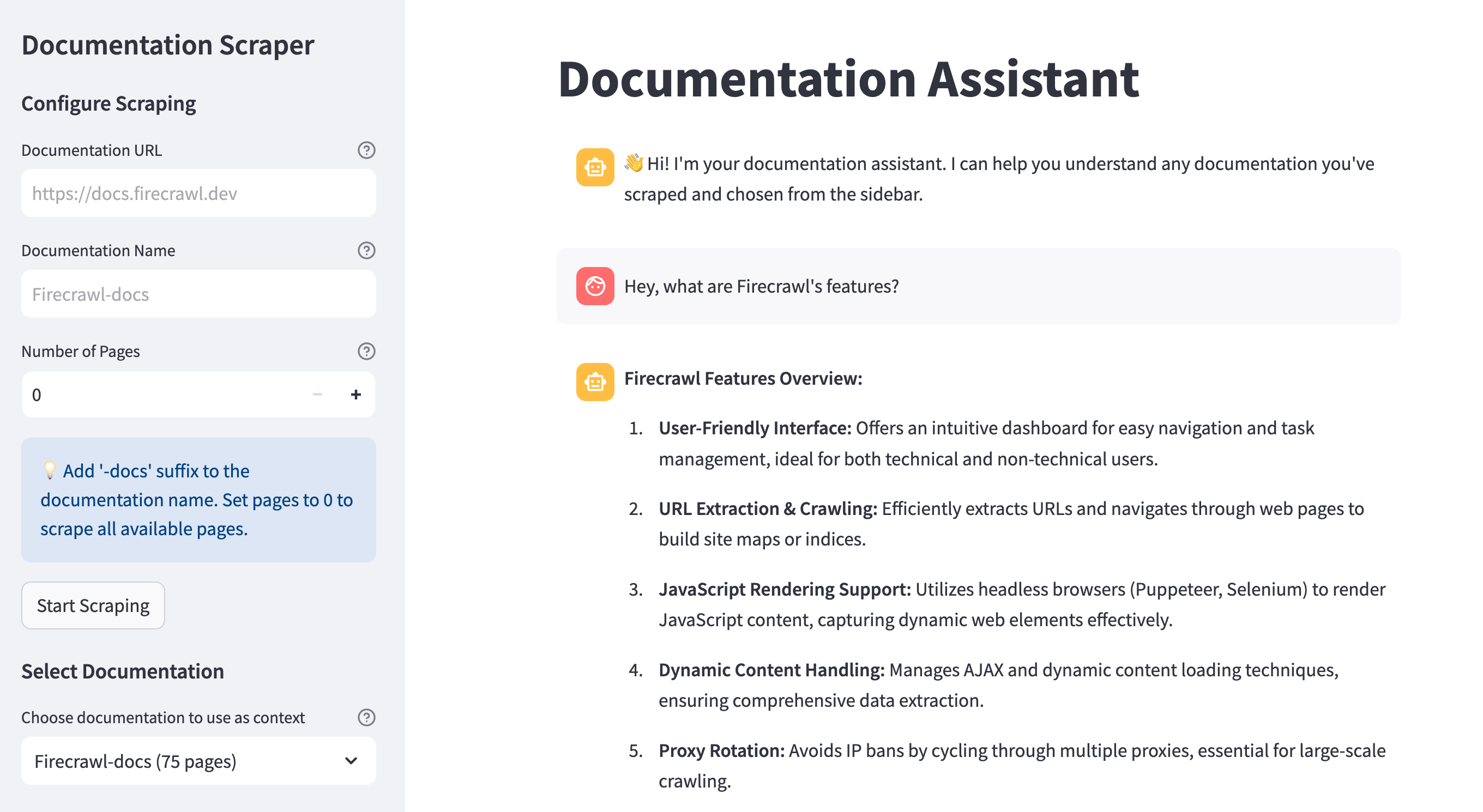

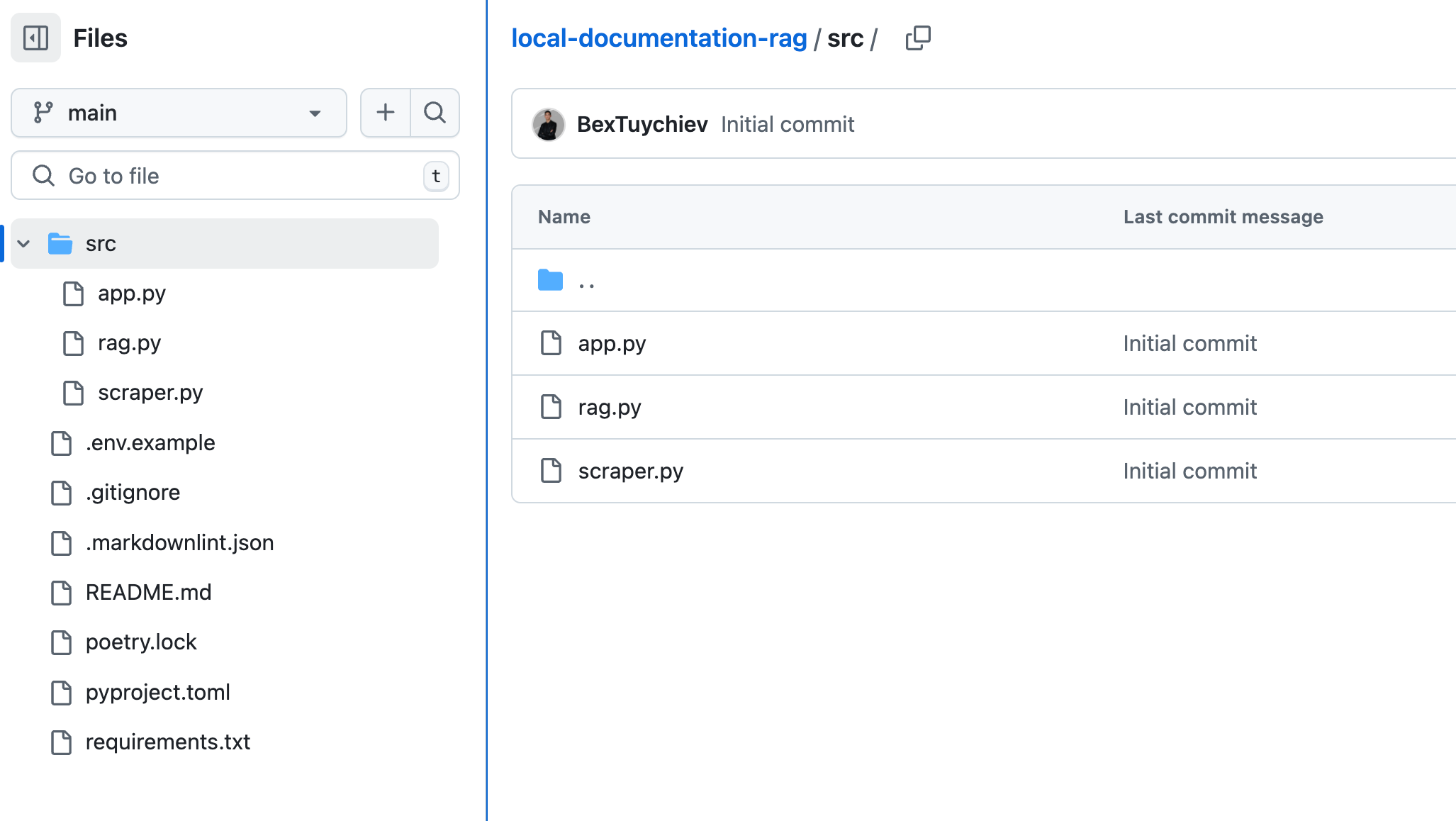

[\\

**Building an Intelligent Code Documentation RAG Assistant with DeepSeek and Firecrawl** \\

Learn how to build an intelligent documentation assistant powered by DeepSeek and RAG (Retrieval Augmented Generation) that can answer questions about any documentation website by combining language models with efficient information retrieval.\\

\\

By Bex TuychievFeb 10, 2025](https://www.firecrawl.dev/blog/deepseek-rag-documentation-assistant)

[\\

**Automated Data Collection - A Comprehensive Guide** \\

Learn how to build robust automated data collection systems using modern tools and best practices. This guide covers everything from selecting the right tools to implementing scalable collection pipelines.\\

\\

By Bex TuychievFeb 2, 2025](https://www.firecrawl.dev/blog/automated-data-collection-guide)

[\\

**Building an AI Resume Job Matching App With Firecrawl And Claude** \\

Learn how to build an AI-powered job matching system that automatically scrapes job postings, parses resumes, evaluates opportunities using Claude, and sends Discord alerts for matching positions using Firecrawl, Streamlit, and Supabase.\\

\\

By Bex TuychievFeb 1, 2025](https://www.firecrawl.dev/blog/ai-resume-parser-job-matcher-python)

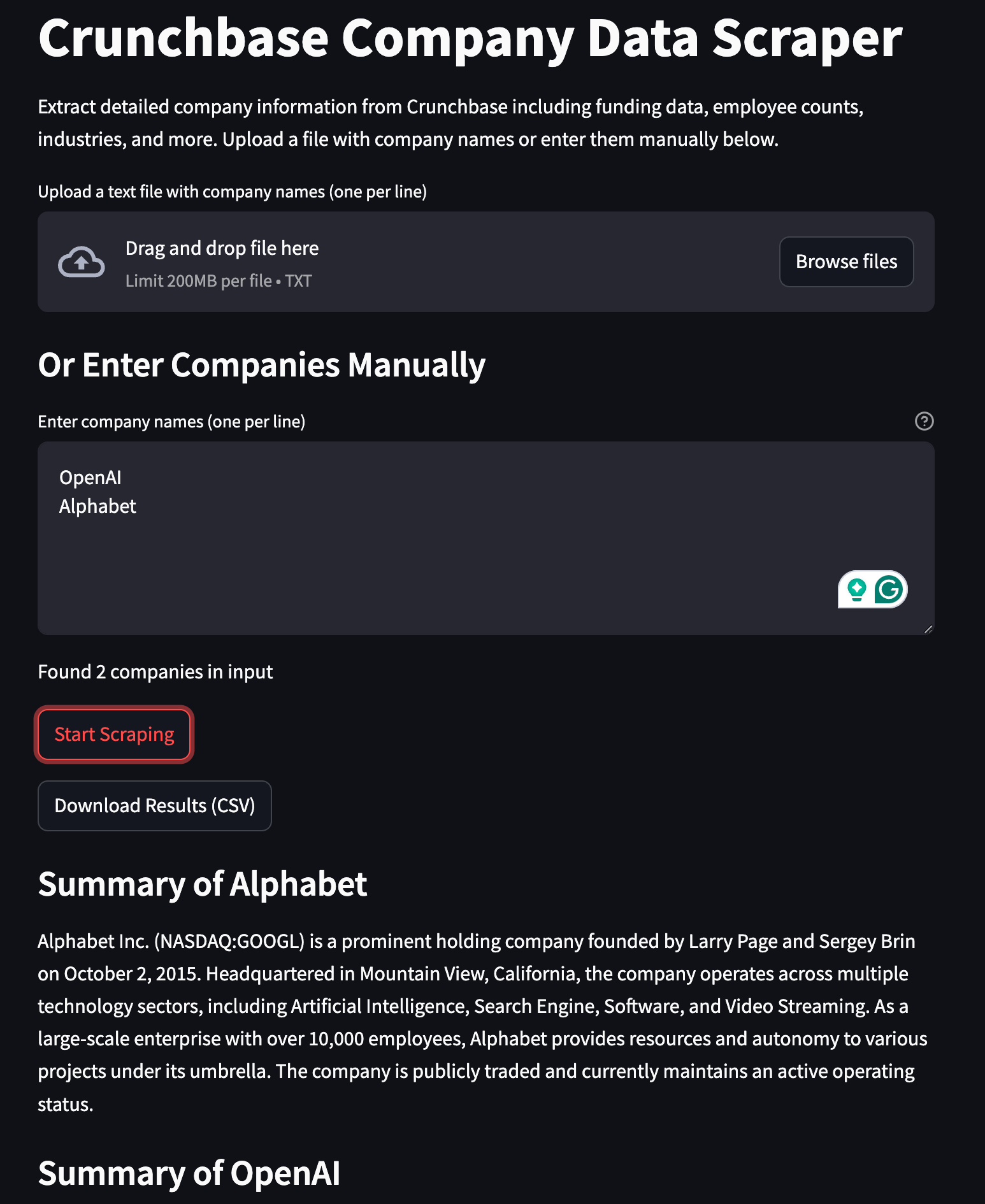

[\\

**Scraping Company Data and Funding Information in Bulk With Firecrawl and Claude** \\

Learn how to build a web scraper in Python that gathers company details, funding rounds, and investor information from public sources like Crunchbase using Firecrawl and Claude for automated data collection and analysis.\\

\\

By Bex TuychievJan 31, 2025](https://www.firecrawl.dev/blog/crunchbase-scraping-with-firecrawl-claude)

[\\

**Mastering the Extract Endpoint in Firecrawl** \\

Learn how to use Firecrawl's extract endpoint to automatically gather structured data from any website using AI. Build powerful web scrapers, create training datasets, and enrich your data without writing complex code.\\

\\

By Bex TuychievJan 23, 2025](https://www.firecrawl.dev/blog/mastering-firecrawl-extract-endpoint)

[\\

**Introducing /extract: Get structured web data with just a prompt** \\

Our new /extract endpoint harnesses AI to turn any website into structured data for your applications seamlessly.\\

\\

By Eric CiarlaJanuary 20, 2025](https://www.firecrawl.dev/blog/introducing-extract-open-beta)

[\\

**How to Build a Bulk Sales Lead Extractor in Python Using AI** \\

Learn how to build an automated sales lead extraction tool in Python that uses AI to scrape company information from websites, exports data to Excel, and streamlines the lead generation process using Firecrawl and Streamlit.\\

\\

By Bex TuychievJan 12, 2025](https://www.firecrawl.dev/blog/sales-lead-extractor-python-ai)

[\\

**Building a Trend Detection System with AI in TypeScript: A Step-by-Step Guide** \\

Learn how to build an automated trend detection system in TypeScript that monitors social media and news sites, analyzes content with AI, and sends real-time Slack alerts using Firecrawl, Together AI, and GitHub Actions.\\

\\

By Bex TuychievJan 11, 2025](https://www.firecrawl.dev/blog/trend-finder-typescript)

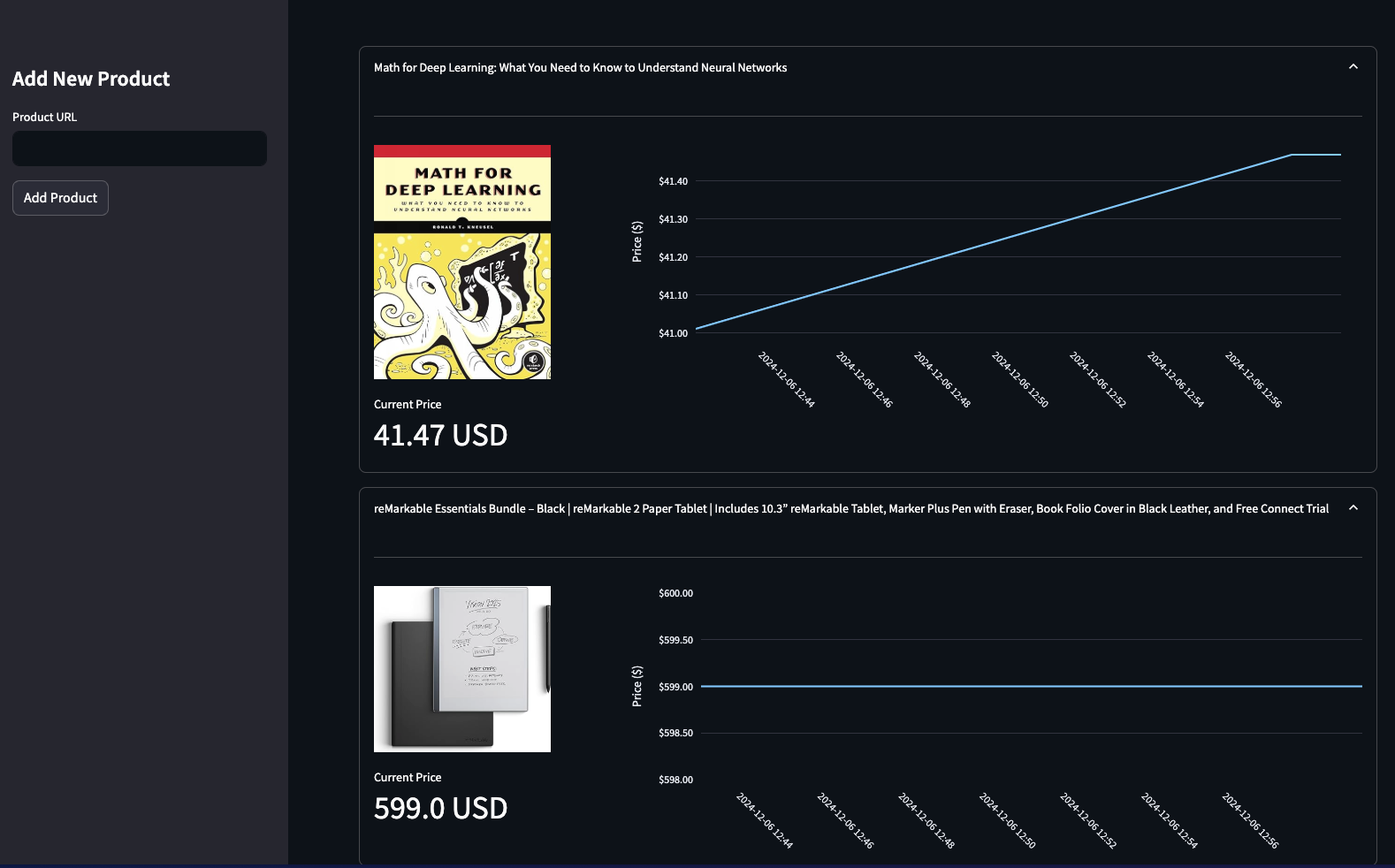

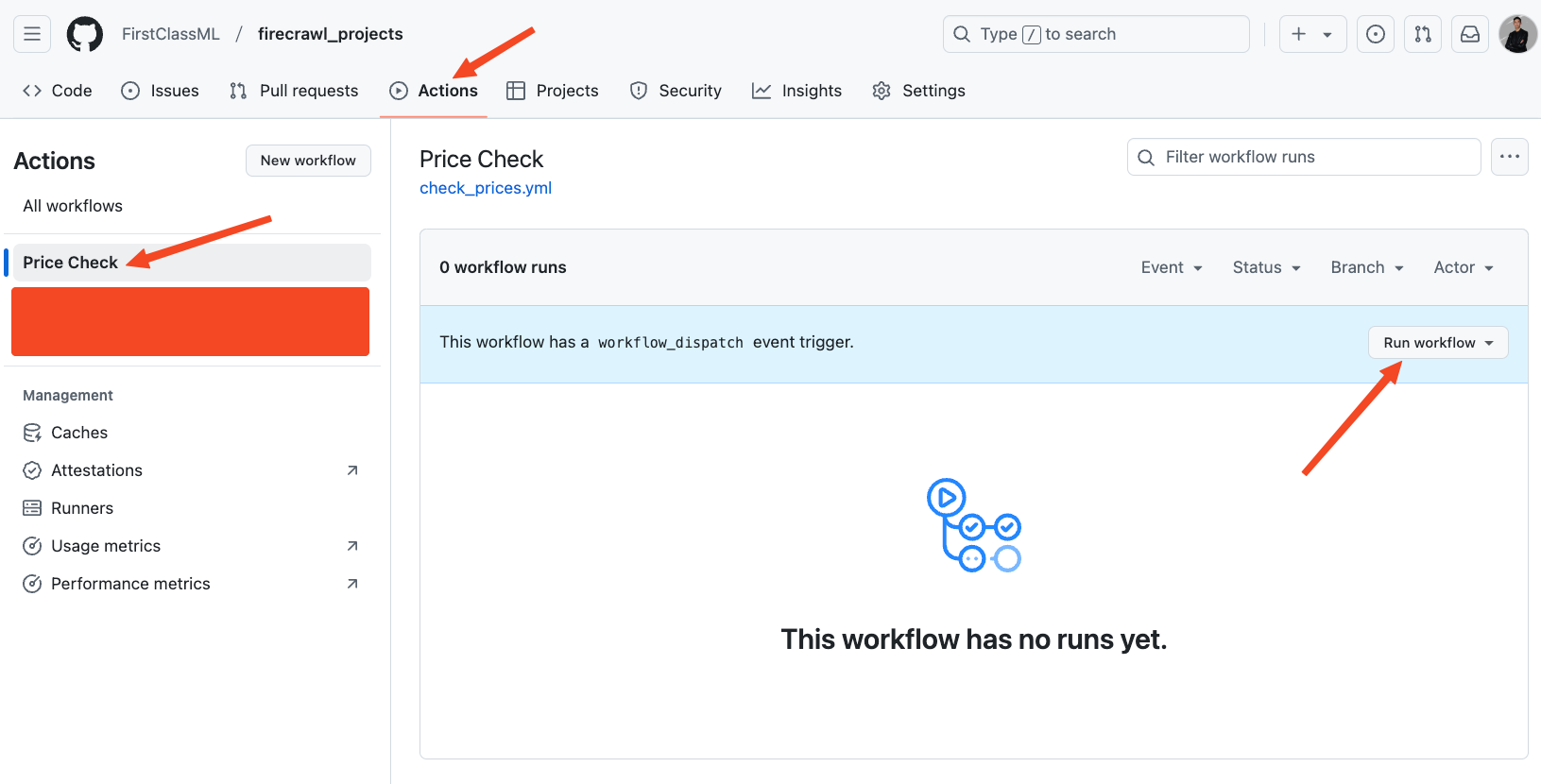

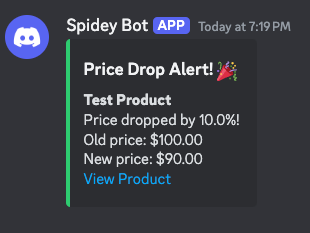

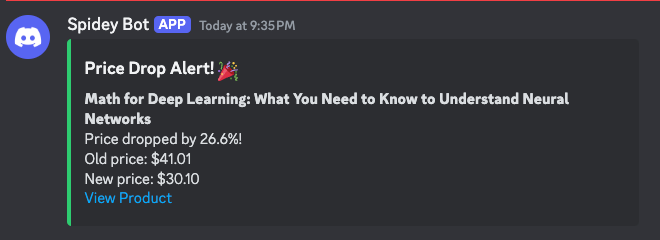

[\\

**How to Build an Automated Competitor Price Monitoring System with Python** \\

Learn how to build an automated competitor price monitoring system in Python that tracks prices across e-commerce sites, provides real-time comparisons, and maintains price history using Firecrawl, Streamlit, and GitHub Actions.\\

\\

By Bex TuychievJan 6, 2025](https://www.firecrawl.dev/blog/automated-competitor-price-scraping)

[\\

**How Stack AI Uses Firecrawl to Power AI Agents** \\

Discover how Stack AI leverages Firecrawl to seamlessly feed agentic AI workflows with high-quality web data.\\

\\

By Jonathan KleimanJan 3, 2025](https://www.firecrawl.dev/blog/how-stack-ai-uses-firecrawl-to-power-ai-agents)

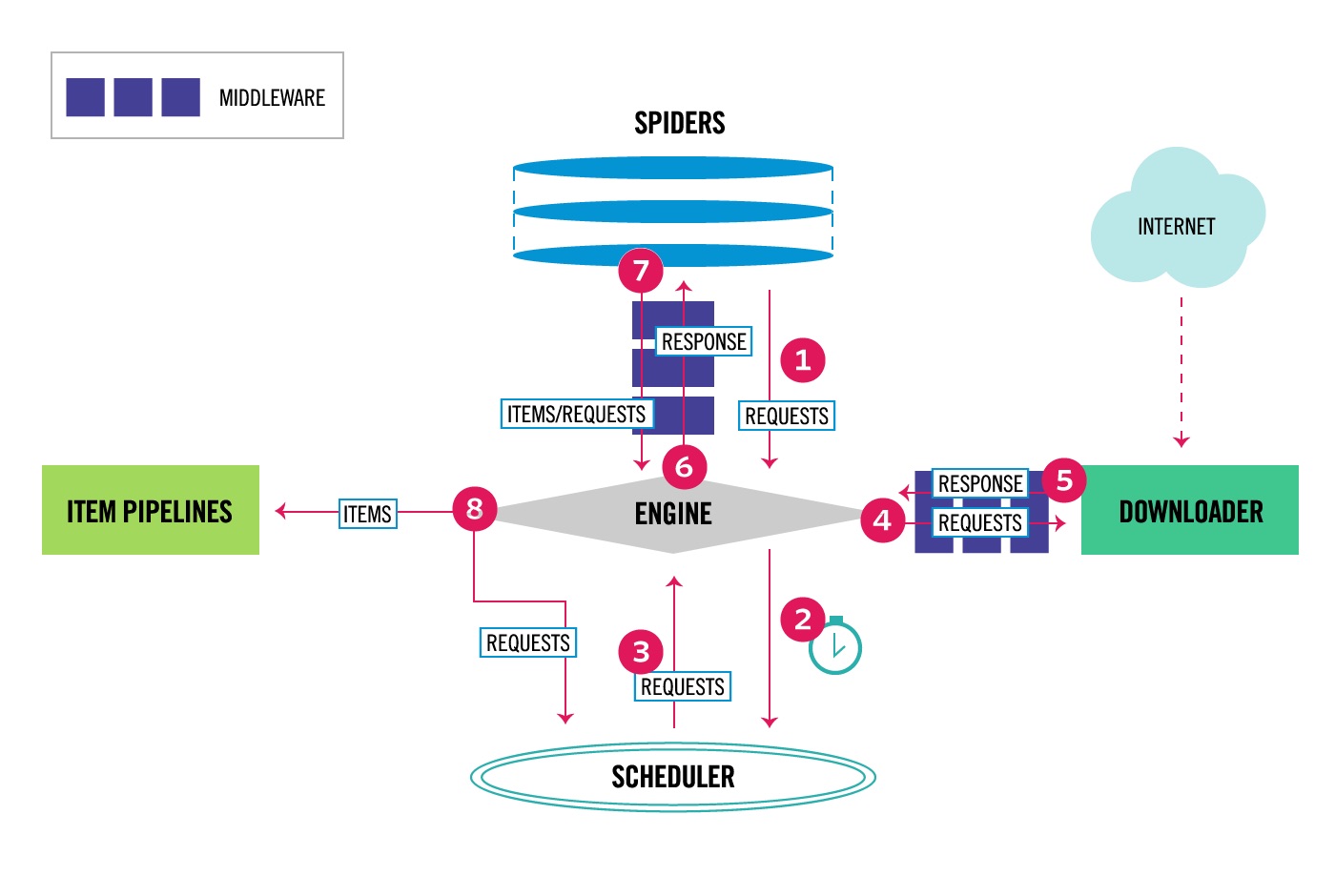

[\\

**BeautifulSoup4 vs. Scrapy - A Comprehensive Comparison for Web Scraping in Python** \\

Learn the key differences between BeautifulSoup4 and Scrapy for web scraping in Python. Compare their features, performance, and use cases to choose the right tool for your web scraping needs.\\

\\

By Bex TuychievDec 24, 2024](https://www.firecrawl.dev/blog/beautifulsoup4-vs-scrapy-comparison)

[\\

**15 Python Web Scraping Projects: From Beginner to Advanced** \\

Explore 15 hands-on web scraping projects in Python, from beginner to advanced level. Learn essential concepts like data extraction, concurrent processing, and distributed systems while building real-world applications.\\

\\

By Bex TuychievDec 17, 2024](https://www.firecrawl.dev/blog/python-web-scraping-projects)

[\\

**How to Deploy Python Web Scrapers** \\

Learn how to deploy Python web scrapers using GitHub Actions, Heroku, PythonAnywhere and more.\\

\\

By Bex TuychievDec 16, 2024](https://www.firecrawl.dev/blog/deploy-web-scrapers)

[\\

**Why Companies Need a Data Strategy for Generative AI** \\

Learn why a well-defined data strategy is essential for building robust, production-ready generative AI systems, and discover practical steps for curation, maintenance, and integration.\\

\\

By Eric CiarlaDec 15, 2024](https://www.firecrawl.dev/blog/why-companies-need-a-data-strategy-for-generative-ai)

[\\

**Data Enrichment: A Complete Guide to Enhancing Your Data Quality** \\

Learn how to enrich your data quality with a comprehensive guide covering data enrichment tools, best practices, and real-world examples. Discover how to leverage modern solutions like Firecrawl to automate data collection, validation, and integration for better business insights.\\

\\

By Bex TuychievDec 14, 2024](https://www.firecrawl.dev/blog/complete-guide-to-data-enrichment)

[\\

**A Complete Guide Scraping Authenticated Websites with cURL and Firecrawl** \\

Learn how to scrape login-protected websites using cURL and Firecrawl API. Step-by-step guide covering basic auth, tokens, and cookies with real examples.\\

\\

By Rudrank RiyamDec 13, 2024](https://www.firecrawl.dev/blog/complete-guide-to-curl-authentication-firecrawl-api)

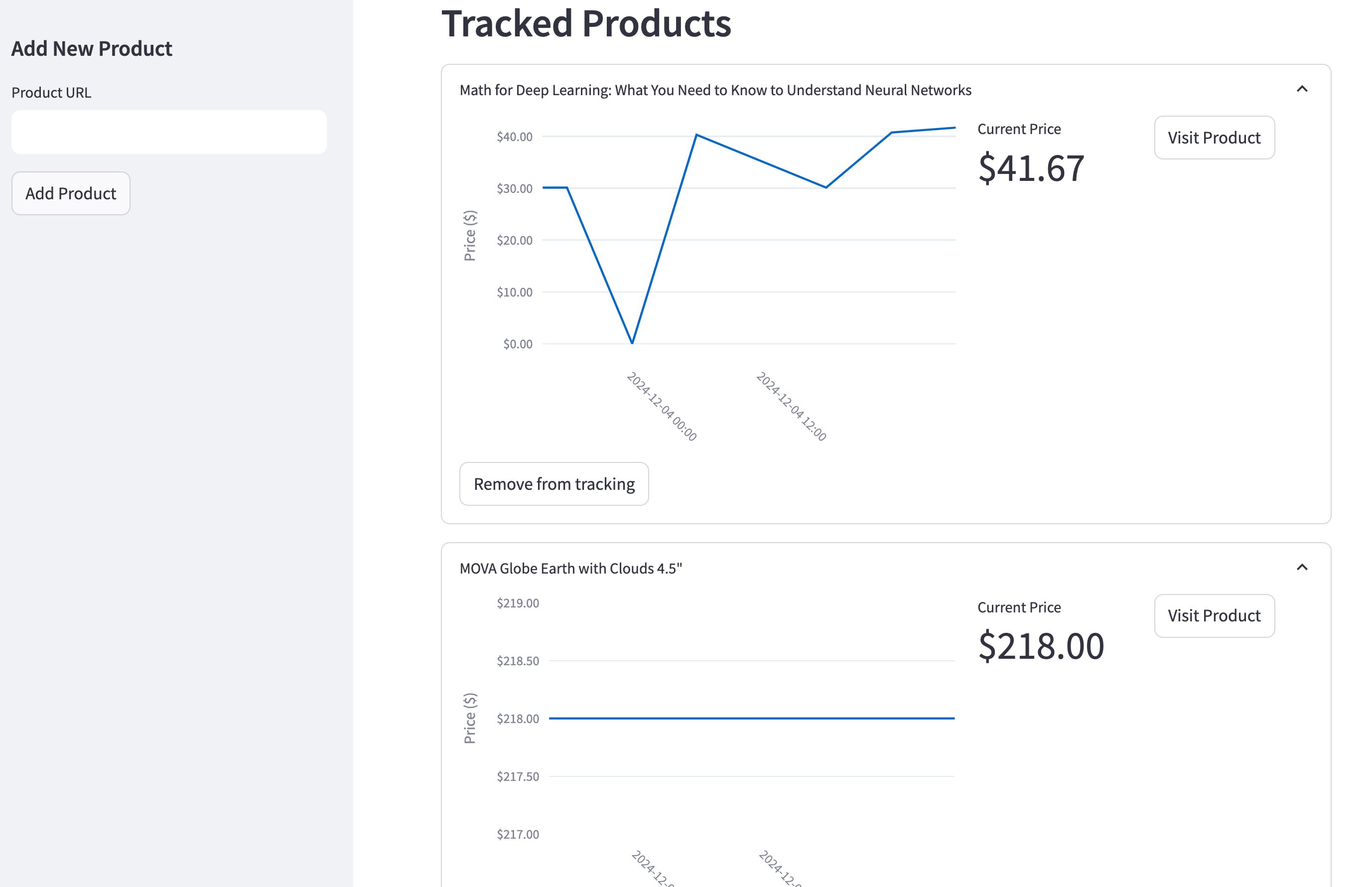

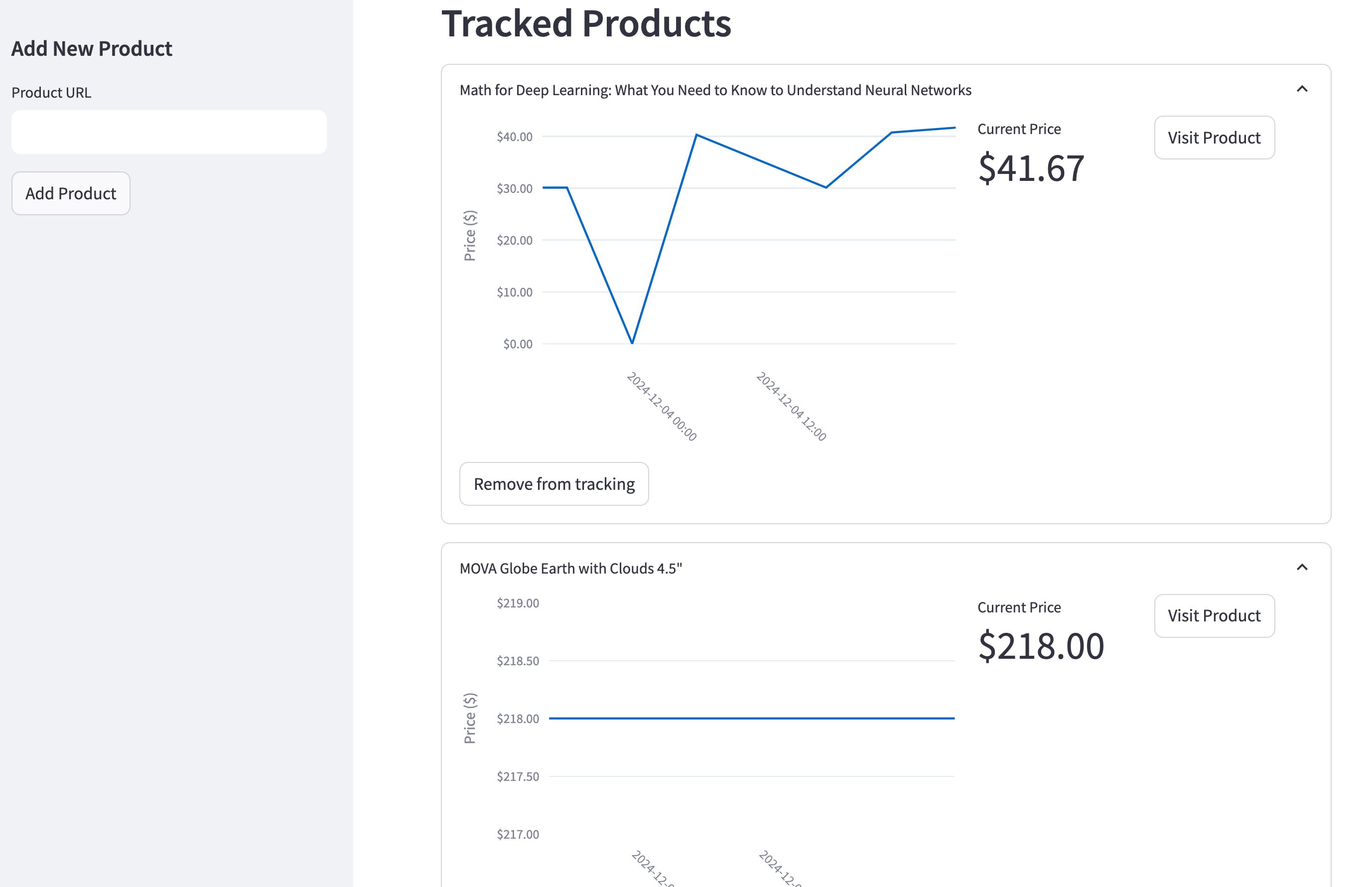

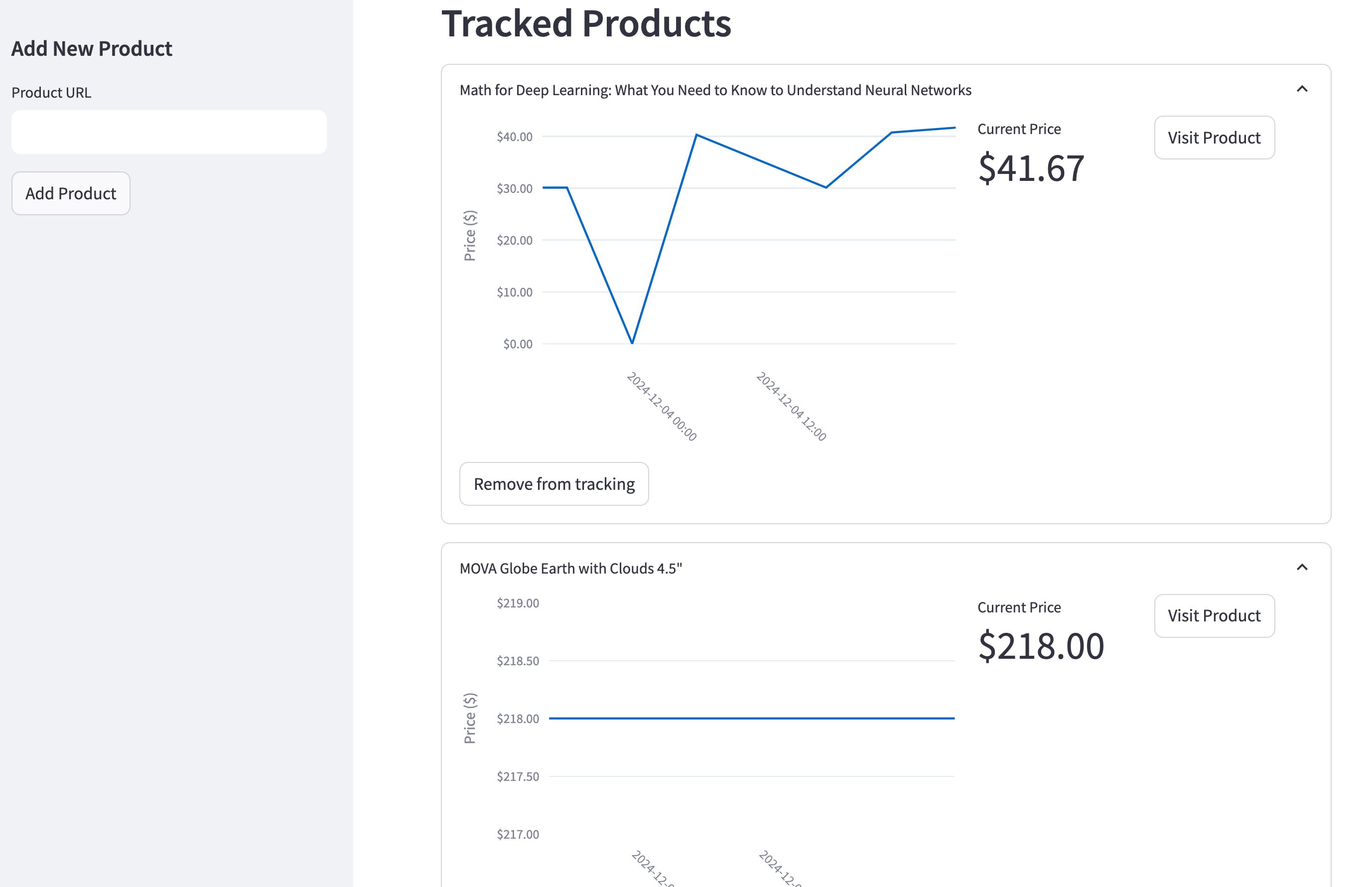

[\\

**Building an Automated Price Tracking Tool** \\

Build an automated e-commerce price tracker in Python. Learn web scraping, price monitoring, and automated alerts using Firecrawl, Streamlit, PostgreSQL.\\

\\

By Bex TuychievDec 9, 2024](https://www.firecrawl.dev/blog/automated-price-tracking-tutorial-python)

[\\

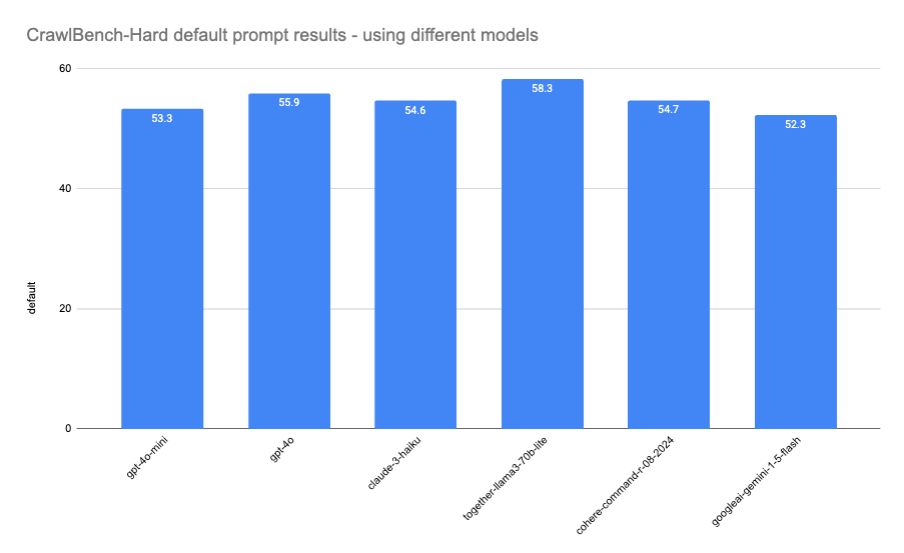

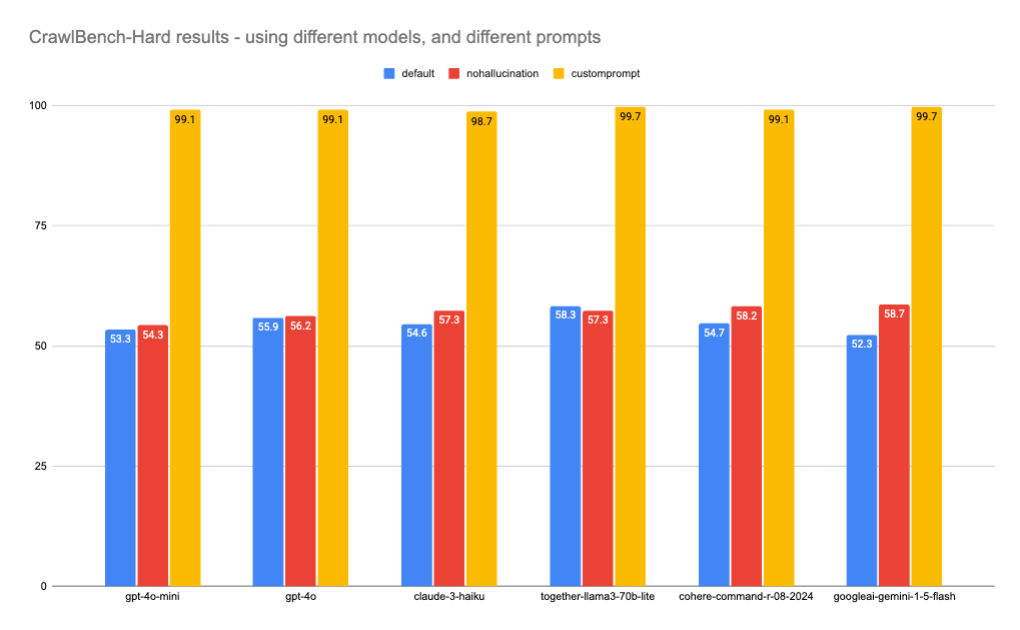

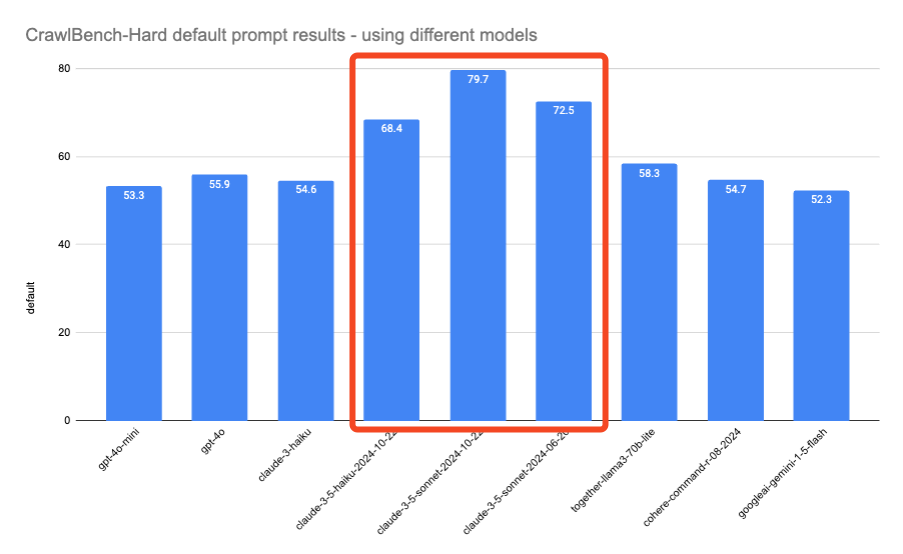

**Evaluating Web Data Extraction with CrawlBench** \\

An in-depth exploration of CrawlBench, a benchmark for testing LLM-based web data extraction.\\

\\

By SwyxDec 9, 2024](https://www.firecrawl.dev/blog/crawlbench-llm-extraction)

[\\

**How Cargo Empowers GTM Teams with Firecrawl** \\

See how Cargo uses Firecrawl to instantly analyze webpage content and power Go-To-Market workflows for their users.\\

\\

By Tariq MinhasDec 6, 2024](https://www.firecrawl.dev/blog/how-cargo-empowers-gtm-teams-with-firecrawl)

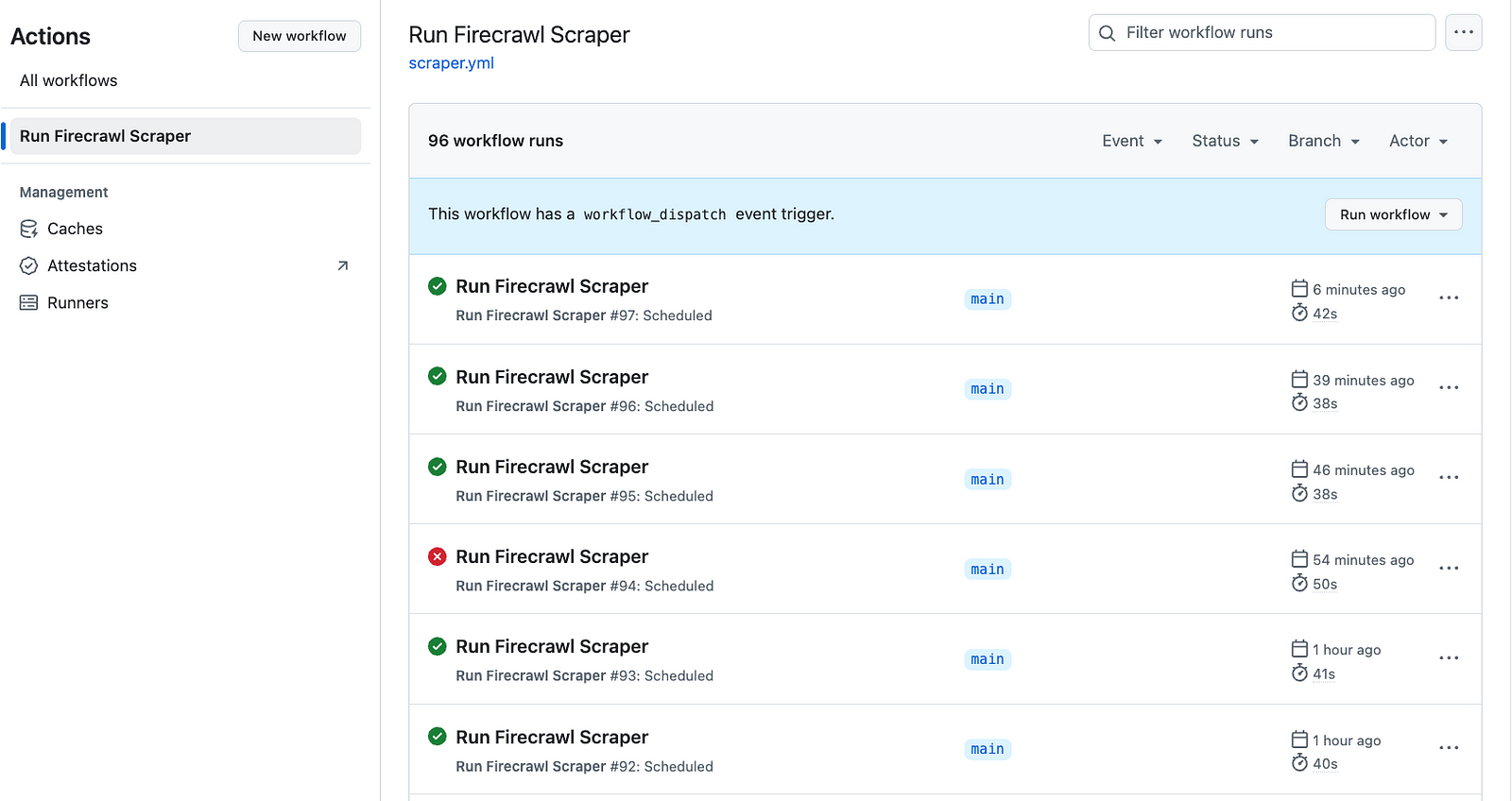

[\\

**Web Scraping Automation: How to Run Scrapers on a Schedule** \\

Learn how to automate web scraping in Python using free tools like schedule, asyncio, cron jobs and GitHub Actions. This comprehensive guide covers local and cloud-based scheduling methods to run scrapers reliably in 2025.\\

\\

By Bex TuychievDec 5, 2024](https://www.firecrawl.dev/blog/automated-web-scraping-free-2025)

[\\

**How to Generate Sitemaps Using Firecrawl's /map Endpoint: A Complete Guide** \\

Learn how to generate XML and visual sitemaps using Firecrawl's /map endpoint. Step-by-step guide with Python code examples, performance comparisons, and interactive visualization techniques for effective website mapping.\\

\\

By Bex TuychievNov 29, 2024](https://www.firecrawl.dev/blog/how-to-generate-sitemaps-using-firecrawl-map-endpoint)

[\\

**How to Use Firecrawl's Scrape API: Complete Web Scraping Tutorial** \\

Learn how to scrape websites using Firecrawl's /scrape endpoint. Master JavaScript rendering, structured data extraction, and batch operations with Python code examples.\\

\\

By Bex TuychievNov 25, 2024](https://www.firecrawl.dev/blog/mastering-firecrawl-scrape-endpoint)

[\\

**How to Create an llms.txt File for Any Website** \\

Learn how to generate an llms.txt file for any website using the llms.txt Generator and Firecrawl.\\

\\

By Eric CiarlaNov 22, 2024](https://www.firecrawl.dev/blog/How-to-Create-an-llms-txt-File-for-Any-Website)

[\\

**Mastering Firecrawl's Crawl Endpoint: A Complete Web Scraping Guide** \\

Learn how to use Firecrawl's /crawl endpoint for efficient web scraping. Master URL control, performance optimization, and integration with LangChain for AI-powered data extraction.\\

\\

By Bex TuychievNov 18, 2024](https://www.firecrawl.dev/blog/mastering-the-crawl-endpoint-in-firecrawl)

[\\

**Getting Started with OpenAI's Predicted Outputs for Faster LLM Responses** \\

A guide to leveraging Predicted Outputs to speed up LLM tasks with GPT-4o models.\\

\\

By Eric CiarlaNov 5, 2024](https://www.firecrawl.dev/blog/getting-started-with-predicted-outputs-openai)

[\\

**Launch Week II Recap** \\

Recapping all the exciting announcements from Firecrawl's second Launch Week.\\

\\

By Eric CiarlaNovember 4, 2024](https://www.firecrawl.dev/blog/launch-week-ii-recap)

[\\

**Launch Week II - Day 7: Introducing Faster Markdown Parsing** \\

Our new HTML to Markdown parser is 4x faster, more reliable, and produces cleaner Markdown, built from the ground up for speed and performance.\\

\\

By Eric CiarlaNovember 3, 2024](https://www.firecrawl.dev/blog/launch-week-ii-day-7-introducing-faster-markdown-parsing)

[\\

**Launch Week II - Day 6: Introducing Mobile Scraping and Mobile Screenshots** \\

Interact with sites as if from a mobile device using Firecrawl's new mobile device emulation.\\

\\

By Eric CiarlaNovember 2, 2024](https://www.firecrawl.dev/blog/launch-week-ii-day-6-introducing-mobile-scraping)

[\\

**Launch Week II - Day 5: Introducing New Actions** \\

Capture page content at any point and wait for specific elements with our new Scrape and Wait for Selector actions.\\

\\

By Eric CiarlaNovember 1, 2024](https://www.firecrawl.dev/blog/launch-week-ii-day-5-introducing-two-new-actions)

[\\

**Launch Week II - Day 4: Advanced iframe Scraping** \\

We are thrilled to announce comprehensive iframe scraping support in Firecrawl, enabling seamless handling of nested iframes, dynamically loaded content, and cross-origin frames.\\

\\

By Eric CiarlaOctober 31, 2024](https://www.firecrawl.dev/blog/launch-week-ii-day-4-advanced-iframe-scraping)

[\\

**Launch Week II - Day 3: Introducing Credit Packs** \\

Easily top up your plan with Credit Packs to keep your web scraping projects running smoothly. Plus, manage your credits effortlessly with our new Auto Recharge feature.\\

\\

By Eric CiarlaOctober 30, 2024](https://www.firecrawl.dev/blog/launch-week-ii-day-3-introducing-credit-packs)

[\\

**Launch Week II - Day 2: Introducing Location and Language Settings** \\

Specify country and preferred languages to get relevant localized content, enhancing your web scraping results with region-specific data.\\

\\

By Eric CiarlaOctober 29, 2024](https://www.firecrawl.dev/blog/launch-week-ii-day-2-introducing-location-language-settings)

[\\

**Launch Week II - Day 1: Introducing the Batch Scrape Endpoint** \\

Our new Batch Scrape endpoint lets you scrape multiple URLs simultaneously, making bulk data collection faster and more efficient.\\

\\

By Eric CiarlaOctober 28, 2024](https://www.firecrawl.dev/blog/launch-week-ii-day-1-introducing-batch-scrape-endpoint)

[\\

**Getting Started with Grok-2: Setup and Web Crawler Example** \\

A detailed guide on setting up Grok-2 and building a web crawler using Firecrawl.\\

\\

By Nicolas CamaraOct 21, 2024](https://www.firecrawl.dev/blog/grok-2-setup-and-web-crawler-example)

[\\

**OpenAI Swarm Tutorial: Create Marketing Campaigns for Any Website** \\

A guide to building a multi-agent system using OpenAI Swarm and Firecrawl for AI-driven marketing strategies\\

\\

By Nicolas CamaraOct 12, 2024](https://www.firecrawl.dev/blog/openai-swarm-agent-tutorial)

[\\

**Using OpenAI's Realtime API and Firecrawl to Talk with Any Website** \\

Build a real-time conversational agent that interacts with any website using OpenAI's Realtime API and Firecrawl.\\

\\

By Nicolas CamaraOct 11, 2024](https://www.firecrawl.dev/blog/How-to-Talk-with-Any-Website-Using-OpenAIs-Realtime-API-and-Firecrawl)

[\\

**Scraping Job Boards Using Firecrawl Actions and OpenAI** \\

A step-by-step guide to scraping job boards and extracting structured data using Firecrawl and OpenAI.\\

\\

By Eric CiarlaSept 27, 2024](https://www.firecrawl.dev/blog/scrape-job-boards-firecrawl-openai)

[\\

**Build a Full-Stack AI Web App in 12 Minutes** \\

Build a Full-Stack AI Web App in 12 minutes with Cursor, OpenAI o1, V0, Firecrawl & Patched\\

\\

By Dev DigestSep 18, 2024](https://www.firecrawl.dev/blog/Build-a-Full-Stack-AI-Web-App-in-12-Minutes)

[\\

**How to Use OpenAI's o1 Reasoning Models in Your Applications** \\

Learn how to harness OpenAI's latest o1 series models for complex reasoning tasks in your apps.\\

\\

By Eric CiarlaSep 16, 2024](https://www.firecrawl.dev/blog/how-to-use-openai-o1-reasoning-models-in-applications)

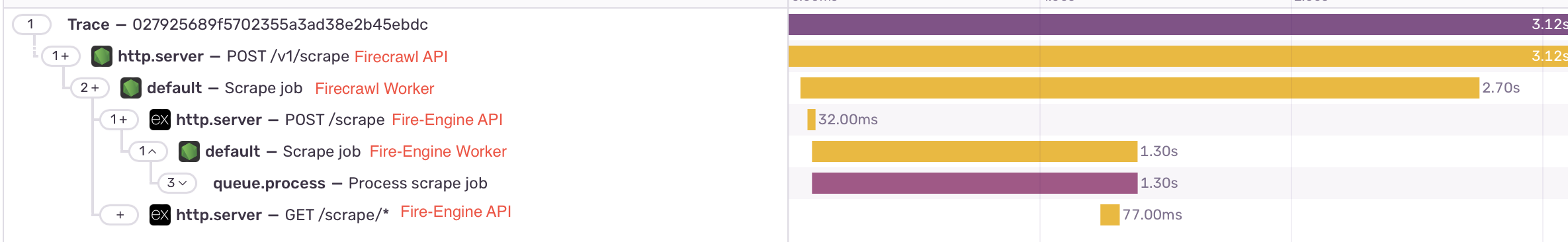

[\\

**Handling 300k requests per day: an adventure in scaling** \\

Putting out fires was taking up all our time, and we had to scale fast. This is how we did it.\\

\\

By Gergő Móricz (mogery)Sep 13, 2024](https://www.firecrawl.dev/blog/an-adventure-in-scaling)

[\\

**How Athena Intelligence Empowers Enterprise Analysts with Firecrawl** \\

Discover how Athena Intelligence leverages Firecrawl to fuel its AI-native analytics platform for enterprise analysts.\\

\\

By Ben ReillySep 10, 2024](https://www.firecrawl.dev/blog/how-athena-intelligence-empowers-analysts-with-firecrawl)

[\\

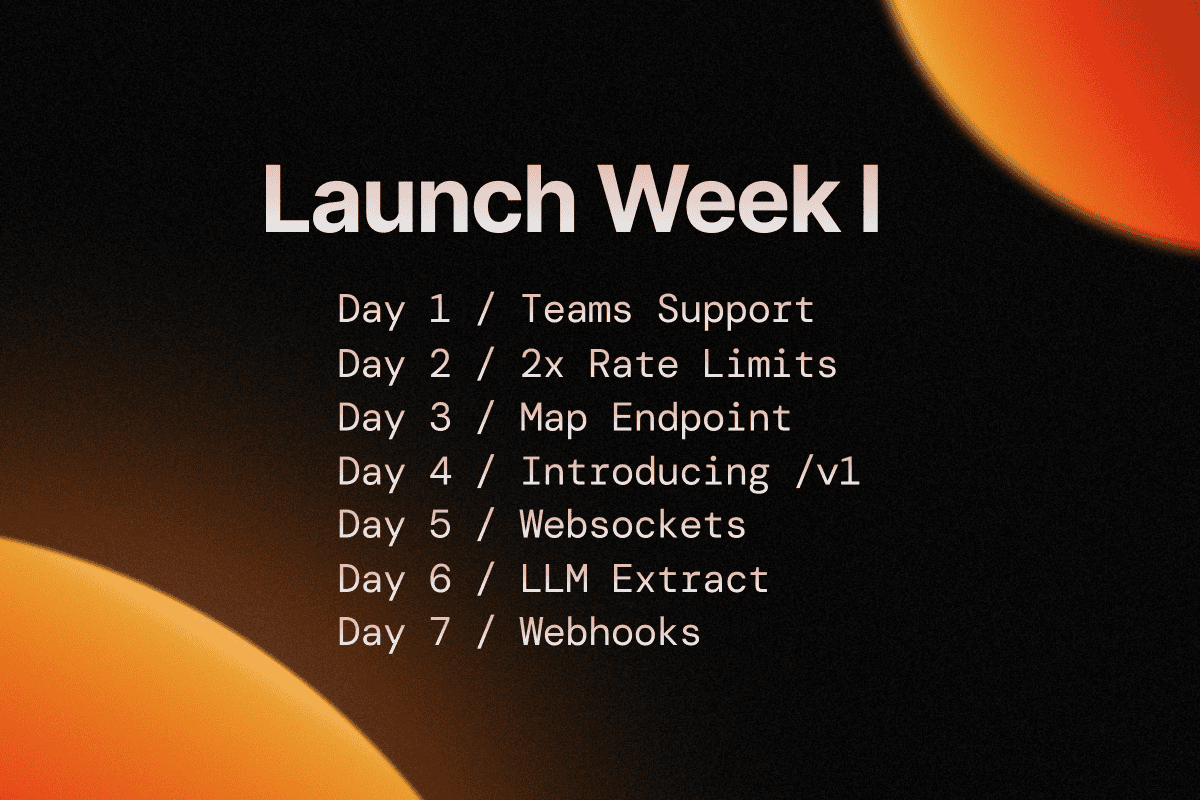

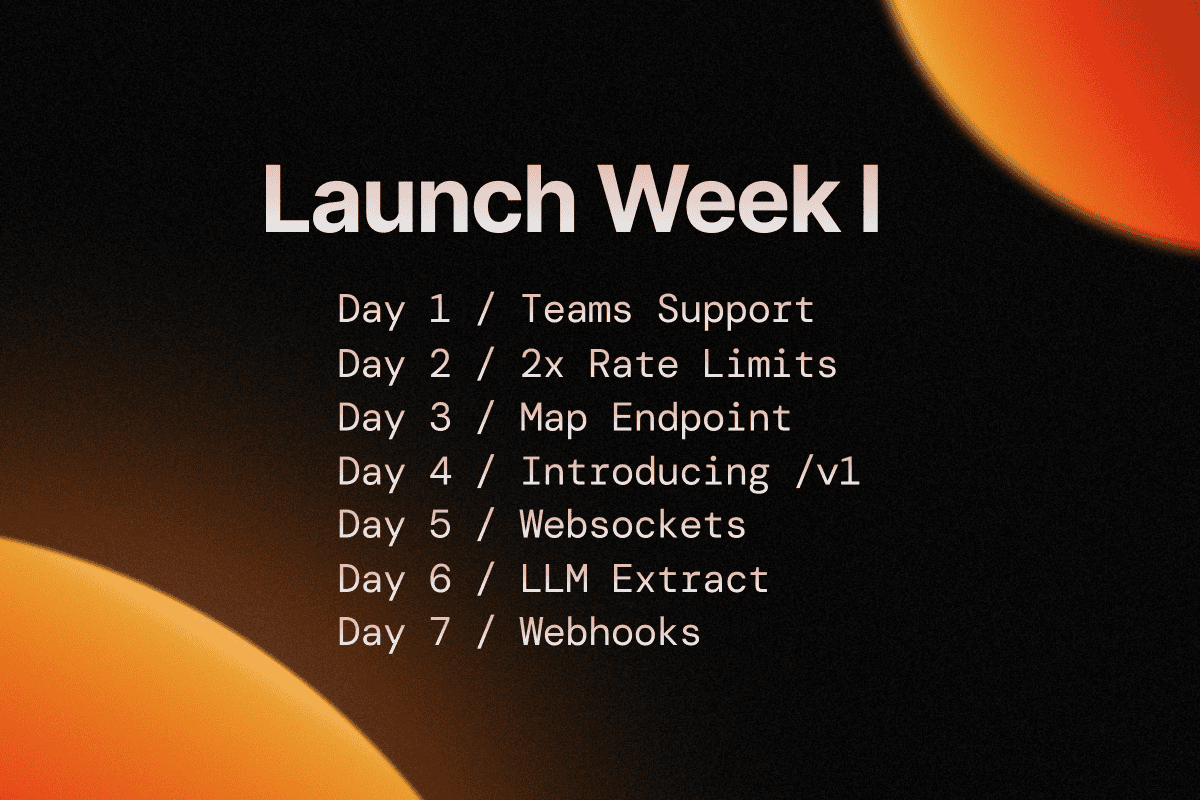

**Launch Week I Recap** \\

A look back at the new features and updates introduced during Firecrawl's inaugural Launch Week.\\

\\

By Eric CiarlaSeptember 2, 2024](https://www.firecrawl.dev/blog/firecrawl-launch-week-1-recap)

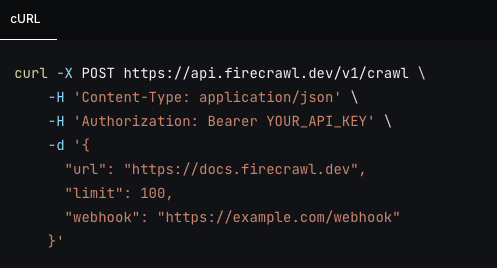

[\\

**Launch Week I / Day 7: Crawl Webhooks (v1)** \\

New /crawl webhook support. Send notifications to your apps during a crawl.\\

\\

By Nicolas CamaraSeptember 1, 2024](https://www.firecrawl.dev/blog/launch-week-i-day-7-webhooks)

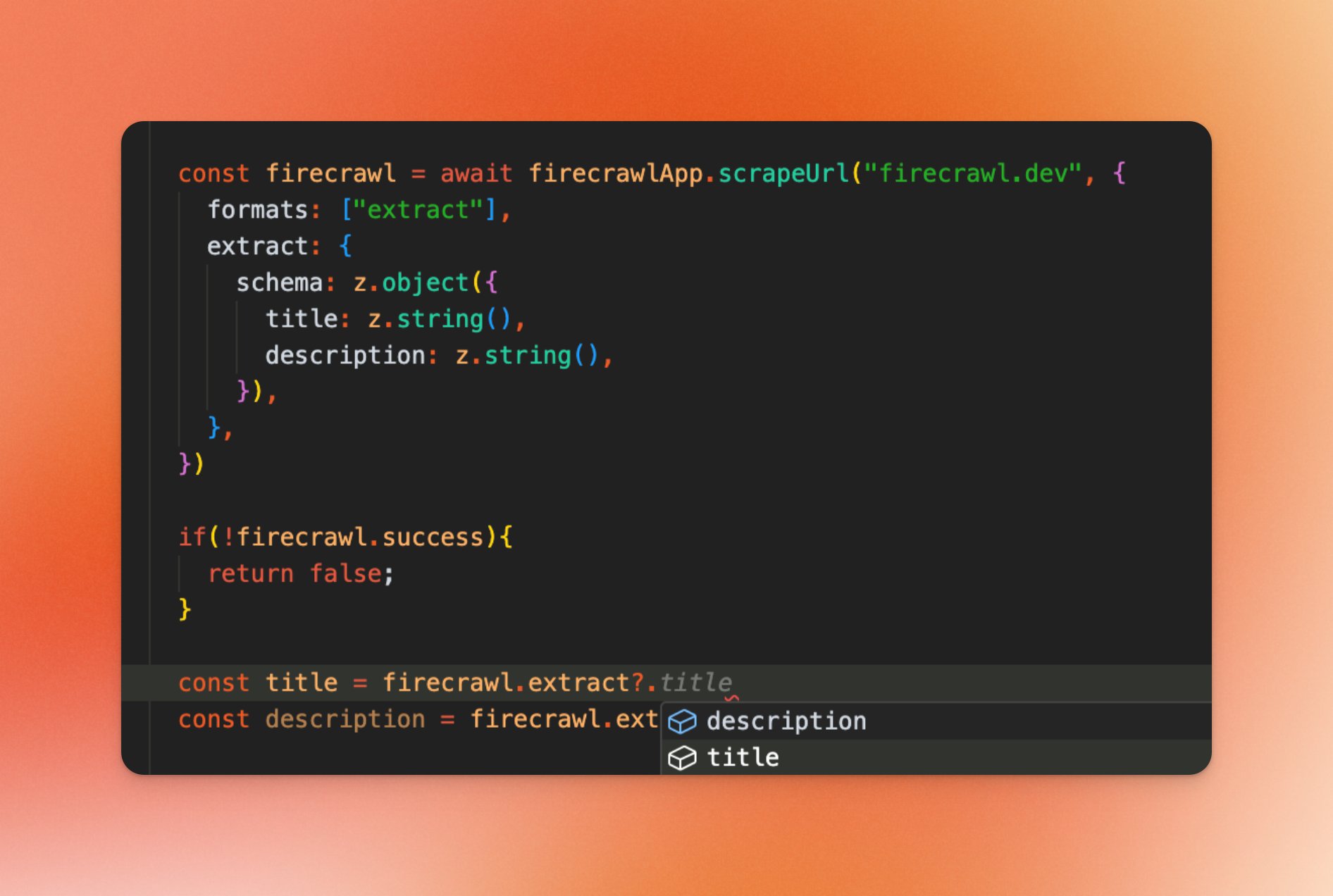

[\\

**Launch Week I / Day 6: LLM Extract (v1)** \\

Extract structured data from your web pages using the extract format in /scrape.\\

\\

By Nicolas CamaraAugust 31, 2024](https://www.firecrawl.dev/blog/launch-week-i-day-6-llm-extract)

[\\

**Launch Week I / Day 5: Real-Time Crawling with WebSockets** \\

Our new WebSocket-based method for real-time data extraction and monitoring.\\

\\

By Eric CiarlaAugust 30, 2024](https://www.firecrawl.dev/blog/launch-week-i-day-5-real-time-crawling-websockets)

[\\

**Launch Week I / Day 4: Introducing Firecrawl /v1** \\

Our biggest release yet - v1, a more reliable and developer-friendly API for seamless web data gathering.\\

\\

By Eric CiarlaAugust 29, 2024](https://www.firecrawl.dev/blog/launch-week-i-day-4-introducing-firecrawl-v1)

[\\

**Launch Week I / Day 3: Introducing the Map Endpoint** \\

Our new Map endpoint enables lightning-fast website mapping for enhanced web scraping projects.\\

\\

By Eric CiarlaAugust 28, 2024](https://www.firecrawl.dev/blog/launch-week-i-day-3-introducing-map-endpoint)

[\\

**Launch Week I / Day 2: 2x Rate Limits** \\

Firecrawl doubles rate limits across all plans, supercharging your web scraping capabilities.\\

\\

By Eric CiarlaAugust 27, 2024](https://www.firecrawl.dev/blog/launch-week-i-day-2-doubled-rate-limits)

[\\

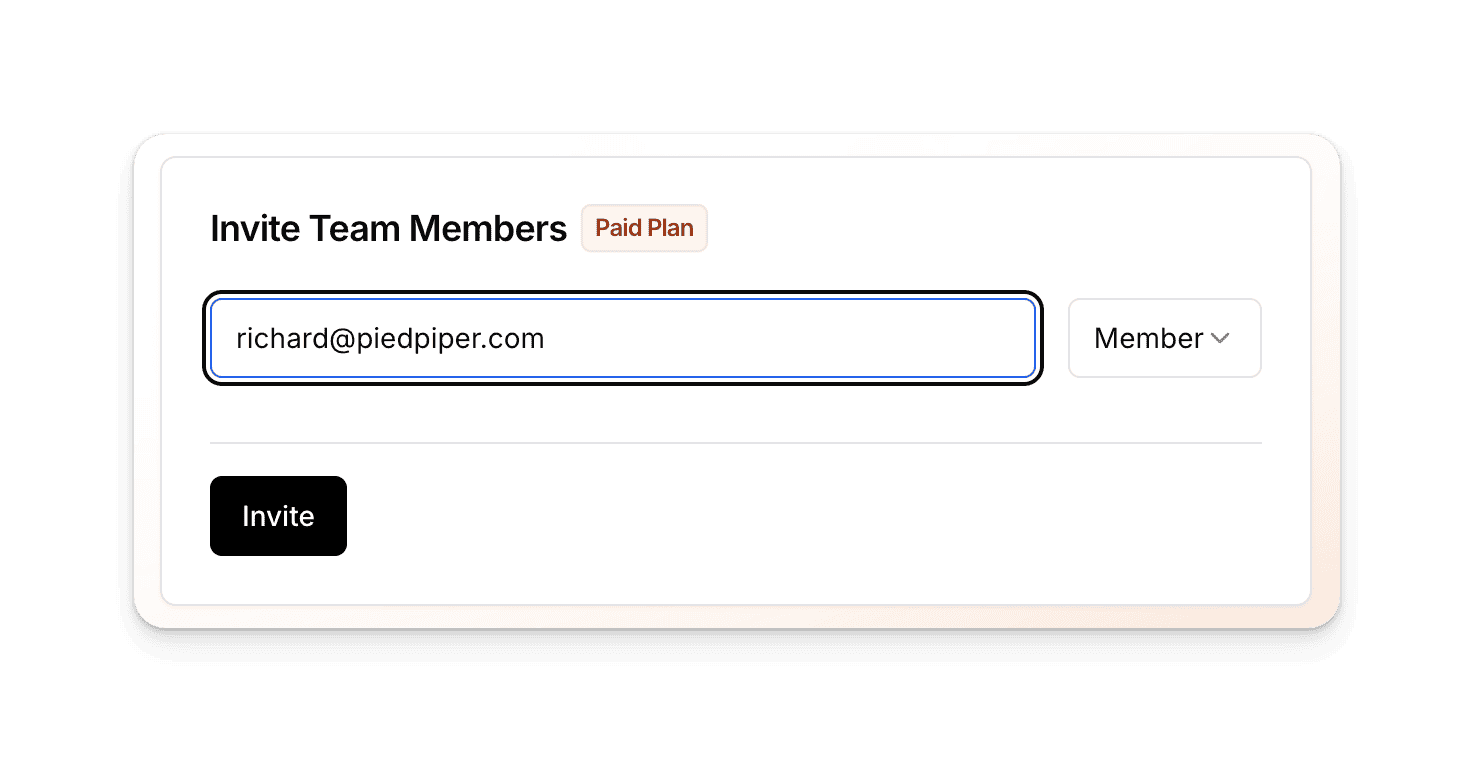

**Launch Week I / Day 1: Introducing Teams** \\

Our new Teams feature, enabling seamless collaboration on web scraping projects.\\

\\

By Eric CiarlaAugust 26, 2024](https://www.firecrawl.dev/blog/launch-week-i-day-1-introducing-teams)

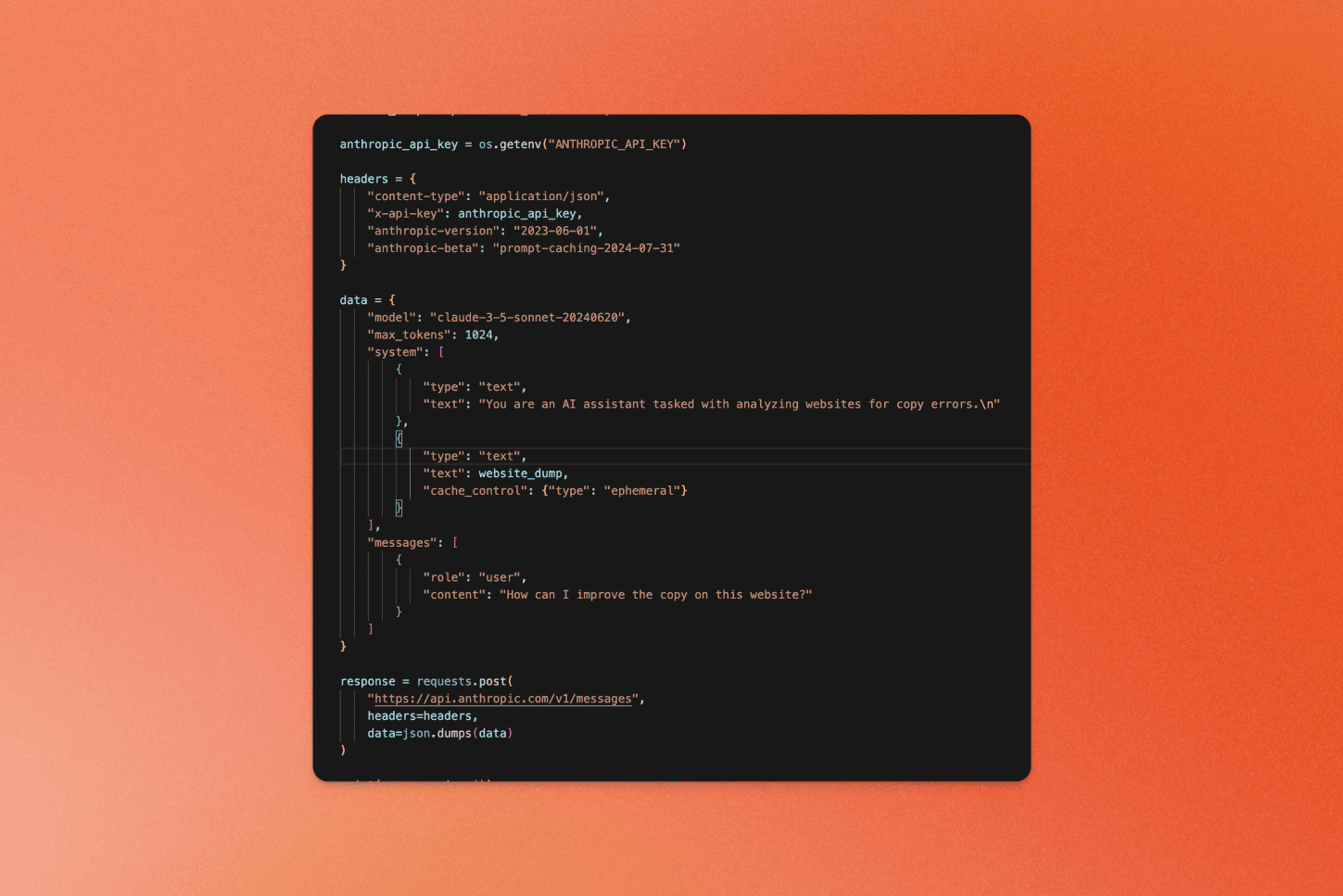

[\\

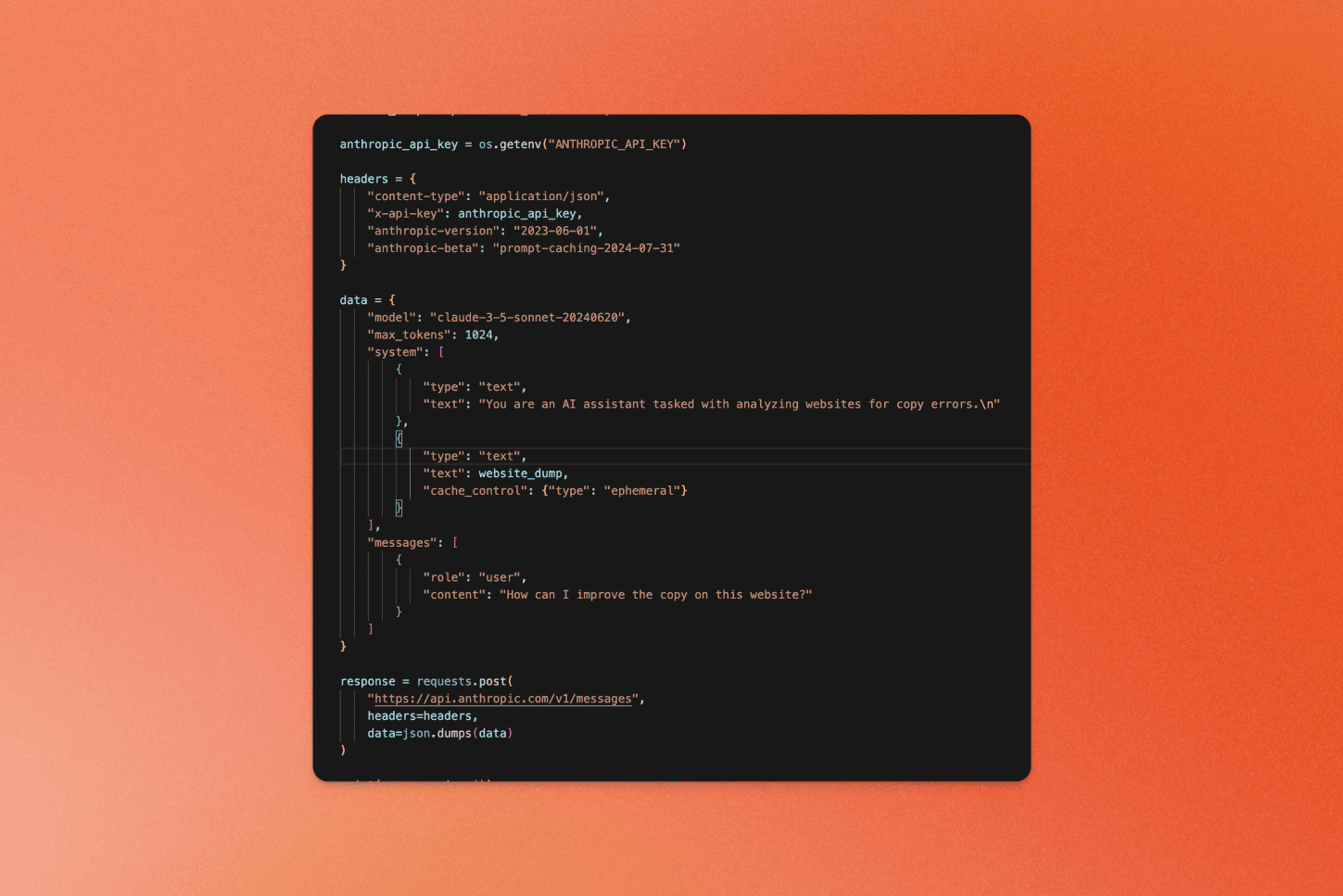

**How to Use Prompt Caching and Cache Control with Anthropic Models** \\

Learn how to cache large context prompts with Anthropic Models like Opus, Sonnet, and Haiku for faster and cheaper chats that analyze website data.\\

\\

By Eric CiarlaAug 14, 2024](https://www.firecrawl.dev/blog/using-prompt-caching-with-anthropic)

[\\

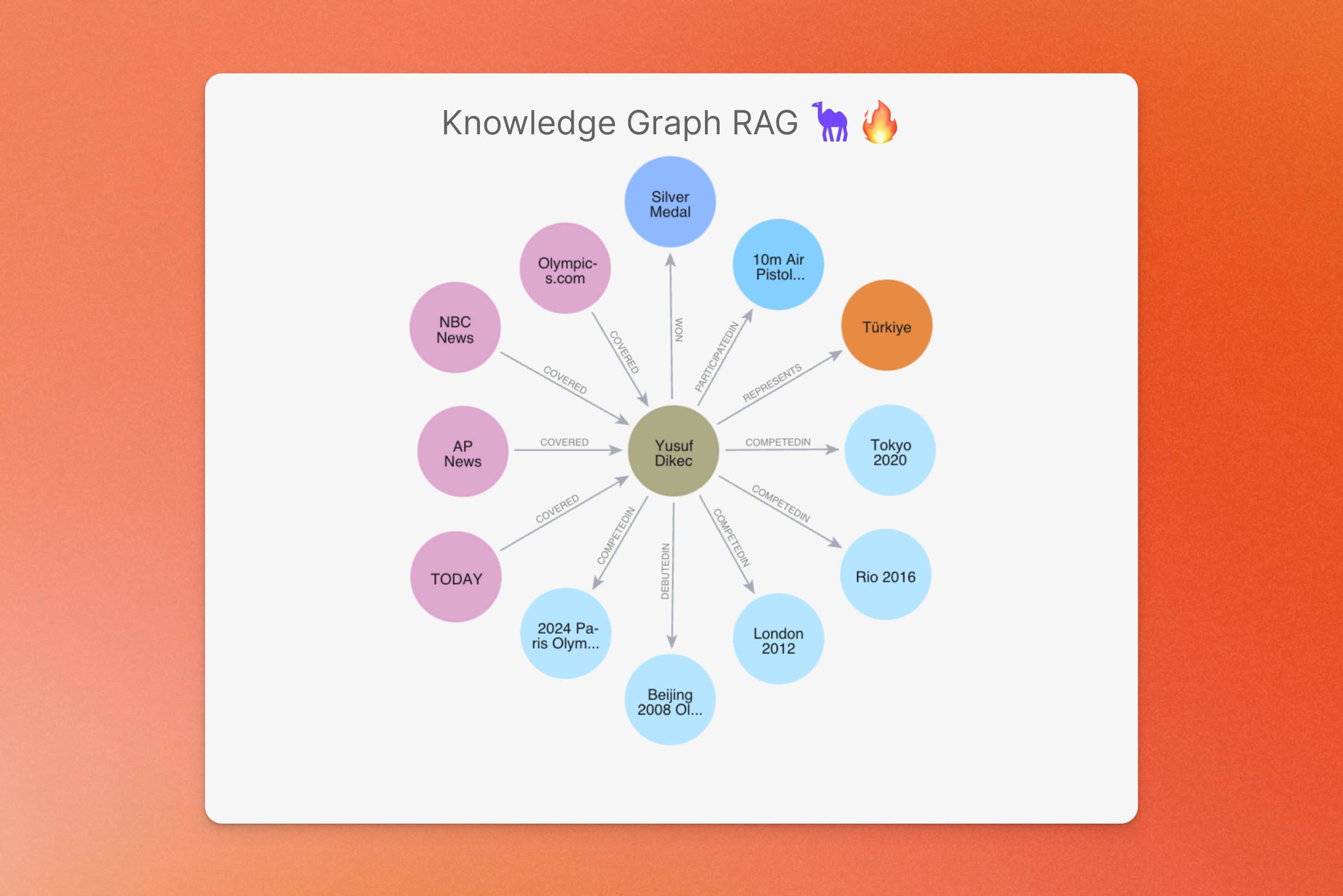

**Building Knowledge Graphs from Web Data using CAMEL-AI and Firecrawl** \\

A guide on constructing knowledge graphs from web pages using CAMEL-AI and Firecrawl\\

\\

By Wendong FanAug 13, 2024](https://www.firecrawl.dev/blog/building-knowledge-graphs-from-web-data-camelai-firecrawl)

[\\

**How Gamma Supercharges Onboarding with Firecrawl** \\

See how Gamma uses Firecrawl to instantly generate websites and presentations to 20+ million users.\\

\\

By Jon NoronhaAug 8, 2024](https://www.firecrawl.dev/blog/how-gamma-supercharges-onboarding-with-firecrawl)

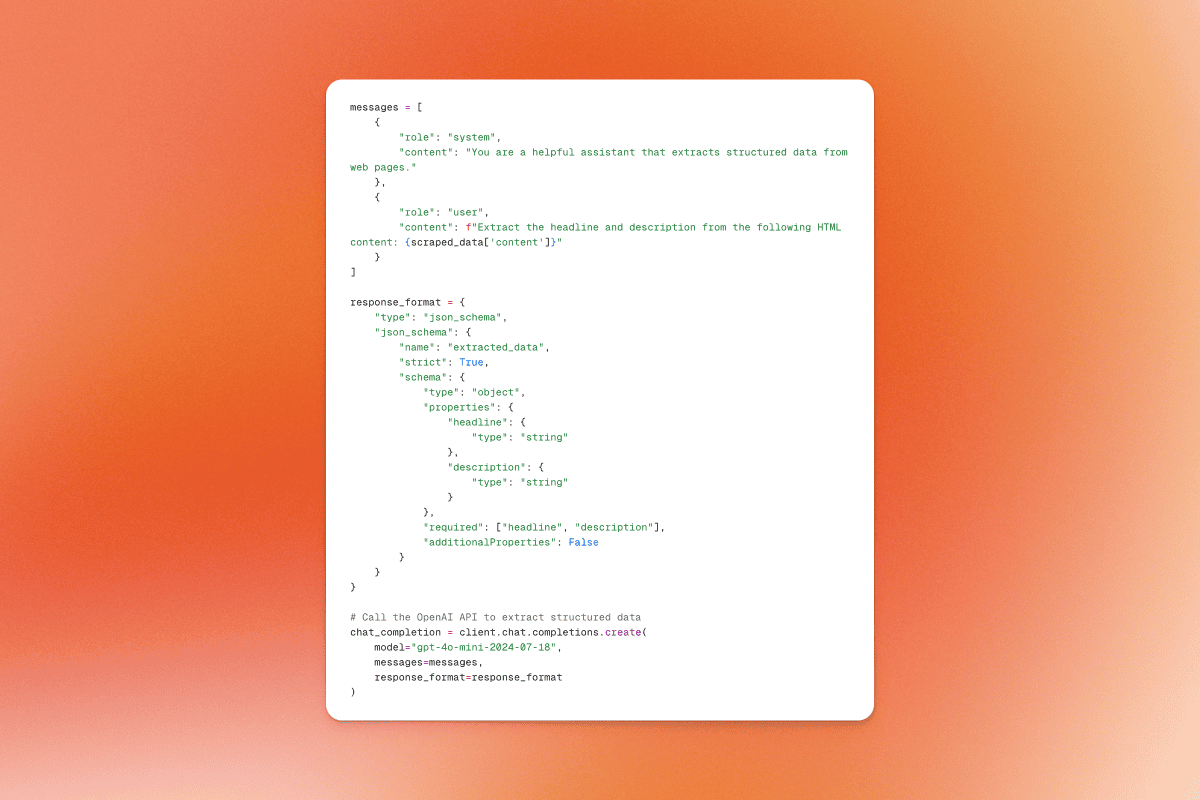

[\\

**How to Use OpenAI's Structured Outputs and JSON Strict Mode** \\

A guide for getting structured data from the latest OpenAI models.\\

\\

By Eric CiarlaAug 7, 2024](https://www.firecrawl.dev/blog/using-structured-output-and-json-strict-mode-openai)

[\\

**Introducing Fire Engine for Firecrawl** \\

The most scalable, reliable, and fast way to get web data for Firecrawl.\\

\\

By Eric CiarlaAug 6, 2024](https://www.firecrawl.dev/blog/introducing-fire-engine-for-firecrawl)

[\\

**Firecrawl July 2024 Updates** \\

Discover the latest features, integrations, and improvements in Firecrawl for July 2024.\\

\\

By Eric CiarlaJuly 31, 2024](https://www.firecrawl.dev/blog/firecrawl-july-2024-updates)

[\\

**Firecrawl June 2024 Updates** \\

Discover the latest features, integrations, and improvements in Firecrawl for June 2024.\\

\\

By Nicolas CamaraJune 30, 2024](https://www.firecrawl.dev/blog/firecrawl-june-2024-updates)

[\\

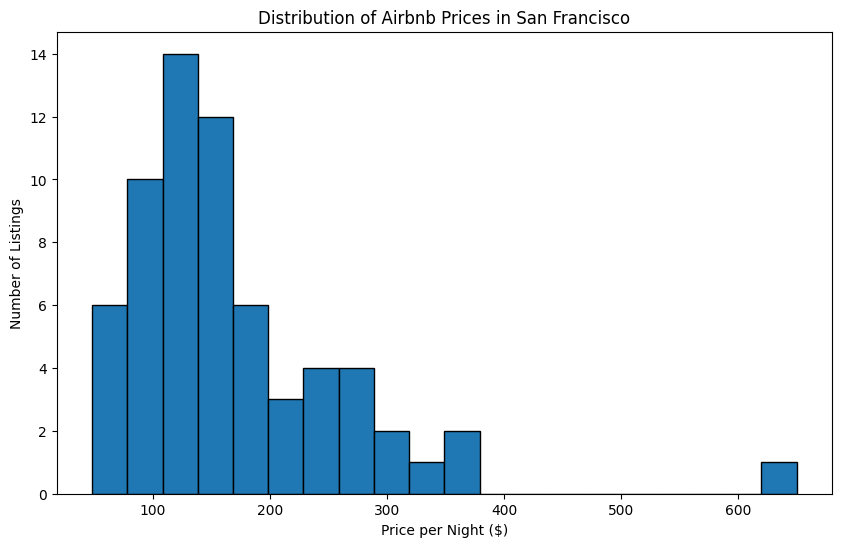

**Scrape and Analyze Airbnb Data with Firecrawl and E2B** \\

Learn how to scrape and analyze Airbnb data using Firecrawl and E2B in a few lines of code.\\

\\

By Nicolas CamaraMay 23, 2024](https://www.firecrawl.dev/blog/scrape-analyze-airbnb-data-with-e2b)

[\\

**Build a 'Chat with website' using Groq Llama 3** \\

Learn how to use Firecrawl, Groq Llama 3, and Langchain to build a 'Chat with your website' bot.\\

\\

By Nicolas CamaraMay 22, 2024](https://www.firecrawl.dev/blog/chat-with-website)

[\\

**Using LLM Extraction for Customer Insights** \\

Using LLM Extraction for Insights and Lead Generation using Make and Firecrawl.\\

\\

By Caleb PefferMay 21, 2024](https://www.firecrawl.dev/blog/lead-gen-business-insights-make-firecrawl)

[\\

**Extract website data using LLMs** \\

Learn how to use Firecrawl and Groq to extract structured data from a web page in a few lines of code.\\

\\

By Nicolas CamaraMay 20, 2024](https://www.firecrawl.dev/blog/data-extraction-using-llms)

[\\

**Build an agent that checks for website contradictions** \\

Using Firecrawl and Claude to scrape your website's data and look for contradictions.\\

\\

By Eric CiarlaMay 19, 2024](https://www.firecrawl.dev/blog/contradiction-agent)

[🔥](https://www.firecrawl.dev/)

## Ready to _Build?_

Start scraping web data for your AI apps today.

No credit card needed.

Get Started

## Firecrawl Changelog Updates

Introducing /extract - Get web data with a prompt [Try now](https://www.firecrawl.dev/extract)

## ChangelogNew

- Feb 20, 2025

## Self Host Overhaul - v1.5.0

### Self-Host Fixes

- **Reworked Guide:** The `SELF_HOST.md` and `docker-compose.yaml` have been updated for clarity and compatibility

- **Kubernetes Imporvements:** Updated self-hosted Kubernetes deployment examples for compatibility and consistency (#1177)

- **Self-Host Fixes:** Numerous fixes aimed at improving self-host performance and stability (#1207)

- **Proxy Support:** Added proxy support tailored for self-hosted environments (#1212)

- **Playwright Integration:** Added fixes and continuous integration for the Playwright microservice (#1210)

- **Search Endpoint Upgrade:** Added SearXNG support for the `/search` endpoint (#1193)

### Core Fixes & Enhancements

- **Crawl Status Fixes:** Fixed various race conditions in the crawl status endpoint (#1184)

- **Timeout Enforcement:** Added timeout for scrapeURL engines to prevent hanging requests (#1183)

- **Query Parameter Retention:** Map function now preserves query parameters in results (#1191)

- **Screenshot Action Order:** Ensured screenshots execute after specified actions (#1192)

- **PDF Scraping:** Improved handling for PDFs behind anti-bot measures (#1198)

- **Map/scrapeURL Abort Control:** Integrated AbortController to stop scraping when the request times out (#1205)

- **SDK Timeout Enforcement:** Enforced request timeouts in the SDK (#1204)

### New Features & Additions

- **Proxy & Stealth Options:** Introduced a proxy option and stealthProxy flag (#1196)

- **Deep Research (Alpha):** Launched an alpha implementation of deep research (#1202)

- **LLM Text Generator:** Added a new endpoint for llms.txt generation (#1201)

### Docker & Containerization

- **Production Ready Docker Image:** A streamlined, production ready Docker image is now available to simplify self-hosted deployments.

- Feb 14, 2025

## v1.4.4

### Features & Enhancements

- Scrape API: Added action & wait time validation ( [#1146](https://github.com/mendableai/firecrawl/pull/1146))

- Extraction Improvements:

- Added detection of PDF/image sub-links & extracted text via Gemini ( [#1173](https://github.com/mendableai/firecrawl/pull/1173))

- Multi-entity prompt enhancements for extraction ( [#1181](https://github.com/mendableai/firecrawl/pull/1181))

- Show sources out of \_\_experimental in extraction ( [#1180](https://github.com/mendableai/firecrawl/pull/1180))

- Environment Setup: Added Serper & Search API env vars to docker-compose ( [#1147](https://github.com/mendableai/firecrawl/pull/1147))

- Credit System Update: Now displays “tokens” instead of “credits” when out of tokens ( [#1178](https://github.com/mendableai/firecrawl/pull/1178))

### Examples

- Gemini 2.0 Crawler: Implemented new crawling example ( [#1161](https://github.com/mendableai/firecrawl/pull/1161))

- Gemini TrendFinder: [https://github.com/mendableai/gemini-trendfinder](https://github.com/mendableai/gemini-trendfinder)

- Normal Search to Open Deep Research: [https://github.com/nickscamara/open-deep-research](https://github.com/nickscamara/open-deep-research)

### Fixes

- HTML Transformer: Updated free\_string function parameter type ( [#1163](https://github.com/mendableai/firecrawl/pull/1163))

- Gemini Crawler: Updated library & improved PDF link extraction ( [#1175](https://github.com/mendableai/firecrawl/pull/1175))

- Crawl Queue Worker: Only reports successful page count in num\_docs ( [#1179](https://github.com/mendableai/firecrawl/pull/1179))

- Scraping & URLs:

- Fixed relative URL conversion ( [#584](https://github.com/mendableai/firecrawl/pull/584))

- Enforced scrape rate limit in batch scraping ( [#1182](https://github.com/mendableai/firecrawl/pull/1182))

- Feb 7, 2025

## Examples Week - v1.4.3

### Summary of changes

- Open Deep Research: An open source version of OpenAI Deep Research. See here: [https://github.com/nickscamara/open-deep-research](https://github.com/nickscamara/open-deep-research)

- R1 Web Extractor Feature: New extraction capability added.

- O3-Mini Web Crawler: Introduces a lightweight crawler for specific use cases.

- Updated Model Parameters: Enhancements to o3-mini\_company\_researcher.

- URL Deduplication: Fixes handling of URLs ending with /, index.html, index.php, etc.

- Improved URL Blocking: Uses tldts parsing for better blocklist management.

- Valid JSON via rawHtml in Scrape: Ensures valid JSON extraction.

- Product Reviews Summarizer: Implements summarization using o3-mini.

- Scrape Options for Extract: Adds more configuration options for extracting data.

- O3-Mini Job Resource Extractor: Extracts job-related resources using o3-mini.

- Cached Scrapes for Extract evals: Improves performance by using cached data for extractions evals.

- Jan 31, 2025

## Extract & API Improvements - v1.4.2

We’re excited to announce several new features and improvements:

### New Features

- Added web search capabilities to the extract endpoint via the `enableWebSearch` parameter

- Introduced source tracking with `__experimental_showSources` parameter

- Added configurable webhook events for crawl and batch operations

- New `timeout` parameter for map endpoint

- Optional ad blocking with `blockAds` parameter (enabled by default)

### Infrastructure & UI

- Enhanced proxy selection and infrastructure reliability

- Added domain checker tool to cloud platform

- Redesigned LLMs.txt generator interface for better usability

- Jan 24, 2025

## Extract Improvements - v1.4.1

We’ve significantly enhanced our data extraction capabilities with several key updates:

- Extract now returns a lot more data

- Improved infrastructure reliability

- Migrated from Cheerio to a high-performance Rust-based parser for faster and more memory-efficient parsing

- Enhanced crawl cancellation functionality for better control over running jobs

- Jan 7, 2025

## /extract changes

We have updated the `/extract` endpoint to now be asynchronous. When you make a request to `/extract`, it will return an ID that you can use to check the status of your extract job. If you are using our SDKs, there are no changes required to your code, but please make sure to update the SDKs to the latest versions as soon as possible.

For those using the API directly, we have made it backwards compatible. However, you have 10 days to update your implementation to the new asynchronous model.

For more details about the parameters, refer to the docs sent to you.

- Jan 3, 2025

## v1.2.0

### Introducing /v1/search

The search endpoint combines web search with Firecrawl’s scraping capabilities to return full page content for any query.

Include `scrapeOptions` with `formats: ["markdown"]` to get complete markdown content for each search result otherwise it defaults to getting SERP results (url, title, description).

More info here: [v1/search docs](https://docs.firecrawl.dev/api-reference/endpoint/search)

### Fixes and improvements

- Fixed LLM not following the schema in the python SDK for `/extract`

- Fixed schema json not being able to be sent to the `/extract` endpoint through the Node SDK

- Prompt is now optional for the `/extract` endpoint

- Our fork of [MinerU](https://github.com/mendableai/mineru-api) is now default for PDF Parsing

- Dec 27, 2024

## v1.1.0

### Changelog Highlights

#### Feature Enhancements

- **New Features**:

- Geolocation, mobile scraping, 4x faster parsing, better webhooks,

- Credit packs, auto-recharges and batch scraping support.

- Iframe support and query parameter differentiation for URLs.

- Similar URL deduplication.

- Enhanced map ranking and sitemap fetching.

#### Performance Improvements

- Faster crawl status filtering and improved map ranking algorithm.

- Optimized Kubernetes setup and simplified build processes.

- Sitemap discoverability and performance improved

#### Bug Fixes

- Resolved issues:

- Badly formatted JSON, scrolling actions, and encoding errors.

- Crawl limits, relative URLs, and missing error handlers.

- Fixed self-hosted crawling inconsistencies and schema errors.

#### SDK Updates

- Added dynamic WebSocket imports with fallback support.

- Optional API keys for self-hosted instances.

- Improved error handling across SDKs.

#### Documentation Updates

- Improved API docs and examples.

- Updated self-hosting URLs and added Kubernetes optimizations.

- Added articles: mastering `/scrape` and `/crawl`.

#### Miscellaneous

- Added new Firecrawl examples

- Enhanced metadata handling for webhooks and improved sitemap fetching.

- Updated blocklist and streamlined error messages.

- Oct 28, 2024

## Introducing Batch Scrape

You can now scrape multiple URLs simultaneously with our new Batch Scrape endpoint.

- Read more about the Batch Scrape endpoint [here](https://www.firecrawl.dev/blog/launch-week-ii-day-1-introducing-batch-scrape-endpoint).

- Python SDK (1.4.x) and Node SDK (1.7.x) updated with batch scrape support.

- Oct 10, 2024

## Cancel Crawl in the SDKs, More Examples, Improved Speed

- Added crawl cancellation support for the Python SDK (1.3.x) and Node SDK (1.6.x)

- OpenAI Voice + Firecrawl example added to the repo

- CRM lead enrichment example added to the repo

- Improved our Docker images

- Limit and timeout fixes for the self hosted playwright scraper

- Improved speed of all scrapes

- Sep 27, 2024

## Fixes + Improvements (no version bump)

- Fixed 500 errors that would happen often in some crawled websites and when servers were at capacity

- Fixed an issue where v1 crawl status wouldn’t properly return pages over 10mb

- Fixed an issue where `screenshot` would return undefined

- Push improvements that reduce speed times when a scraper fails

- Sep 24, 2024

## Introducing Actions

Interact with pages before extracting data, unlocking more data from every site!

Firecrawl now allows you to perform various actions on a web page before scraping its content. This is particularly useful for interacting with dynamic content, navigating through pages, or accessing content that requires user interaction.

- Version 1.5.x of the Node SDK now supports type-safe Actions.

- Actions are now available in the REST API and Python SDK (no version bumps required!).

Here is a python example of how to use actions to navigate to google.com, search for Firecrawl, click on the first result, and take a screenshot.

```python

from firecrawl import FirecrawlApp

app = FirecrawlApp(api_key="fc-YOUR_API_KEY")

# Scrape a website:

scrape_result = app.scrape_url('firecrawl.dev',

params={

'formats': ['markdown', 'html'],

'actions': [\

{"type": "wait", "milliseconds": 2000},\

{"type": "click", "selector": "textarea[title=\"Search\"]"},\

{"type": "wait", "milliseconds": 2000},\

{"type": "write", "text": "firecrawl"},\

{"type": "wait", "milliseconds": 2000},\

{"type": "press", "key": "ENTER"},\

{"type": "wait", "milliseconds": 3000},\

{"type": "click", "selector": "h3"},\

{"type": "wait", "milliseconds": 3000},\

{"type": "screenshot"}\

]

}

)

print(scrape_result)

```

For more examples, check out our [API Reference](https://docs.firecrawl.dev/api-reference/endpoint/scrape).

- Sep 23, 2024

## Mid-September Updates

### Typesafe LLM Extract

- E2E Type Safety for LLM Extract in Node SDK version 1.5.x.

- 10x cheaper in the cloud version. From 50 to 5 credits per extract.

- Improved speed and reliability.

### Rust SDK v1.0.0

- Rust SDK v1 is finally here! Check it out [here](https://crates.io/crates/firecrawl/1.0.0).

### Map Improved Limits

- Map smart results limits increased from 100 to 1000.

### Faster scrape

- Scrape speed improved by 200ms-600ms depending on the website.

### Launching changelog

- For now on, for every new release, we will be creating a changelog entry here.

### Improvements

- Lots of improvements pushed to the infra and API. For all Mid-September changes, refer to the commits [here](https://github.com/mendableai/firecrawl/commits/main/).

- Sep 8, 2024

## September 8, 2024

### Patch Notes (No version bump)

- Fixed an issue where some of the custom header params were not properly being set in v1 API. You can now pass headers to your requests just fine.

- Aug 29, 2024

## Firecrawl V1 is here! With that we introduce a more reliable and developer friendly API.

### Here is what’s new:

- Output Formats for /scrape: Choose what formats you want your output in.

- New /map endpoint: Get most of the URLs of a webpage.

- Developer friendly API for /crawl/id status.

- 2x Rate Limits for all plans.

- Go SDK and Rust SDK.

- Teams support.

- API Key Management in the dashboard.

- onlyMainContent is now default to true.

- /crawl webhooks and websocket support.

Learn more about it [here](https://docs.firecrawl.dev/v1).

Start using v1 right away at [https://firecrawl.dev](https://firecrawl.dev/)

## Web Data Extraction Tool

Introducing **/extract** \- Now in open beta

# Get web data with a prompt

Turn entire websites into structured data with AI

From firecrawl.dev, get the pricing.

Try for Free

From **firecrawl.dev** find the company name, mission and whether it's open source.

{

"company\_name":"Firecrawl",

"company\_mission":"...",

"is\_open\_source":true,

}

A milestone in scraping

## Web scraping was hard – now effortless

Scraping the internet had everything to do with broken scripts, bad data, wasted time. With Extract, you can get any data in any format effortlessly – in a single API call.

### No more manual scraping

Extract structured data from any website using natural language prompts.

page = urlopen(url)

html = page.read().decode("utf-8")

start\_index = html.find("") + len("<title>")

end\_index = html.find("")

title = html\[start\_index:end\_index\]

>>\> title

PromptBuild a B2B lead list from these company websites.

### Stop rewriting broken scripts

Say goodbye to fragile scrapers that break with every site update. Our AI understands content semantically and adapts automatically.

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

page = urlopen(url)

html = page.read().decode("utf-8")

start\_idx = html.find("") + len("<title>")

end\_idx = html.find("")

title = html\[start\_idx:end\_idx\]

>>> title

await firecrawl.extract(\[\

\

'https://firecrawl.dev/',\

\

\], {

prompt: "Extract mission.",

schema: z.object({

mission: z.string()

})

});

### Extract entire websites in a single API call

Get the data you need with a simple API call, whether it's one page or thousands.

Try adding a wildcard /\* to the URL.It will extract information across the site.It will find and extract information across the entire website.\> app.extract(\['https://firecrawl.dev/\*'\])

### Forget fighting context windows

No context window limits. Extract thousands of results effortlessly while we handle the complex LLM work.

Extracting

Video Demo

## Use Extract for everything

From lead enrichment to AI onboarding to KYB – and more. Watch a demo of how Extract can help you get more out of your data.

Enrichment Integrations

## Enrich data anywhere you work

Integrate Extract with your favorite tools and get enriched data where you need it.

Datasets

## Build datasets spread across websites

Gather datasets from any website and use them for any enrichment task.

| | Name | Contact | Email |

| --- | --- | --- | --- |

| 1 | Sarah Johnson | +1 (555) 123-4567 | sarah.j@example.com |

| 2 | Michael Chen | +1 (555) 234-5678 | m.chen@example.com |

| 3 | Emily Williams | +1 (555) 345-6789 | e.williams@example.com |

| 4 | James Wilson | +1 (555) 456-7890 | j.wilson@example.com |

[Integrate with Zapier](https://zapier.com/apps/firecrawl/integrations)

Simple, transparent pricing

## Pricing that scales with your business

Monthly

Yearly

Save 10%\+ Get All Credits Upfront

### Free

$0

One-time

Tokens / year500,000

Rate limit10 per min

SupportCommunity

Sign Up

### Starter

$89/mo

$1,188/yr$1,068/yr(Billed annually)

Tokens / year18 million

Rate limit20 per min

SupportEmail

Subscribe

All credits granted upfront

Most Popular 🔥

### Explorer

$359/mo

$4,788/yr$4,308/yr(Billed annually)

Tokens / year84 million

Rate limit100 per min

SupportSlack

Subscribe

All credits granted upfront

Best Value

### Pro

$719/mo

$9,588/yr$8,628/yr(Billed annually)

Tokens / year192 million

Rate limit1000 per min

SupportSlack + Priority

Subscribe

All credits granted upfront

### Enterprise

Custom

Billed annually

Tokens / yearNo limits

Rate limitCustom

SupportCustom (SLA, dedicated engineer)

Talk to us

Tokens / year

500,000

18 million

84 million

192 million

No limits

Rate limit

10 per min

20 per min

100 per min

1000 per min

Custom

Support

Community

Email

Slack

Slack + Priority

Custom (SLA, dedicated engineer)

All requests have a base cost of 300 tokens + [output tokens - View token calculator](https://www.firecrawl.dev/pricing?extract-pricing=true#token-calculator)

## Get started for free

500K free tokens – no credit card required!

From firecrawl.dev, get the pricing.

Try for Free

FAQ

## Frequently Asked

Everything you need to know about Extract's powerful web scraping capabilities

### How much does Extract cost?

### What is a token and how many do I need?

### How does Extract handle JavaScript-heavy websites?

### What programming languages and frameworks are supported?

### How many pages can I process in a single API call?

### How can I integrate Extract with my existing workflow?

### Does Extract work with password-protected pages?

### Can I schedule regular extractions for monitoring changes?

### What happens if a website's structure changes?

### How fresh is the extracted data?

### Can Extract handle multiple languages and international websites?

### Can I use Extract for competitor monitoring?

### How does Extract handle dynamic content like prices or inventory?

### Is Extract suitable for real-time data needs?

/extract returns a JSON in your desired format

## Web Data Playground

Introducing /extract - Get web data with a prompt [Try now](https://www.firecrawl.dev/extract)

# Preview

Take a look at the API response (Preview limited to 5 pages)

Single URL(/scrape)

Crawl(/crawl)

Map(/map)

Extract(/extract)Beta

Scrape

URL

Get CodeRun

### Options

Start exploring with our playground!

## Sign In Page

Introducing /extract - Get web data with a prompt [Try now](https://www.firecrawl.dev/extract)

🔥

### Sign In

EmailPassword

Sign in

[Forgot your password?](https://www.firecrawl.dev/signin/forgot_password)

[Sign in via magic link](https://www.firecrawl.dev/signin/email_signin)

[Don't have an account? Sign up](https://www.firecrawl.dev/signin/signup)

OAuth sign-in

GitHubGoogle

## Privacy Policy Overview

Introducing /extract - Get web data with a prompt [Try now](https://www.firecrawl.dev/extract)

# PRIVACY POLICY

Date of last revision: December 26, 2024

1. **Who We Are?**

The name of our company is SideGuide Technologies, Inc. d/b/a Firecrawl (“Firecrawl”), and we’re registered as a corporation in Delaware. Firecrawl is a tool for collecting and enhancing LLM-ready data.

2. **What Is This?**

This is a privacy policy and the reason we have it is to tell you how we collect, manage, store, and use your information.

Just so we’re clear, whenever we say \*\*\*\*“we,” “us,” “our,” or “ourselves”, we’re talking about Firecrawl and whenever we say “you” or “your,” we’re talking about the person or business who has decided to use our services, or even potentially a third party. When we talk about our services, we mean any of our platforms, websites, or apps; or any features, products, graphics, text, images, photos, audio, video, or similar things we use.

3. **Why Are We Showing You This?**

We value and respect your privacy. That is why we strive to only use your information when we think that doing so improves your experience in using our services. If you feel that we could improve in this mission in any way, or if you have a complaint or concern, please let us know by sending us your feedback to the following email address: help@firecrawl.com.

Our goal is to be as transparent and open about our use of information and data as possible, so that our users can benefit from both the way they provide information and how we use it.

This privacy policy should be read along with our Terms of Use, posted at [https://www.firecrawl.dev/terms-of-use](https://www.firecrawl.dev/terms-of-use). That’s another big part of what we do, so please review it and follow its process for questions or concerns about what it says there.

4. **Information Collection and Use**

In using the services, you may be asked to provide us a variety of information– some of which can personally identify you and some that cannot. We may collect, store, and share this personal information with third parties, but only in the ways we explain in this policy. Here’s how we do it and why we do it:

1. **Personally Identifiable Information: How we collect it.**

Personally identifiable information (also, “PII”) is data that can be used to contact or identify a single person. Examples include your name, your phone number, your email, your address, and your IP address. We collect the following categories of information

- name

- email address

- payment information, including credit card information

- company Information

- IP addresses

- browser information

- timestamps

- page views,

- load times

- referrers

- device type and browser information

- information that is collected on behalf of our clients

2. **Personally Identifiable Information: How we use it.**

We use your personal information in the following ways:

- To provide you our services;

- Caching and indexing;

- To contact you via email to inform you of service issues, new features, updates, offers, and billing issues;

- To improve our website performance;

- To tailor our services to your needs and the way you use our services;

- To process payments;

- To determine how to improve our product;

- To market our services to interested customers;

- We use cookies to track unauthenticated user activity on our site;

- For advertising purposes.

3. **Who We Share Your Information With and Why**

We only share your information with third parties in the following ways and for the following purposes:

- **Stripe, Inc.** We share your email, credit cardholder name, and card number and related information to run the initial and subsequent payments for our services. This information is sent directly to Stripe through a plugin on our website. Your credit card information is stored with Stripe for subsequently billing; we do not retain your credit card information internally. Their privacy policy is here: https://stripe.com/privacy.

- **Posthog.** We share your data with Posthog to better understand user interactions (e.g., clicks, page views, events); device information (IP address, browser type); location data (based on IP). Their privacy policy is provided here: https://posthog.com/privacy

- **Crisp Chatbot.** We share names, emails, and phone numbers if provided by users; messages sent in the chat widget; IP addresses, browser information, and timestamp. We do this to communicate with our customers. Their privacy policy is provided here. https://crisp.chat/en/privacy/

- **Vercel Analytics.** We share IP addresses (used to determine visitor location); information related to referrers; Device type and browser information. This is for product and marketing analytics. Their privacy policy is provided here: https://vercel.com/legal/privacy-policy

- We will share all collected information to the extent necessary and as required by law or to comply with any legal obligations, including defense of our company.

4. **Your Choices in What Information You Share**

For users who do not register for our services or a business account, we will not collect that user’s personally identifying information—unless that personally identifiable information is information of a customer of one of our business clients, which is shares by that business through permission obtained by the business directly from that customer.

5. **Non-Personally Identifiable Information**

Non-personally identifiable information includes general details about your device and connection (including the type of computer/mobile device, operating system, web-browser or other software, language preference, and hardware); general information from the app store or referring website; the date and time of visit or use; and, internet content provider information. We may collect this type of information.

6. **How Long We Keep Your Information**

We will retain your personally identifiable information until you request in writing that we delete or otherwise remove your personally identifiable information as part of our normal business processes. We may develop or amend a policy for deleting PII on a recurring timeline at some point in the future, but we do not currently have such a policy.

7. **Where We Keep and Transfer Your Information**

Our business is operated in the United States and, as far as we are aware, third parties with whom we share your information are as well. Our servers are located in the United States and this is where your data and information will be stored. Due to the nature of internet communications, however, such data could pass through other countries as part of the transmission process; this is also true for our clients outside the United States.

Please be aware if you are a citizen of another country, and if you live in Europe in particular, that your information will be transferred out of your home country and into the United States. The United States might not have the same level of data protection as your country provides.

Our processing of personal data from individuals is not targeted to reveal race; ethnicity; political, religious, or philosophical beliefs; trade union memberships; health; sexual activity; or, sexual orientation.

If you would like more information about this, please email us at help@firecrawl.com.

8. **EU Rights to Information**

According to the laws of the European Union (except for limited exceptions, where applicable), anyone in those countries has the right to:

- Be informed about their data and its processing;

- Have access to their data;

- Correct any errors in their data;

- Erase data from our records;

- Restrict processing and use of data;

- Data portability;

- Object to the use of their data, including for the purpose of automated profiling and direct marketing;

- Make decisions about automated decision making and profiling

We respect each of these rights for all of our users, regardless of citizenship. If you have any questions or concerns about any of these rights, or if you would like to assert any of these rights at any time, please contact help@firecrawl.com.

9. **California Residents**

The California Consumer Privacy Act (“CCPA”) provides California residents specific rights to restrict, access, and delete their collected information. All requests under this section should be provide to help@firecrawl.com. Subject to the requirements and limitations under the CCPA, these rights include:

- Upon your written request, up to 2 times during a 12 month period, we will provide you a summary of the personal information we have for you for your review.

- Upon your written request, and absent a legal need under to retain such information, we will delete your personal information we have collected.

We may be required to make further inquiry to verify the identity of the individual requesting any action above to confirm that person’s identity prior to processing that request.

5. **Protecting Your Information**

1. **Keeping it Safe**

We make reasonable and commercially feasible efforts to keep your information safe. Though we are a small business, we have appropriate security measures in place to prevent your information from being accidentally lost, used, or accessed in an unauthorized way. We restrict access to your personal information to those who need to know it, are subject to contractual confidentiality obligations in the case of internal personnel and third-party providers, and may be disciplined or terminated if they fail to meet these obligations in terms of contractors and internal personnel. Those processing your information are tasked to do so in an authorized manner and are subject to a duty of confidentiality. We encrypt data during transit via TLS and at rest if requested.

That said, no organization or business can guarantee 100% data protection. With that in mind, we also have procedures in place to deal with any suspected data security breach. We will inform both you and any applicable authorities of a suspected data security breach, as and when required by law.

2. **Third-Party Providers**

As articulated in this privacy policy, our services utilize third-party providers, as well as providing an integration with Stripe, Inc.

We do not control those policies and terms. You should visit those providers to acquaint yourself with their policies and terms, as previously provided in this policy document. If you have any issue or concern with those terms or policies, you should address those concerns with that third-party provider.

3. **Posting Content**

If you share content with another party, including messaging customers, that information may become public through your actions or the actions of the other party. Additionally, if you post any information or content on social media, you are making that information public. You can always ask us to delete information in our possession, but we cannot force anyone else to erase your information. F

4. **Do Not Track Signals/Cookies**

Some technologies, such as web browsers or mobile devices, provide a setting that when turned on sends a Do Not Track (DNT) signal when browsing a website or app. There is currently no common standard for responding to DNT Signals or even in the DNT signal itself. We recognize and respect DNT signals.

5. **Minors’ Data**

We do not intentionally collect minors’ data.

If you are a parent, and you believe we have accidentally collected your child’s data, you have the right to contact us and require that we: remove and delete the personal information provided. To do so, upon you contacting us, we must take reasonable steps to confirm you are the parent. You may contact us for such a request at any time at help@firecrawl.com.

6. **Compliance with Regulations**

We regularly review our privacy policy to do our best to ensure it complies with any applicable laws. Ours is a small business, but when we receive formal written complaints, we will contact the person who made the complaint to follow up as soon as practicable. We will work with relevant regulatory authorities to resolve any complaints or concerns that we cannot resolve with our users directly.

You also have the right to file a complaint with the supervisory authority of your home country, where available, relating to the processing of any personal data you feel may have violated local regulations.

6. **General Information**

1. **No Unsolicited Personal Information Requests**

We will never ask you for your personal information in an unsolicited letter, call, or email. If you contact us, we will only use your personal information if necessary to fulfill your request.

2. **Changes**

Our business and the services we provide are constantly evolving. We may change our privacy policy at any time. If we change our policy, we will notify you of any updates to our policy. We will not reduce your rights under this policy without your consent.

3. **Complaints**

We respect the rights of all of our users, regardless of location or citizenship. If you have any questions or concerns about any of these rights, or if you would like to assert any of these rights at any time, please contact help@firecrawl.com.

4. **Questions about Policy**

If you have any questions about this privacy policy, contact us at: help@firecrawl.com. By accessing any of our services or content, you are affirming that you understand and agree with the terms of our privacy policy.

## Firecrawl Launch Week II

Oct 28 to Nov 3

# Launch Week II

Follow us on your favorite platform to hear about every newFirecrawllaunch during the week!

[X](https://x.com/firecrawl_dev)

[LinkedIn](https://www.linkedin.com/company/firecrawl)

[GitHub](https://github.com/mendableai/firecrawl) [X\\

X](https://x.com/firecrawl_dev) [LinkedIn\\

LinkedIn](https://www.linkedin.com/company/firecrawl)

[\\

\\

November 4, 2024\\

\\

**Launch Week II Recap** \\

\\

Recapping all the exciting announcements from Firecrawl's second Launch Week.\\

\\

By Eric Ciarla](https://www.firecrawl.dev/blog/launch-week-ii-recap)

[\\

\\

November 3, 2024\\

\\

**Day 7: Introducing Faster Markdown Parsing** \\

\\