What is Gemma 3?

Just a couple of years ago, Google was being openly mocked for being too late to enter the Gen AI race. Even after joining the competition, its Gemini models were, to put it very mildly, not on par with OpenAI’s flagship LLMs. But those days seem to be long over as Google recently announced truly impressive models like Gemini 2.0 Flash Thinking and Gemma 3.

Gemma 3 deserves special attention as Google’s most ambitious open-weight model family to date. Released in March 2025, it represents a dramatic evolution from previous Gemma iterations, coming in four sizes (1B, 4B, 12B, and 27B parameters) with both pre-trained and instruction-tuned variants. While the 1B model focuses on text-only processing, the larger models bring true multimodality to the table, capable of handling both images and text with impressive sophistication - a first for the Gemma series.

What’s particularly striking about Gemma 3 is how it punches above its weight class. According to benchmark testing, the Gemma-3-27B-IT model outperforms Gemini 1.5-Pro across various benchmarks despite requiring dramatically fewer computational resources. The technical improvements are substantial: context windows up to 128K tokens (compared to Gemma 2’s 8K), support for 140+ languages in the larger models, and vision capabilities powered by Google’s SigLIP image encoder.

These impressive capabilities make Gemma 3 an ideal candidate for specialized fine-tuning. In this tutorial, we’ll explore how to use Firecrawl to build a custom dataset and then use Unsloth to efficiently fine-tune Gemma 3 for a specific real-world use case. By the end, you’ll have hands-on experience creating a specialized AI assistant using one of Google’s most capable open models, all without breaking the bank on computational resources.

How to Find a Dataset For Fine-tuning Gemma 3?

Finding the right dataset is crucial for successful fine-tuning of any language model, including Gemma 3. The dataset should align with your intended use case and contain high-quality examples that demonstrate the behavior you want the model to learn.

Here are key considerations when selecting or creating a dataset for fine-tuning:

- Relevance: The dataset should closely match your target use case

- Quality: Data should be clean, well-formatted, and free of errors

- Size: While bigger isn’t always better, aim for at least a few thousand examples

- Diversity: Include various examples covering different aspects of the desired behavior

- Format: Data should follow a consistent instruction-response format
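Some of these checks are easy to automate before training. The sketch below is a minimal example, assuming each record is a dict with `question` and `answer` keys (the field names used later in this tutorial). The `clean_records` helper and its `min_answer_chars` threshold are hypothetical and not part of any library.

```python
def clean_records(records, min_answer_chars=20):
    """Drop malformed, too-short, and duplicate question-answer records."""
    seen_questions = set()
    cleaned = []
    for record in records:
        question = str(record.get("question") or "").strip()
        answer = str(record.get("answer") or "").strip()
        # Format: both fields must be present and non-empty.
        if not question or not answer:
            continue
        # Quality: skip answers too short to teach anything.
        if len(answer) < min_answer_chars:
            continue
        # Diversity: skip exact duplicate questions (case-insensitive).
        key = question.lower()
        if key in seen_questions:
            continue
        seen_questions.add(key)
        cleaned.append({"question": question, "answer": answer})
    return cleaned


raw = [
    {"question": "What is LoRA?", "answer": "A parameter-efficient fine-tuning method."},
    {"question": "what is lora?", "answer": "A duplicate of the first question, differently cased."},
    {"question": "Empty answer?", "answer": ""},
    {"question": "Too short?", "answer": "Yes."},
]
cleaned = clean_records(raw)
print(len(cleaned), "of", len(raw), "records kept")
```

Checks like these only catch mechanical problems; relevance and factual quality still need human review.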

Public datasets

There are already hundreds of high-quality open-source datasets for fine-tuning multimodal models like Gemma 3. For example, searching for “multimodal” datasets on the HuggingFace Hub returns over 500 results.

Custom datasets

Despite the high availability of public datasets, there are many scenarios where you might need to create your own datasets to fine-tune models for specific tasks or domains. Here are approaches to build custom datasets:

- Manual creation:
  - Write your own instruction-response pairs
  - Most time-consuming but highest quality control
  - Ideal for specialized domains

- Semi-automated generation:
  - Use existing LLMs to generate initial examples
  - Human review and editing for quality
  - Faster than manual creation while maintaining quality

- Data collection:
  - Convert existing documentation or Q&A pairs
  - Extract from customer interactions
  - Transform domain-specific content into instruction format

Most of these approaches start from raw text collected from the web, which calls for a web scraping solution. This is where Firecrawl comes in.

Firecrawl provides web scraping and crawling capabilities through an API interface. Rather than using CSS selectors or XPath expressions which can break when websites change, it uses AI-powered extraction to identify and collect data using natural language descriptions. It can also convert entire websites into a single text document under the LLMs.txt standard, which is an ideal format to generate high-quality datasets for LLMs.

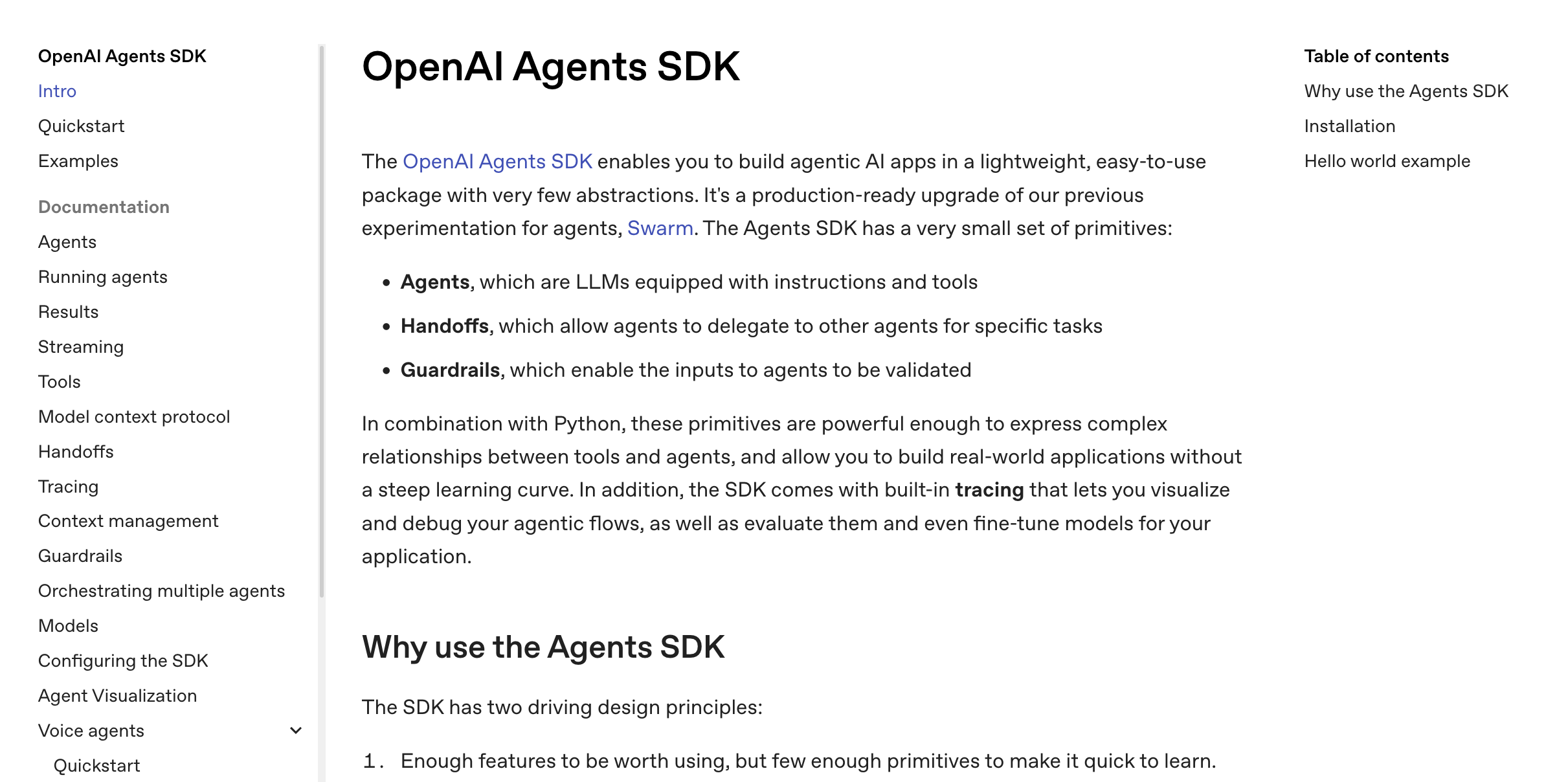

In this article, we’ll use that exact feature to convert the documentation of the new OpenAI Agents SDK framework into a custom instruction dataset and fine-tune Gemma 3 on it (for details, see the upcoming section).

The Tech Stack For Any Supervised LLM Fine-tuning Task

To successfully fine-tune powerful models like Gemma 3, we need a robust and efficient technical infrastructure. Now that we understand Gemma 3’s capabilities and why it’s an ideal candidate for specialized fine-tuning, let’s examine the underlying tools and libraries that make this process possible.

- unsloth: A library that optimizes LLM fine-tuning by reducing memory usage and training time. It provides QLoRA and other memory-efficient techniques out of the box. We will use it throughout the fine-tuning process.

- huggingface_hub: Enables seamless integration with Hugging Face’s model hub for downloading pre-trained models and uploading fine-tuned ones. Essential for model sharing and versioning.

- datasets: Provides efficient data loading and preprocessing utilities. Handles large datasets through memory mapping and streaming, with built-in support for common ML dataset formats.

- transformers: The core library for working with transformer models. Offers model architectures, tokenizers, and training utilities with a unified API across different model types.

- trl: (Transformer Reinforcement Learning) Specializes in fine-tuning language models through techniques like supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF).

- torch: The deep learning framework that powers the training process. Provides tensor operations, automatic differentiation, and GPU acceleration essential for model training.

- wandb: (Weights & Biases) A powerful experiment tracking tool that monitors training metrics, visualizes results, and helps compare different training runs. Invaluable for debugging and optimization.

- accelerate: A library that simplifies distributed training across multiple GPUs and machines. It handles device placement, mixed precision training, and gradient synchronization automatically.

- bitsandbytes: Provides 8-bit optimizers and quantization methods to reduce model memory usage. Essential for training large models on consumer hardware through techniques like 8-bit (LLM.int8()) and 4-bit (NF4 and FP4) quantization.

- peft: (Parameter-Efficient Fine-Tuning) Implements memory-efficient fine-tuning methods like LoRA, Prefix Tuning, and P-Tuning. Allows fine-tuning billion-parameter models on consumer GPUs by training only a small subset of parameters.

Let’s examine how these libraries work together to enable LLM fine-tuning:

The process starts with PyTorch (torch) as the foundation - it provides the core tensor operations and automatic differentiation needed for neural network training. On top of this, the transformers library implements the actual model architectures and training logic specific to transformer models like Gemma 3.

When loading a pre-trained model, huggingface_hub handles downloading the weights and configuration files from Hugging Face’s model repository. The model is then loaded into memory through transformers, while bitsandbytes applies quantization to reduce the memory footprint.

For efficient fine-tuning, peft implements parameter-efficient methods like LoRA that modify only a small subset of model weights. This works in conjunction with unsloth, which further optimizes memory usage and training speed through techniques like QLoRA.

The datasets library manages the training data, using memory mapping to efficiently handle large datasets without loading everything into RAM. It feeds batches of data to the training loop, where trl implements the supervised fine-tuning logic.

accelerate coordinates the training process across available hardware resources, managing device placement and mixed precision training. It works with PyTorch’s built-in distributed training capabilities to enable multi-GPU training when available.

Throughout training, wandb tracks and visualizes metrics like loss and accuracy in real-time, storing this data for later analysis and comparison between different training runs.

This integrated stack allows you to fine-tune billion-parameter models on consumer hardware by:

- Loading and quantizing the base model efficiently

- Applying parameter-efficient fine-tuning techniques

- Managing training data and hardware resources

- Tracking training progress and results

Each component handles a specific aspect of the fine-tuning process while working seamlessly with the others through standardized APIs and data formats. As you continue with the tutorial, you will see most of these libraries used to fine-tune our own Gemma 3 model.

Creating a Custom QA Dataset For Gemma

The fine-tuning process always starts with a dataset. If you can’t find a public dataset for your specific use case, this section shows how to build a custom one. The idea is to go from a data source such as a documentation website:

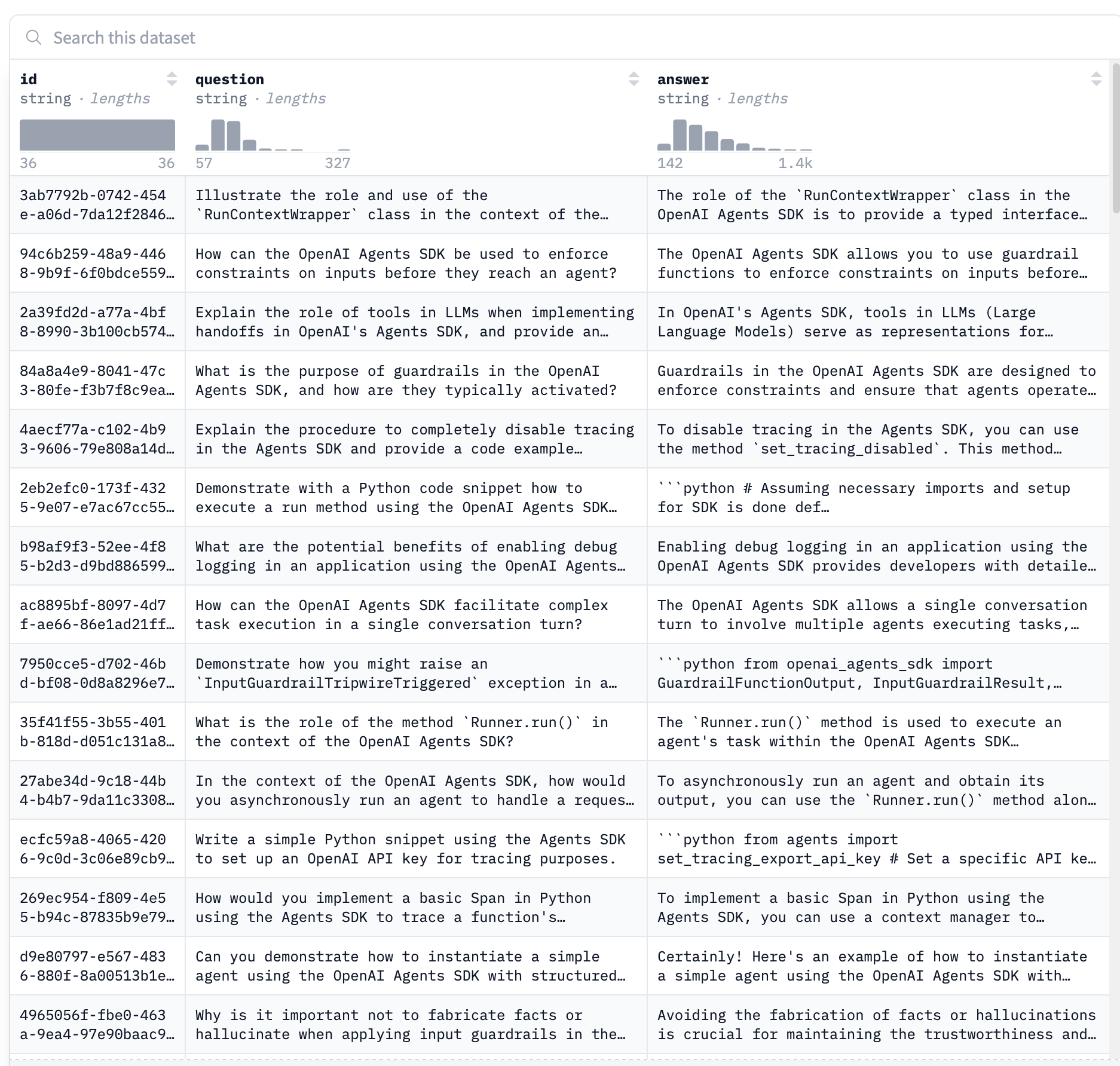

to a flat JSON file containing question-answer pairs that teach an LLM how to answer questions about our custom data source:

As you can see, we’ve already captured the Agents SDK’s documentation in the form of hundreds of question-answer pairs and uploaded it as a dataset to HuggingFace Hub. Each pair will teach Gemma a key piece of information about the documentation and the framework’s syntax.

You can find the full code for this process in our GitHub repository. Here, we only give a high-level overview of the steps we took.

Generating LLMs.txt

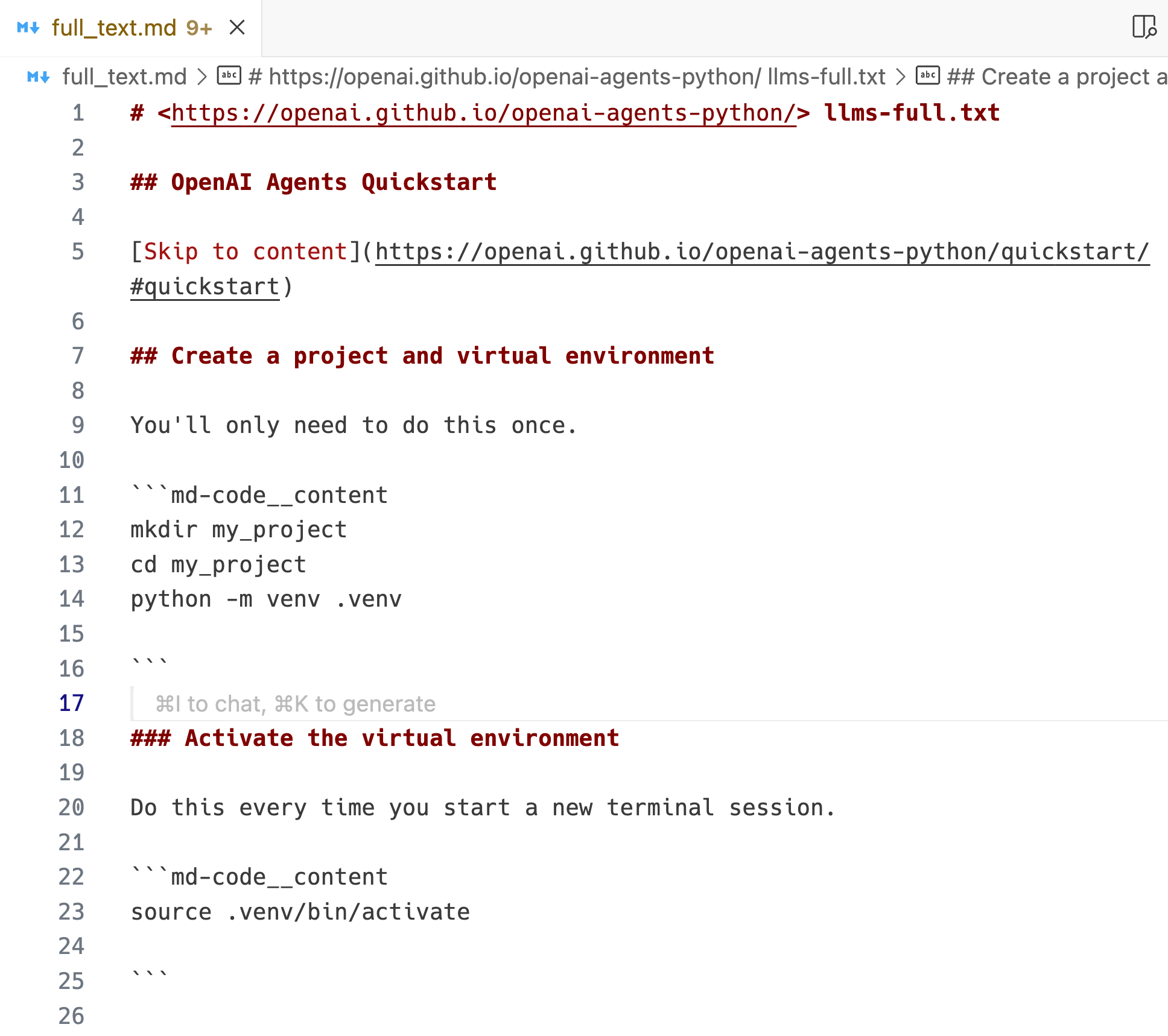

Our first script, scrape.py, takes a website URL as input:

# ... the rest of the script

if __name__ == "__main__":
    # Example usage
    url = "https://openai.github.io/openai-agents-python/"
    output_file = "full_text.md"
    result = scrape_website(url, output_file, max_urls=100)

and converts all its contents to a single markdown document that looks like this:

The core functionality of the script is the async_generate_llms_text function of the Firecrawl API:

from firecrawl import FirecrawlApp

# Initialize the client
firecrawl = FirecrawlApp(api_key="your_api_key")

params = {
    "maxUrls": 10,  # Maximum URLs to analyze
    "showFullText": True,  # Include full text in results
}

# Create async job
job = firecrawl.async_generate_llms_text(
    url="https://example.com",
    params=params,
)

if job['success']:
    job_id = job['id']

    # Check LLMs.txt generation status (pass the job_id variable, not a string literal)
    status = firecrawl.check_generate_llms_text_status(job_id)

    # Print current status
    print(f"Status: {status['status']}")

    if status['status'] == 'completed':
        print("LLMs.txt Content:", status['data']['llmstxt'])
        if 'llmsfulltxt' in status['data']:
            print("Full Text Content:", status['data']['llmsfulltxt'])
        print(f"Processed URLs: {len(status['data']['processedUrls'])}")

The above example shows how to use this method to scrape 10 pages of a sample URL. In our script, we set the maximum number of URLs to 100 to capture the entire documentation website.
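Because the job runs asynchronously, a single status check right after submission will usually not find it completed yet. The sketch below shows one way to poll until completion. `wait_for_job` is a hypothetical helper, not part of the Firecrawl SDK; only the `completed` status value comes from the example above, while the `processing` value in the fake responses and the optional `failed_states` argument are placeholders you would adapt to what the API actually returns.

```python
import time


def wait_for_job(check_status, job_id, poll_seconds=5.0, timeout_seconds=600.0,
                 failed_states=(), sleep=time.sleep, clock=time.monotonic):
    """Poll check_status(job_id) until the job reports 'completed' or time runs out."""
    deadline = clock() + timeout_seconds
    while True:
        status = check_status(job_id)
        state = status.get("status")
        if state == "completed":
            return status
        # failed_states is empty by default because the terminal error
        # statuses are not shown in the example above (assumption).
        if state in failed_states:
            raise RuntimeError(f"Job {job_id} ended with status {state!r}")
        if clock() >= deadline:
            raise TimeoutError(f"Job {job_id} did not finish in {timeout_seconds}s")
        sleep(poll_seconds)


# Fake status function standing in for the real API call, so the sketch runs offline.
_responses = iter([
    {"status": "processing"},
    {"status": "processing"},
    {"status": "completed", "data": {"llmstxt": "# Docs"}},
])
result = wait_for_job(lambda job_id: next(_responses), "demo-job", sleep=lambda s: None)
print(result["data"]["llmstxt"])
```

With the real client, you would pass `firecrawl.check_generate_llms_text_status` as `check_status` and the `job['id']` value as `job_id`.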

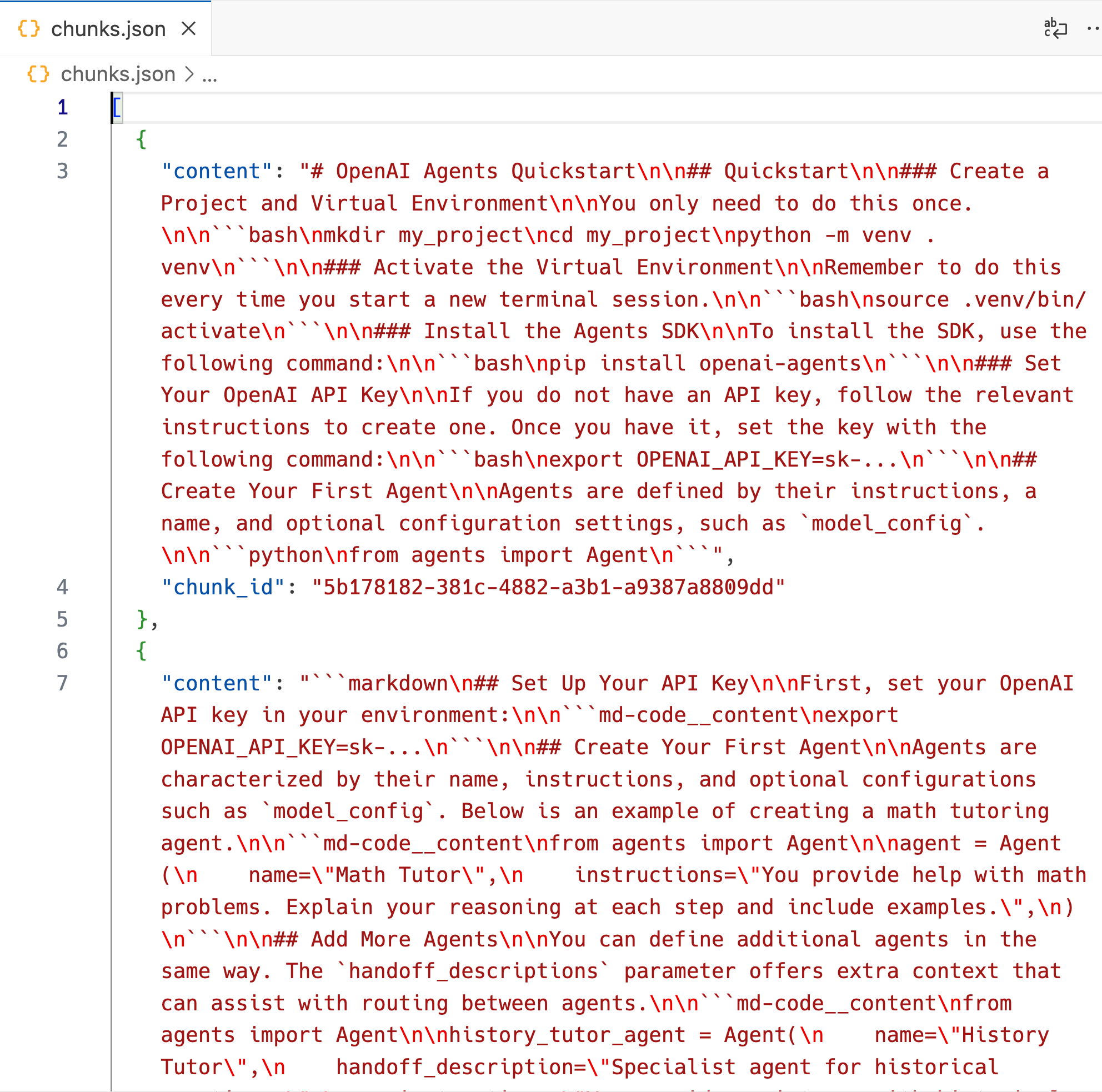

Chunking the dataset

In this step, we take the markdown file generated by the scraper and chunk it into a JSON dataset that looks like this (see chunk.py from our repository):

These chunks are generated by splitting the markdown document using LangChain’s RecursiveCharacterTextSplitter:

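from langchain_text_splitters import RecursiveCharacterTextSplitter

# Load the scraped markdown document
with open("full_text.md") as f:
    markdown_text = f.read()

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1024,  # Maximum characters per chunk
    chunk_overlap=256,  # Characters shared between neighboring chunks
)

# Split the document into overlapping chunks
chunks = text_splitter.split_text(markdown_text)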
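To build intuition for what `chunk_size` and `chunk_overlap` control, here is a deliberately simplified sliding-window splitter. It is not LangChain’s algorithm: the recursive splitter first tries to break on natural separators such as paragraphs and line breaks, whereas this hypothetical `chunk_text` function cuts at fixed character offsets.

```python
def chunk_text(text, chunk_size=1024, chunk_overlap=256):
    """Split text into fixed-size character windows that overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # How far each window advances.
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


sample = "abcdefghij" * 3  # 30 characters
demo_chunks = chunk_text(sample, chunk_size=12, chunk_overlap=4)
for chunk in demo_chunks:
    print(repr(chunk))
```

Each chunk repeats the last `chunk_overlap` characters of the previous one, so a sentence cut at a boundary still appears whole in at least one chunk when it is shorter than the overlap.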

The created chunks are not perfect - they may contain half-sentences, incorrect formatting, and unnecessary characters. For this reason, we pass them to OpenAI’s GPT-4o-mini model to improve their coherence. Here is a function we use for this task in chunk.py:

def improve_chunk_coherence(...):
    prompt = f"""
    You are a helpful assistant that improves the coherence of text.
    Please improve the coherence of the following text:

    {text}

    If the text contains code blocks, don't change the code. Wrap it in triple backticks
    along with the programming language. Don't make up facts or hallucinate. Your job is
    to make the text more coherent and easier to understand, only.

    If the text contains URLs or hyperlinks, remove them while preserving their text. For example:
    [This is a link](https://www.example.com) -> This is a link

    Only return the improved text, no other text or commentary.
    """

    try:
        response = client.responses.create(
            model=model,
            input=prompt,
        )
        return response.output_text
    except Exception as e:
        logger.error(f"Error improving chunk coherence: {e}")
        # Return original text if improvement fails
        return text

The most important part of this function is the prompt: it tells the model to leave code untouched, avoid inventing facts, and strip URLs while keeping their text.

Then, we process the chunks in parallel using multiple threads to speed up the execution. The final result is the JSON file you saw in the beginning containing the chunk text and a unique identifier:

[
  {
    "content": "# OpenAI Agents Quickstart\n\n#...",
    "chunk_id": "5b178182-381c-4882-a3b1-a9387a8809dd"
  },
  ...
]
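The parallel processing step can be sketched with a thread pool from the standard library. This is a minimal illustration, not the code from chunk.py: `process_chunks` and `fake_improve` are hypothetical names, and generating IDs with `uuid.uuid4()` is an assumption based on the shape of the `chunk_id` in the sample above.

```python
import uuid
from concurrent.futures import ThreadPoolExecutor


def process_chunks(chunks, improve_fn, max_workers=8):
    """Apply improve_fn to every chunk in parallel and attach a unique ID to each result."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order even though the calls run concurrently.
        improved = list(pool.map(improve_fn, chunks))
    return [
        {"content": text, "chunk_id": str(uuid.uuid4())}
        for text in improved
    ]


# Stand-in for the LLM call so the sketch runs offline.
def fake_improve(text):
    return " ".join(text.split())


records = process_chunks(["# Title \n\n body", "second   chunk"], fake_improve)
print(records[0]["content"])
```

Threads suit this workload because each call spends most of its time waiting on a network response rather than using the CPU.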

Generating QA pairs

After we have a chunked dataset, we want to create 5 QA pairs from each chunk. These pairs must teach something new to the LLM about the Agents SDK in a compatible format.

In practice, the logic of the chunking and QA pair generation steps is the most important aspect of your entire fine-tuning process. You must consider various factors like:

- The correct chunking strategy, including chunk size and quality

- The system prompt for chunking

- The system prompt for QA generation

- The number of QA pairs to generate for each chunk

- The overall dataset size

and so on. I would recommend that you spend most of your time figuring out these details as the quality of the dataset is crucial for getting a high-performing fine-tuned model.

In the generate_qa_pairs.py script, we chose these configurations mostly arbitrarily for demonstration purposes.

After we run the script, we get a new JSON file containing our final dataset:

Here is the system prompt we used for QA pair generation:

PROMPT = """

You are an expert at generating question-answer pairs from a given text. For each given text, you must generate 5 QA pairs.

Your generated questions-answer pairs will be fed into an LLM to train it on how to use the new OpenAI Agents SDK Python library.

In each question-answer pair, you must try to teach as much about the Agents SDK as possible to the LLM. Generate the pairs in a way that you would want be taught this new Agents SDK Python library.

Additional instructions:

- Your questions must not contain references to the text itself.

- Keep the difficulty of questions to hard.

- If the text contains code blocks, you must generate pairs that contain coding question-answers as we want the LLM to learn how to use the Agents SDK in Python.

- Don't generate pairs about the specific variables, functions or classes in code examples in the given text. Instead, generate coding snippets that are generic and aid the LLM's understanding of the Agents SDK's Python syntax.

- Wrap the code snippets in triple backticks with the programming language.

Here is the text:

{text}

"""

The script also implements rate limiting and retries to stay within OpenAI’s API limits and to recover from transient failures during pair generation.
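A common way to implement retries is exponential backoff with jitter. The sketch below is an assumption about how such a mechanism might look, not the logic from generate_qa_pairs.py; `call_with_retries` and its parameters are hypothetical, and a production version would usually catch only specific exceptions, such as rate-limit errors, rather than every `Exception`.

```python
import random
import time


def call_with_retries(fn, max_attempts=5, base_delay=1.0, max_delay=30.0,
                      sleep=time.sleep):
    """Call fn(), retrying on any exception with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the last error.
            # Delay doubles each attempt (1s, 2s, 4s, ...), capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Up to 10% random jitter spreads out retries from parallel workers.
            sleep(delay + random.uniform(0, delay * 0.1))


# Simulated API call that fails twice before succeeding.
attempts = {"count": 0}


def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("simulated rate limit")
    return "ok"


waits = []  # Record delays instead of actually sleeping.
result = call_with_retries(flaky, sleep=waits.append)
print(result, "after", attempts["count"], "attempts")
```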

In the final upload_to_hf.py script, we pushed the dataset to HuggingFace under the name “bexgboost/openai-agents-python-qa-firecrawl”. You can explore it here.

If you want to dive deeper into how this process of creating instruction datasets works, check out the separate article on our blog.

Step-by-step guide to fine-tuning Gemma 3 with Unsloth AI

This section walks you through the complete process of fine-tuning Google’s Gemma 3 language model using Unsloth AI’s optimization techniques. We’ll cover environment setup, model loading, dataset preprocessing, trainer configuration, and finally training and testing the model. I recommend following along with the code in Google Colab, as it offers T4 GPUs with 16GB of VRAM.

1. Environment setup

The first step involves installing all the necessary libraries for our fine-tuning task:

%%capture

!pip install -U datasets

!pip install -U accelerate

!pip install -U peft

!pip install -U trl

!pip install -U bitsandbytes

!pip install git+https://github.com/huggingface/transformers@v4.49.0-Gemma-3

!pip install -q unsloth

!pip install -q --force-reinstall --no-cache-dir --no-deps git+https://github.com/unslothai/unsloth.git

Here’s what each package does:

- datasets: HuggingFace’s library for efficiently loading, processing, and managing datasets

- accelerate: Simplifies distributed training across multiple GPUs and machines

- peft: Parameter-Efficient Fine-Tuning library that implements memory-efficient methods like LoRA

- trl: Transformer Reinforcement Learning library for fine-tuning language models

- bitsandbytes: Provides quantization techniques to reduce model memory usage

- transformers: HuggingFace’s main library for working with transformer models

- unsloth: A specialized library that optimizes LLM fine-tuning by reducing memory usage and training time

Note that we’re installing a specific version of transformers compatible with Gemma 3, and installing unsloth directly from its GitHub repository to ensure we have the latest features.

Next, we authenticate with the HuggingFace Hub to access the Gemma 3 model:

from huggingface_hub import login

hf_token = "hf_YOUR_TOKEN"

login(hf_token)

The login() function authenticates your HuggingFace credentials, allowing you to download models that require authentication, like Gemma 3. You can generate your own token from your HuggingFace settings page.

2. Loading the model and the tokenizer

Now we’ll load the Gemma 3 model and its tokenizer using Unsloth’s optimized loading:

from unsloth import FastModel

model, tokenizer = FastModel.from_pretrained(
    model_name = "unsloth/gemma-3-12b-it",
    max_seq_length = 2048, # Choose any for long context
    load_in_4bit = True, # 4 bit quantization to reduce memory
    full_finetuning = False,
    token = hf_token,
)

Let’s break down what’s happening:

- FastModel.from_pretrained(): Unsloth’s optimized method for loading models faster and with less memory than standard HuggingFace loading

- model_name: We’re using the instruction-tuned (it) version of Gemma 3 with 12 billion parameters from Unsloth. Due to usage limits in Colab, we can’t choose the full 27B version

- max_seq_length: Sets the maximum sequence length to 2048 tokens, which determines how much context the model can handle at once

- load_in_4bit: Enables 4-bit quantization, dramatically reducing memory requirements at the cost of minimal precision loss

- full_finetuning: Set to False to enable parameter-efficient fine-tuning instead of updating all model weights

- token: Your HuggingFace token for model access

This function returns two important objects:

- model: The loaded Gemma 3 model

- tokenizer: The tokenizer that converts text to tokens and back

The 4-bit quantization is particularly important as it allows fine-tuning large models like Gemma 3 (12B parameters) on consumer GPUs with limited VRAM.
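A back-of-the-envelope calculation shows why. The sketch below estimates the memory needed for the weights alone, treating the model as a nominal 12 billion parameters. It ignores quantization metadata, activations, LoRA adapters, and optimizer state, so real usage is higher; `weight_memory_gb` is a hypothetical helper written for this illustration.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


params_12b = 12e9  # Nominal parameter count for the 12B model.
mem_16bit = weight_memory_gb(params_12b, 16)
mem_4bit = weight_memory_gb(params_12b, 4)
print(f"16-bit weights: {mem_16bit:.1f} GB")
print(f" 4-bit weights: {mem_4bit:.1f} GB")
```

At 16 bits per parameter the weights alone exceed the 16GB available on a T4, while at 4 bits they leave room for activations and the LoRA training state.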

3. Preprocessing the dataset

Next, we load our custom dataset and prepare it for fine-tuning:

from datasets import load_dataset

dataset_name = "bexgboost/openai-agents-python-qa-firecrawl"

dataset = load_dataset(
    dataset_name, split = "train", trust_remote_code=True
)

- load_dataset(): HuggingFace’s function to load datasets from their hub

- dataset_name: The path to our custom dataset on HuggingFace Hub

- split: We’re using only the “train” split of the dataset

- trust_remote_code: Allows execution of code that might be included with the dataset

Now we need to format our dataset into the structure expected by Gemma 3:

EOS_TOKEN = tokenizer.eos_token

def format_instruction(example):
    prompt = """Below is an instruction that describes a task, paired with an input that provides further context.
Write a response that appropriately completes the request.
Before answering, think carefully about the question and create a step-by-step chain of thoughts to ensure a logical and accurate response.

### Instruction:
You are an expert Python developer with advanced knowledge of the OpenAI Agents SDK.
Please answer the following question about the OpenAI Agents SDK.

### Question:
{}

### Response:
{}"""
    return {
        "text": prompt.format(example['question'], example['answer']) + EOS_TOKEN
    }

dataset = dataset.map(format_instruction)

This code does several important things:

- EOS_TOKEN: Gets the end-of-sequence token from the tokenizer, which signals the model to stop generating text

- format_instruction(): A function that transforms each dataset example into a structured instruction format

- prompt: The template that formats our data in a way that Gemma 3 understands, with placeholders for questions and answers

- dataset.map(): Applies our formatting function to every example in the dataset

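The prompt template uses an Alpaca-style structure with clear sections for instructions, questions, and responses. This is not Gemma 3’s native chat format (which wraps turns in <start_of_turn> and <end_of_turn> markers), so the model learns this layout during fine-tuning, and the same layout should generally be reused at inference time.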
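To make the result of the formatting step concrete, the sketch below applies a shortened version of the template to a single made-up row. The `<eos>` string is only a placeholder: in the real code the value comes from `tokenizer.eos_token`. `TEMPLATE` and `format_example` are hypothetical names used for this illustration.

```python
TEMPLATE = """### Question:
{}

### Response:
{}"""

EOS_PLACEHOLDER = "<eos>"  # Stand-in; the real value comes from tokenizer.eos_token.


def format_example(example, eos_token=EOS_PLACEHOLDER):
    """Fill the template with one question-answer pair and append the EOS token."""
    return {"text": TEMPLATE.format(example["question"], example["answer"]) + eos_token}


row = {"question": "What does EOS stand for?", "answer": "End of sequence."}
formatted = format_example(row)
print(formatted["text"])
```

Appending the end-of-sequence token to every training example teaches the model where an answer ends; without it, a fine-tuned model tends to keep generating past the answer.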

Finally, we tokenize the formatted dataset:

def tokenize_function(examples):
    return tokenizer(examples["text"], padding=False)

# Manually preprocess and tokenize
processed_dataset = dataset.map(
    tokenize_function,
    batched=True,
    remove_columns=["text"], # Remove original text column
    desc="Tokenizing dataset",
)

- tokenize_function(): Converts text examples into token IDs that the model understands

- tokenizer(): The actual tokenization function from the tokenizer we loaded earlier

- padding=False: No padding is applied at this stage (will be handled by the trainer)

- batched=True: Process examples in batches for efficiency

- remove_columns=["text"]: Removes the original text column to save memory

- desc: Description for the progress bar

After this step, our dataset contains tokenized examples ready for training.

4. Setting up the SFTTrainer

Now we prepare our model for Parameter-Efficient Fine-Tuning (PEFT) using LoRA:

model = FastModel.get_peft_model(
    model,
    r=8,
    target_modules=[
        "q_proj",
        "k_proj",
        "v_proj",
        "o_proj",
        "gate_proj",
        "up_proj",
        "down_proj",
    ],
    finetune_vision_layers = False, # Turn off for just text!
    finetune_language_layers = True, # Should leave on!
    finetune_attention_modules = True, # Attention good for GRPO
    finetune_mlp_modules = True, # Should leave on always!
    lora_alpha=8,
    lora_dropout=0,
    bias="none",
    use_gradient_checkpointing="unsloth", # True or "unsloth" for very long context
    random_state=1000,
    use_rslora=False,
)

This code configures LoRA (Low-Rank Adaptation), a parameter-efficient fine-tuning technique:

- r=8: The rank of the LoRA matrices (lower values = fewer parameters but less expressivity)

- target_modules: The specific model layers to apply LoRA to, focusing on attention and MLP components

- finetune_vision_layers: Disabled because we’re only working with text

- finetune_language_layers: Enabled to fine-tune the language understanding capabilities

- finetune_attention_modules: Enabled to fine-tune attention mechanisms (important for question answering)

- finetune_mlp_modules: Enabled to fine-tune multilayer perceptrons

- lora_alpha: Scaling factor for the LoRA updates (higher values = stronger updates)

- lora_dropout: Dropout rate for LoRA layers (0 = no dropout)

- bias: Whether to train bias terms (“none” = don’t train)

- use_gradient_checkpointing: Memory optimization technique, set to Unsloth’s implementation

- random_state: Random seed for reproducibility

- use_rslora: Whether to use rank-stabilized LoRA (disabled here)
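The effect of `r` and `lora_alpha` is easier to see with numbers. LoRA replaces the update to a weight matrix with the product of two small matrices of rank `r`, scaled by `lora_alpha / r` (or `lora_alpha / sqrt(r)` with rank-stabilized LoRA). The sketch below works through the arithmetic for a single layer; the 4096 x 4096 layer size is a hypothetical value chosen only for illustration and is not Gemma 3’s actual dimension.

```python
def lora_trainable_params(d_in, d_out, r):
    """Parameters in the two low-rank factors A (r x d_in) and B (d_out x r)."""
    return r * (d_in + d_out)


def lora_scale(lora_alpha, r, use_rslora=False):
    """Multiplier applied to the low-rank update B @ A."""
    return lora_alpha / (r ** 0.5) if use_rslora else lora_alpha / r


d_in = d_out = 4096  # Hypothetical layer size, chosen only for illustration.
full = d_in * d_out
lora = lora_trainable_params(d_in, d_out, r=8)
scale = lora_scale(lora_alpha=8, r=8)
print(f"full matrix: {full:,} params")
print(f"LoRA r=8:    {lora:,} params ({100 * lora / full:.2f}% of full)")
print(f"scale with alpha=8, r=8: {scale}")
```

With `lora_alpha` equal to `r`, as in the configuration above, the scale is 1. Doubling `lora_alpha` doubles the strength of the update without adding any parameters.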

Next, we set up the Supervised Fine-Tuning Trainer:

from trl import SFTTrainer, SFTConfig

trainer = SFTTrainer(
    model = model,
    tokenizer = tokenizer,
    train_dataset = processed_dataset,
    eval_dataset = None, # Can set up evaluation!
    args = SFTConfig(
        dataset_text_field = "text",
        dataset_kwargs = {"skip_prepare_dataset": True},
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 4,
        warmup_steps = 5,
        num_train_epochs = 2,
        learning_rate = 2e-4,
        logging_steps = 5,
        optim = "adamw_8bit",
        weight_decay = 0.01,
        lr_scheduler_type = "linear",
        seed = 3407,
        report_to = "none",
    ),
)

The SFTTrainer handles the fine-tuning process:

- model: Our PEFT-configured model

- tokenizer: Our tokenizer

- train_dataset: Our processed dataset

- eval_dataset: No evaluation dataset provided (could be added for monitoring)

- args: Configuration for training via SFTConfig:
  - dataset_text_field: The name of the text field in our dataset
  - dataset_kwargs: Additional dataset processing arguments (here, skip_prepare_dataset tells the trainer that the dataset is already tokenized)
  - per_device_train_batch_size: Number of examples per GPU (small due to model size)
  - gradient_accumulation_steps: Accumulate gradients across multiple batches to simulate larger batches
  - warmup_steps: Number of steps to gradually increase learning rate
  - num_train_epochs: Total training epochs (2 is often sufficient for LoRA fine-tuning)
  - learning_rate: Learning rate for optimization (2e-4 is a common starting point for LoRA fine-tuning)
  - logging_steps: How often to log training metrics
  - optim: Optimizer to use (adamw_8bit for memory efficiency)
  - weight_decay: Weight decay (regularization) strength
  - lr_scheduler_type: Learning rate schedule type
  - seed: Random seed for reproducibility
  - report_to: Where to send metrics ("none" disables external loggers such as wandb)

These values are reasonable starting points that keep memory usage within the GPU’s limits. Treat them as defaults to tune rather than as optimal settings.
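Two of these settings interact in ways worth working out by hand: the effective batch size, and the shape of the linear learning rate schedule with warmup. The sketch below is a rough illustration under stated assumptions. The dataset size of 1,000 examples is hypothetical, the step count is approximate (the trainer's exact count depends on how it handles the last partial batch), and `linear_lr` is a simplified re-implementation of a linear warmup and decay schedule rather than the trainer's own code.

```python
import math


def effective_batch_size(per_device_batch, grad_accum_steps, num_devices=1):
    """Number of examples contributing to each optimizer update."""
    return per_device_batch * grad_accum_steps * num_devices


def linear_lr(step, total_steps, base_lr=2e-4, warmup_steps=5):
    """Learning rate at an optimizer step: linear warmup, then linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


num_examples = 1000  # Hypothetical dataset size, for illustration only.
batch = effective_batch_size(per_device_batch=2, grad_accum_steps=4)
total_steps = math.ceil(num_examples / batch) * 2  # 2 epochs
print(f"effective batch size: {batch}")
print(f"approximate optimizer steps: {total_steps}")
for step in (0, 5, total_steps // 2, total_steps):
    print(f"step {step:>3}: lr = {linear_lr(step, total_steps):.2e}")
```

Gradient accumulation gives the optimization behavior of a batch of 8 while only ever holding 2 examples in GPU memory at once.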

5. Model training and testing

With everything set up, we can now train our model:

trainer.train()

This single line kicks off the entire training process. The train() method will:

- Prepare batches from our dataset

- Feed them through the model

- Calculate loss based on prediction errors

- Backpropagate gradients

- Update the LoRA parameters

- Log progress and metrics

The training will run for 2 epochs as specified, with all the configuration we’ve set up previously.

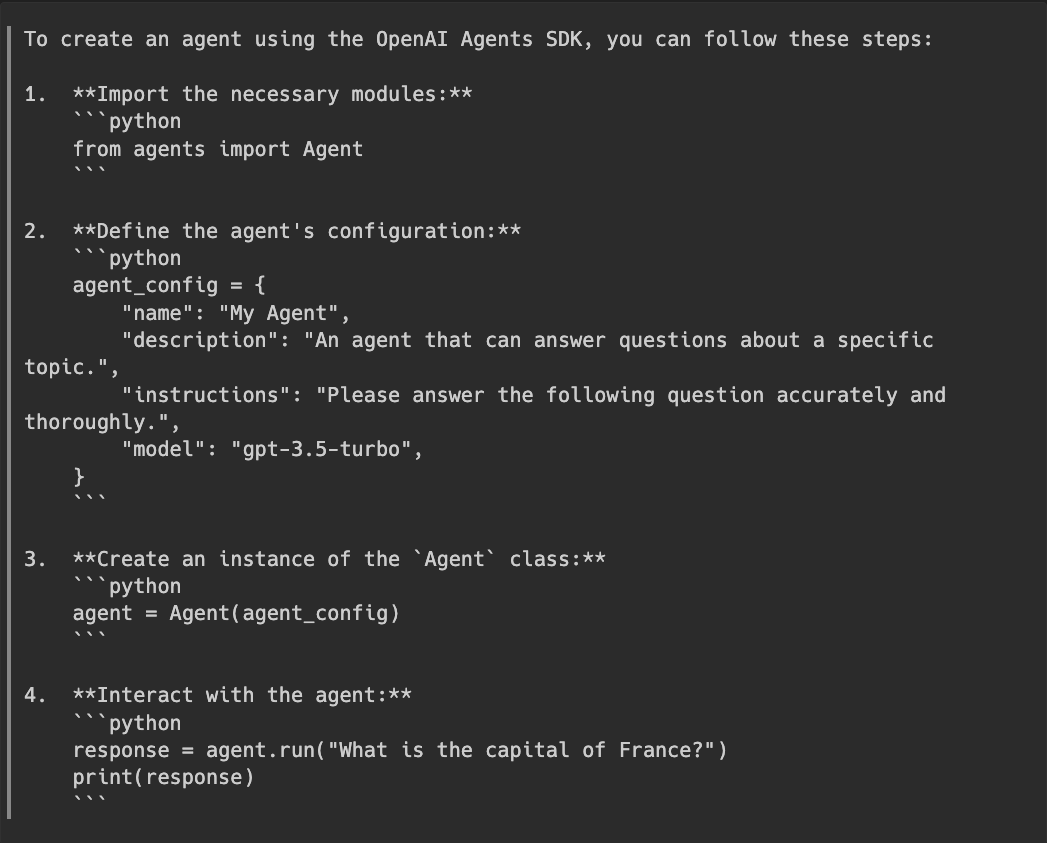

After training completes, we can test our fine-tuned model:

from transformers import TextStreamer

prompt = """Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

{}

### Response:

{}

"""

FastModel.for_inference(model)

instruction = "How do you create an agent using OpenAI Agents SDK?"

message = prompt.format(instruction, "")

inputs = tokenizer([message], return_tensors="pt").to("cuda")

text_streamer = TextStreamer(tokenizer)

_ = model.generate(**inputs, streamer=text_streamer, max_new_tokens=512, use_cache=True)

This test code:

- FastModel.for_inference(): Prepares the model for efficient inference

- prompt: Creates a template similar to our training format

- instruction: A test question about the OpenAI Agents SDK

- message: Formats our instruction into the prompt template

- inputs: Tokenizes the message and moves it to the GPU

- TextStreamer: Creates a streamer that will print tokens as they’re generated

- model.generate(): Runs text generation with our model

- streamer: Uses the text streamer to display results in real time

- max_new_tokens: Limits generation to 512 new tokens

- use_cache: Enables key-value caching for faster generation
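The generated sequence contains the prompt followed by the model's continuation, so if you decode the full output yourself you will usually want to keep only the answer. The sketch below shows one way to do that with plain string handling. `extract_response` is a hypothetical helper, and `<eos>` is a placeholder for whatever end-of-sequence token your tokenizer uses.

```python
RESPONSE_MARKER = "### Response:"


def extract_response(generated_text, eos_token=None):
    """Return only the text after the last response marker, minus any trailing EOS token."""
    _, found, tail = generated_text.rpartition(RESPONSE_MARKER)
    # If the marker is missing, fall back to the whole text.
    answer = tail if found else generated_text
    if eos_token and eos_token in answer:
        answer = answer.split(eos_token, 1)[0]
    return answer.strip()


decoded = "### Instruction:\nSay hi.\n\n### Response:\nHello there!<eos>"
answer = extract_response(decoded, eos_token="<eos>")
print(answer)
```

When streaming, `TextStreamer` also accepts a `skip_prompt=True` argument that suppresses the echoed prompt.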

Here is the model’s response to our question:

Unfortunately, the model is wide of the mark here. It now correctly recognizes the Agents framework, but the syntax it produces is incorrect. This can be due to various factors such as low dataset quality, a small dataset, poorly chosen training parameters, and other details we didn’t spend much time optimizing. These limitations highlight the importance of high-quality training data and careful parameter selection when fine-tuning language models.

Conclusion

This guide has walked you through the complete process of fine-tuning Gemma 3 using Unsloth AI’s optimization techniques. By using parameter-efficient methods like LoRA and memory-saving approaches like 4-bit quantization, we can fine-tune large language models even on consumer hardware.

The key advantages of this approach include:

- Reduced memory requirements through quantization and gradient checkpointing

- Faster training through Unsloth’s optimizations

- Minimal parameters to update through LoRA

- Simple workflow through integrated libraries like TRL

While our example focused on a specific dataset about the OpenAI Agents SDK, this same approach can be applied to any domain-specific dataset for creating specialized AI assistants. Here are some resources for further exploration:

- Colab notebook used during fine-tuning

- The entire code for this article

- Firecrawl documentation and blog

Thank you for reading!
