Introduction

Web scraping and data extraction have become essential tools as businesses race to convert unprecedented amounts of online data into LLM-friendly formats. Firecrawl's powerful web scraping API streamlines this process with enterprise-grade automation and scalability features.

This guide focuses on Firecrawl's most powerful feature: the crawl method, which makes automated website scraping possible at scale. You'll learn how to:

- Recursively traverse website sub-pages

- Handle dynamic JavaScript-based content

- Handle complex web infrastructure reliably

- Extract clean, structured data for AI/ML applications

Table of Contents

- Introduction

- Web Scraping vs Web Crawling: Understanding the Key Differences

- Step-by-Step Guide to Web Crawling with Firecrawl's API

- Advanced Web Scraping Configuration and Best Practices

- Asynchronous Web Crawling with Firecrawl

- What Is New in Crawl v2?

- How to Save and Store Web Crawling Results

- Building AI-Powered Web Crawlers with Firecrawl and LangChain Integration

- Ready to Crawl at Scale

Web Scraping vs Web Crawling: Understanding the Key Differences

What's the Difference?

Web scraping refers to extracting specific data from individual web pages, such as a Wikipedia article or technical tutorial. It's primarily used when you need specific information from pages with known URLs. For detailed guidance on single-page extraction, see our complete guide to Firecrawl's scrape endpoint.

Web crawling, on the other hand, involves systematically browsing and discovering web pages by following links, focusing on website navigation and URL discovery.

For example, to build a chatbot that answers questions about Stripe's documentation, you would need:

- Web crawling to discover and traverse all pages in Stripe's documentation site

- Web scraping to extract the actual content from each discovered page

This is exactly what we demonstrate in our guide on turning any website into a chatbot. For more on converting websites into agents, see our website-to-agent guide and the Firestarter announcement. To prepare website data for LLMs, see How to Create an llms.txt File.

How Firecrawl Combines Both

Firecrawl's crawl method combines both capabilities:

- URL analysis: Identifies links through sitemap or page traversal (you can also use the map endpoint to discover URLs first)

- Recursive traversal: Follows links to discover sub-pages

- Content scraping: Extracts clean content from each page

- Results compilation: Converts everything to structured data

When you pass the URL https://docs.stripe.com/api to the method, it automatically discovers and crawls all documentation sub-pages. The method returns the content in your preferred format (markdown, HTML, screenshots, links, or metadata).
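To make the discovery and traversal steps concrete, here is a toy model of recursive crawling: a breadth-first walk over a hypothetical in-memory link graph (`SITE`) with a page limit and a set of already-seen URLs. It illustrates the general technique under those assumptions and is not Firecrawl's actual implementation.

```python
from collections import deque

# Hypothetical link graph standing in for real pages: URL -> links found on that page
SITE = {
    "/": ["/catalogue/page-2", "/category/travel", "/category/mystery"],
    "/catalogue/page-2": ["/", "/catalogue/page-3"],
    "/catalogue/page-3": ["/catalogue/page-2"],
    "/category/travel": ["/", "/book/1"],
    "/category/mystery": ["/", "/book/2"],
    "/book/1": ["/category/travel"],
    "/book/2": ["/category/mystery"],
}


def crawl(start: str, limit: int) -> list[str]:
    """Breadth-first traversal that visits each URL once and stops after `limit` pages."""
    visited: list[str] = []
    seen = {start}  # URLs already queued, so duplicate links are ignored
    queue = deque([start])
    while queue and len(visited) < limit:
        url = queue.popleft()
        visited.append(url)  # in a real crawler, this is where the page would be scraped
        for link in SITE.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


print(crawl("/", limit=5))
# ['/', '/catalogue/page-2', '/category/travel', '/category/mystery', '/catalogue/page-3']
```

The `seen` set is what keeps the crawl from looping forever on pages that link back to each other, and `limit` caps the total work regardless of how large the graph is.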

Step-by-Step Guide to Web Crawling with Firecrawl's API

Firecrawl is a web scraping engine exposed as a REST API. You can use it from the command line with cURL or through one of its language SDKs for Python, Node, Go, or Rust. This tutorial focuses on the Python SDK.

To get started:

- Sign up at firecrawl.dev and copy your API key

- Save the key as an environment variable:

export FIRECRAWL_API_KEY='fc-YOUR-KEY-HERE'

Or use a dot-env file:

touch .env

echo "FIRECRAWL_API_KEY='fc-YOUR-KEY-HERE'" >> .env

Then use the Python SDK:

from firecrawl import Firecrawl # pip install firecrawl-py

from dotenv import load_dotenv # pip install python-dotenv

import os

load_dotenv()

app = Firecrawl()

Once your API key is loaded, the Firecrawl class uses it to establish a connection to the Firecrawl API engine.

Note that if you're on the free plan, you may hit API rate limits when you make many requests in a short period of time.
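If you do run into rate limits, a common client-side mitigation is to retry with exponential backoff. The sketch below wraps any zero-argument callable. It assumes rate-limit failures surface as exceptions, and `RateLimitError` is a hypothetical placeholder: substitute whatever exception your SDK version actually raises.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical placeholder for the error your client raises on HTTP 429."""


def with_backoff(fn: Callable[[], T], retries: int = 5, base_delay: float = 1.0) -> T:
    """Call fn(), retrying on RateLimitError with exponentially growing, jittered delays."""
    for attempt in range(retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == retries:
                raise  # out of retries: surface the error to the caller
            # base_delay * 1, 2, 4, ... plus up to 10% random jitter to avoid synchronized retries
            delay = base_delay * (2 ** attempt)
            time.sleep(delay + random.uniform(0, delay * 0.1))
    raise AssertionError("unreachable when retries >= 0")
```

Usage would look like `with_backoff(lambda: app.crawl(url="https://books.toscrape.com/", limit=20))`. The jitter spreads out retries from several clients so they don't all hit the API at the same moment.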

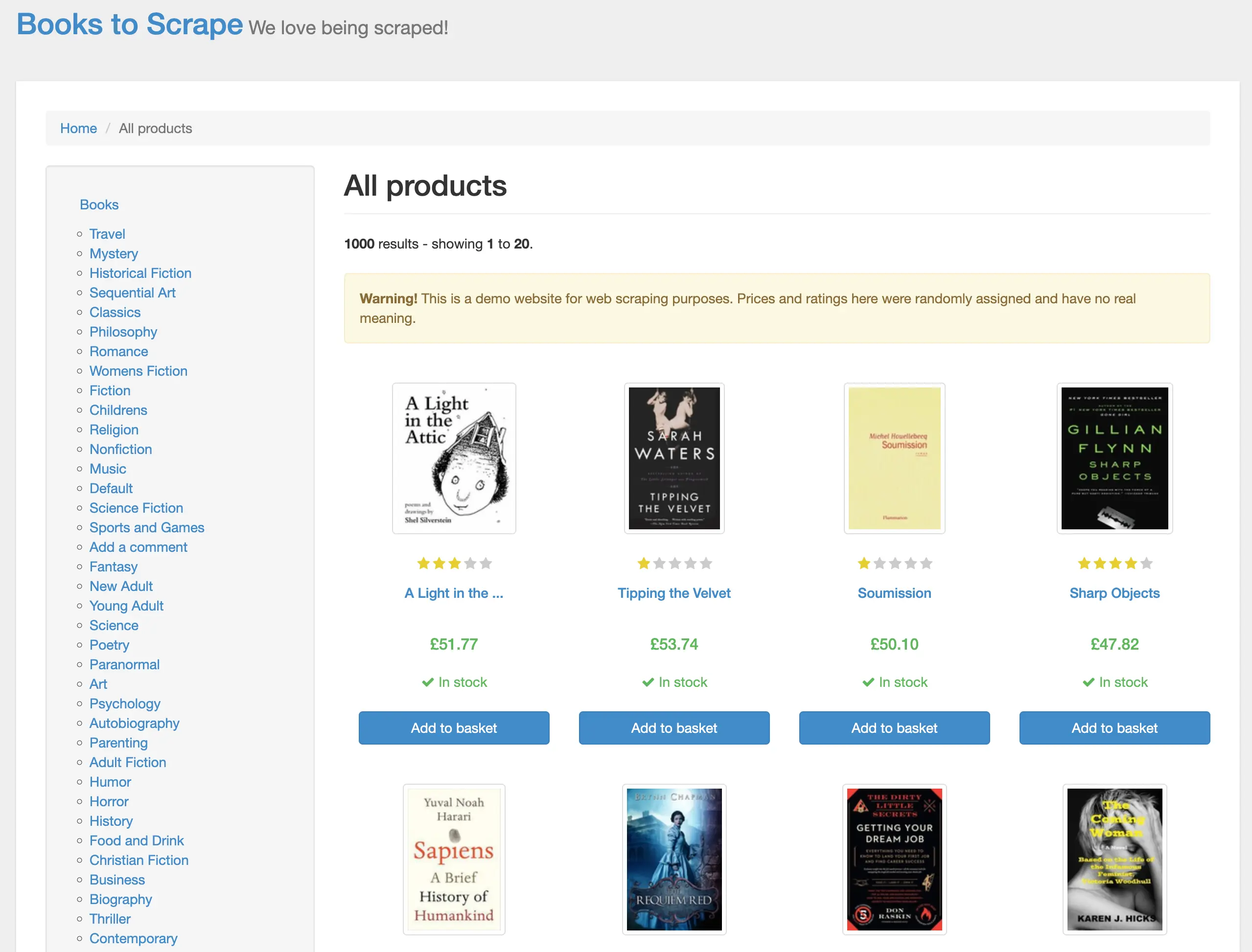

First, we'll crawl the https://books.toscrape.com/ website, which is designed for web scraping practice:

Instead of writing dozens of lines of code with libraries like beautifulsoup4 or lxml to parse HTML elements, handle pagination, and retrieve data, Firecrawl's crawl method accomplishes this in a single line:

base_url = "https://books.toscrape.com/"

crawl_result = app.crawl(url=base_url, limit=20)

The result is a CrawlJob object with the following attributes:

print(f"Status: {crawl_result.status}")

print(f"Total pages: {crawl_result.total}")

print(f"Credits used: {crawl_result.credits_used}")

print(f"Completed: {crawl_result.completed}")

Status: completed

Total pages: 3

Credits used: 3

Completed: 3

First, we are interested in the status of the crawl job:

crawl_result.status

'completed'

If it is completed, let's see how many pages were scraped:

crawl_result.total

3

That's 3 pages (this took about 8 seconds on my machine; speed varies based on your connection and website complexity). Let's examine one of the elements in the data list:

sample_page = crawl_result.data[0]

markdown_content = sample_page.markdown

print(markdown_content[:500])

- [Home](https://books.toscrape.com/index.html)

- All products

# All products

**1000** results - showing **1** to **20**.

**Warning!** This is a demo website for web scraping purposes. Prices and ratings here were randomly assigned and have no real meaning.

01. [](https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html)

Firecrawl also includes page metadata in each element's metadata attribute:

sample_page.metadata.model_dump()

{

'title': '\n All products | Books to Scrape - Sandbox\n',

'description': '',

'url': 'https://books.toscrape.com/',

'language': 'en-us',

'keywords': None,

'robots': 'NOARCHIVE,NOCACHE',

...

}

One thing we haven't mentioned is how Firecrawl handles pagination. If you scroll to the bottom of Books-to-Scrape, you'll see that it has a "next" button.

Firecrawl first scrapes all sub-pages linked from the homepage before moving on to deeper sub-pages like books.toscrape.com/category. If a sub-page includes links to pages that have already been scraped, those links are ignored.
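Ignoring already-scraped links depends on recognizing when two URLs point to the same page. Here is a minimal normalization sketch using only the standard library. The rules it applies (resolve relative links, lowercase the scheme and host, drop `#fragments`, treat a trailing `index.html` as its directory) are illustrative assumptions, not Firecrawl's exact deduplication logic.

```python
from urllib.parse import urljoin, urlsplit, urlunsplit


def normalize(base: str, href: str) -> str:
    """Resolve href against base and canonicalize it for duplicate detection."""
    parts = urlsplit(urljoin(base, href))
    path = parts.path or "/"
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]  # ".../index.html" -> ".../"
    # Lowercase scheme and host, keep the query string, drop the fragment
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


base = "https://books.toscrape.com/catalogue/page-2.html"
links = ["../index.html", "https://Books.toscrape.com/", "page-3.html", "page-3.html#top"]

seen = set()
unique = []
for href in links:
    url = normalize(base, href)
    if url not in seen:  # skip links to pages we've already queued
        seen.add(url)
        unique.append(url)

print(unique)
# ['https://books.toscrape.com/', 'https://books.toscrape.com/catalogue/page-3.html']
```

Four raw links collapse to two distinct pages, which is the kind of saving that keeps a crawl from re-scraping the homepage every time a sub-page links back to it.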

Advanced Web Scraping Configuration and Best Practices

Firecrawl offers several groups of parameters for configuring how the crawl method works with websites. We outline them below, along with their use cases.

Scrape Options

In real-world projects, scrape_options is the parameter you'll adjust most frequently. It controls how each page's content is scraped and returned. Firecrawl supports the following formats:

- Markdown (default)

- HTML

- Raw HTML (complete webpage copy)

- Links

- Screenshot

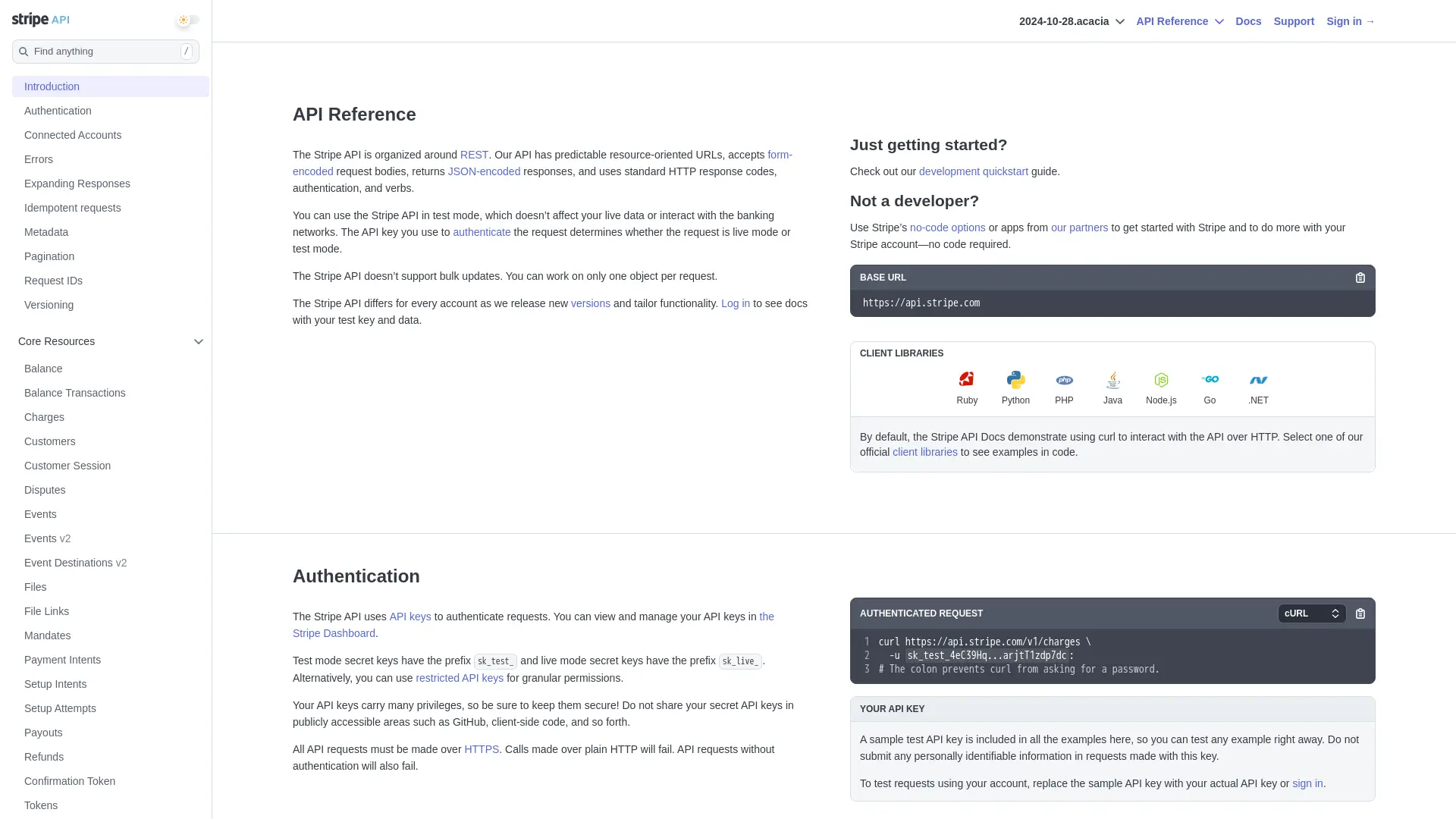

Here's an example request to crawl the Stripe API in multiple formats:

from firecrawl.v2.types import ScrapeOptions

# Crawl the first 2 pages of the stripe API documentation

stripe_crawl_result = app.crawl(

url="https://docs.stripe.com/api",

limit=2, # Only scrape the first 2 pages including the base-url

scrape_options=ScrapeOptions(

formats=["markdown", "html", "links", "screenshot"] # Multiple formats for testing

)

)

When you specify multiple formats, each page object stores each format's content in a separate attribute:

# Check which formats are populated in the first result
sample = stripe_crawl_result.data[0]
print(f"Has markdown: {sample.markdown is not None}")
print(f"Has html: {sample.html is not None}")
print(f"Has links: {sample.links is not None}")
print(f"Has screenshot: {sample.screenshot is not None}")

if sample.links:
    print(f"Number of links found: {len(sample.links)}")
    print(f"First few links: {sample.links[:3]}")

Has markdown: True

Has html: True

Has links: True

Has screenshot: True

Number of links found: 55

First few links: ['https://www.hcaptcha.com/what-is-hcaptcha-about?ref=b.stripecdn.com&utm_campaign=5034f7f0-a742-48aa-89e2-062ece60f0d6&utm_medium=challenge&hl=en', 'https://docs.stripe.com/api-v2-overview#main-content', 'https://dashboard.stripe.com/register']

The value of the screenshot attribute is a temporary link to a PNG file stored on Firecrawl's servers, and it expires within 24 hours. Here is what it looks like for Stripe's API documentation homepage:

# In Jupyter Notebook

from IPython.display import Image

Image(stripe_crawl_result.data[0].screenshot)
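Because the screenshot link is temporary, you may want to download the image right after the crawl. A minimal standard-library sketch follows; `download_screenshot` is a hypothetical helper, and the destination path is an illustrative choice.

```python
import urllib.request
from pathlib import Path


def download_screenshot(url: str, dest: str) -> Path:
    """Fetch the image at `url` and write its bytes to `dest`, creating parent folders."""
    path = Path(dest)
    path.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url, timeout=30) as response:
        path.write_bytes(response.read())
    return path
```

For example: `download_screenshot(stripe_crawl_result.data[0].screenshot, "screenshots/stripe_api.png")`.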

Note that specifying more formats for page content transformation can considerably slow down the process.

Another time-consuming operation is scraping entire page contents instead of just the elements you need. For such scenarios, Firecrawl lets you control which webpage elements are scraped using the only_main_content, include_tags, and exclude_tags parameters. For AI-powered structured data extraction, consider using the extract endpoint, which can automatically identify and extract specific data fields.

Setting only_main_content=True excludes navigation, headers, and footers:

stripe_crawl_result = app.crawl(

url="https://docs.stripe.com/api",

limit=5,

scrape_options=ScrapeOptions(

formats=["markdown", "html"],

only_main_content=True,

)

)

include_tags and exclude_tags accept lists of HTML tags, classes (prefixed with .), and IDs (prefixed with #) to keep or remove, respectively:

# Crawl the first 5 pages of the stripe API documentation

stripe_crawl_result = app.crawl(

url="https://docs.stripe.com/api",

limit=5,

scrape_options=ScrapeOptions(

formats=["markdown", "html"],

include_tags=["code", "#page-header"],

exclude_tags=["h1", "h2", ".main-content"],

)

)

Crawling large websites can take considerable time, and when appropriate, these small tweaks can greatly impact runtime.
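The entries in include_tags and exclude_tags follow CSS-like conventions: a bare name targets a tag, `.name` a class, and `#name` an ID. The hypothetical helpers below show how such lists could be checked against a single element. This is a simplified illustration of the selector syntax only; the rule that exclusions win over inclusions is an assumption, and it is not how Firecrawl evaluates filters internally.

```python
from typing import Sequence


def matches(selector: str, tag: str, element_id: str = "", classes: tuple[str, ...] = ()) -> bool:
    """Return True if a simple selector ('h1', '.cls', '#id') matches the element."""
    if selector.startswith("#"):
        return selector[1:] == element_id
    if selector.startswith("."):
        return selector[1:] in classes
    return selector.lower() == tag.lower()


def keep_element(
    tag: str,
    element_id: str = "",
    classes: tuple[str, ...] = (),
    include: Sequence[str] = (),
    exclude: Sequence[str] = (),
) -> bool:
    """Exclusions win; if an include list is given, the element must match one entry."""
    if any(matches(s, tag, element_id, classes) for s in exclude):
        return False
    if include:
        return any(matches(s, tag, element_id, classes) for s in include)
    return True


include = ["code", "#page-header"]
exclude = ["h1", "h2", ".main-content"]
print(keep_element("code", include=include, exclude=exclude))  # True
print(keep_element("div", "page-header", include=include, exclude=exclude))  # True
print(keep_element("h1", include=include, exclude=exclude))  # False
print(keep_element("p", classes=("main-content",), include=include, exclude=exclude))  # False
```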

URL Control

Beyond scraping configurations, you have three main options to specify URL patterns for inclusion or exclusion during crawling:

- include_paths: targeting specific sections

- exclude_paths: avoiding unwanted content

- allow_external_links: managing external content

Here's a sample request that uses these parameters:

# Example of URL control parameters

url_control_result = app.crawl(

url="https://docs.stripe.com/",

# Only crawl pages under the /payments path

include_paths=["/payments/*"],

# Skip the terminal and financial-connections sections

exclude_paths=["/terminal/*", "/financial-connections/*"],

# Don't follow links to external domains

allow_external_links=False,

scrape_options=ScrapeOptions(

formats=["html"]

)

)

# Print the total number of pages crawled

print(f"Total pages crawled: {url_control_result.total}")

Total pages crawled: 5

In this example, we're crawling the Stripe documentation website with specific URL control parameters:

- The crawler starts at https://docs.stripe.com/ and only crawls pages under the "/payments/*" path.

- It explicitly excludes the "/terminal/*" and "/financial-connections/*" sections.

- External links are ignored (allow_external_links=False).

- The scraping is configured to only capture HTML content.

URL control focuses the crawl on relevant content while avoiding unnecessary pages, making it more efficient and focused on specific documentation sections.
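Before spending credits, you can check locally which URLs a combination of include and exclude patterns would admit. This sketch assumes the patterns are regular expressions searched against the URL path (the params-preview output later in this guide returns regex-style patterns such as `api/.*`); the anchored patterns below are our own, `allowed` is a hypothetical helper, and you should confirm Firecrawl's exact matching rules in its documentation.

```python
import re
from urllib.parse import urlsplit


def allowed(url: str, include_paths: list[str], exclude_paths: list[str]) -> bool:
    """Apply exclude patterns first, then require an include match (if any are given)."""
    path = urlsplit(url).path
    if any(re.search(p, path) for p in exclude_paths):
        return False
    return not include_paths or any(re.search(p, path) for p in include_paths)


include = [r"^/payments(/|$)"]
exclude = [r"^/terminal/", r"^/financial-connections/"]

for url in [
    "https://docs.stripe.com/payments/checkout",  # True: under /payments
    "https://docs.stripe.com/terminal/readers",   # False: excluded section
    "https://docs.stripe.com/billing",            # False: no include match
]:
    print(url, allowed(url, include, exclude))
```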

Another important parameter is max_discovery_depth, which controls how many levels deep the crawler traverses from the starting URL. For example, a max_discovery_depth of 1 means it will crawl the initial page and the pages linked from it, but won't go deeper.

Here's another sample request for the Stripe API docs that illustrates this parameter:

# Example of limiting crawl depth

url_control_result = app.crawl(

url="https://docs.stripe.com/",

limit=100,

max_discovery_depth=2,

allow_external_links=False,

scrape_options=ScrapeOptions(

formats=["html"]

)

)

# Print the total number of pages crawled

print(f"Total pages crawled: {url_control_result.total}")

Total pages crawled: 10

Note: When a page has pagination (e.g., pages 2, 3, 4), these paginated pages are not counted as additional depth levels when using max_discovery_depth.
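The pagination rule can be modeled as a breadth-first traversal where following a pagination link costs zero depth and any other link costs one. In this sketch the link graph, the `is_pagination` rule (URLs containing `page-`), and counting the start URL as depth 0 are all assumptions made for illustration; check the Firecrawl docs for the exact convention.

```python
from collections import deque

# Hypothetical site: URL -> outgoing links
LINKS = {
    "/": ["/page-2", "/section-a"],
    "/page-2": ["/page-3", "/section-b"],
    "/page-3": ["/section-c"],
    "/section-a": ["/section-a/detail"],
    "/section-b": [],
    "/section-c": [],
    "/section-a/detail": ["/section-a/detail/deeper"],
    "/section-a/detail/deeper": [],
}


def is_pagination(url: str) -> bool:
    return "page-" in url  # assumption: paginated URLs are recognizable by name


def crawl_with_depth(start: str, max_depth: int) -> dict[str, int]:
    """Return {url: depth} for every URL reachable within max_depth."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        for link in LINKS.get(url, []):
            step = 0 if is_pagination(link) else 1
            depth = depths[url] + step
            # Record the link only if it is within range and shallower than any earlier path
            if depth <= max_depth and depth < depths.get(link, max_depth + 1):
                depths[link] = depth
                # 0-1 BFS: zero-cost pagination links go to the front of the queue
                if step == 0:
                    queue.appendleft(link)
                else:
                    queue.append(link)
    return depths


print(crawl_with_depth("/", max_depth=1))
# {'/': 0, '/page-2': 0, '/section-a': 1, '/page-3': 0, '/section-b': 1, '/section-c': 1}
```

Pages 2 and 3 stay at depth 0, so the sections they link to still fit within a depth limit of 1, while `/section-a/detail` (two ordinary links away) is left out.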

Performance & Limits

The limit parameter, which we've used in previous examples, is important for controlling the scope of web crawling. It sets a maximum number of pages that will be scraped, which is especially important when crawling large websites or when external links are enabled. Without this limit, the crawler could potentially traverse an endless chain of connected pages, consuming unnecessary resources and time.

While the limit parameter helps control the breadth of crawling, you may also need to ensure the quality and completeness of each page crawled. To make sure all desired content is scraped, you can set a waiting period that lets pages fully load. This helps with websites that use JavaScript to render dynamic content, embed content in iFrames, or include heavy media elements like videos or GIFs:

stripe_crawl_result = app.crawl(

url="https://docs.stripe.com/api",

limit=5,

scrape_options=ScrapeOptions(

formats=["markdown", "html"],

wait_for=1000, # wait for a second for pages to load

timeout=10000, # timeout after 10 seconds

)

)

The above code also sets the timeout parameter to 10000 milliseconds (10 seconds), so if a page takes too long to load, the crawler moves on rather than getting stuck.

Note: The wait_for duration applies to all pages the crawler encounters.

Asynchronous Web Crawling with Firecrawl

Even after following the tips and best practices from the previous section, the crawling process can take considerable time for large websites with thousands of pages. To handle this well, Firecrawl provides asynchronous crawling capabilities that allow you to start a crawl and monitor its progress without blocking your application. This is especially useful when building web applications or services that need to remain responsive while crawling is in progress.

Asynchronous programming in a nutshell

First, let's understand asynchronous programming with a real-world analogy:

Asynchronous programming is like a restaurant server taking multiple orders at once. Instead of waiting at one table until customers finish their meal before moving to the next, they can take orders from multiple tables, submit them to the kitchen, and handle other tasks while food is being prepared.

In programming terms, this means your code can initiate multiple operations (such as web requests or database queries) and continue executing other tasks while waiting for responses, rather than processing everything sequentially.

This approach is especially valuable in web crawling, where most time is spent waiting for network responses. Instead of freezing the entire application while waiting for each page to load, async programming allows you to process multiple pages concurrently, dramatically improving efficiency.
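A small standard-library illustration of the idea: three simulated page fetches, each "waiting on the network" for 0.2 seconds via `asyncio.sleep`, run concurrently with `asyncio.gather`, so the total is roughly 0.2 seconds rather than 0.6. The `fake_fetch` function is a stand-in, not a Firecrawl call; Firecrawl's start_crawl achieves a similar effect by running the job on its servers while your code carries on.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float = 0.2) -> str:
    """Stand-in for a network request: yields control while 'waiting' for the response."""
    await asyncio.sleep(delay)
    return f"content of {url}"


async def main() -> list[str]:
    urls = ["/page-1", "/page-2", "/page-3"]
    # gather() runs all coroutines concurrently and returns results in input order
    return await asyncio.gather(*(fake_fetch(u) for u in urls))


start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
print(results)
print(f"{elapsed:.2f}s")  # roughly 0.2s, not 0.6s
```

Inside a Jupyter notebook, which already runs an event loop, use `results = await main()` instead of `asyncio.run(main())`.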

Using start_crawl method

Firecrawl offers an intuitive asynchronous crawling method via start_crawl:

app = Firecrawl(api_key=os.getenv('FIRECRAWL_API_KEY'))

crawl_status = app.start_crawl(url="https://docs.stripe.com")

print(crawl_status)

CrawlResponse(id='177e4b5c-5739-4b20-b3f7-435065a4e207', url='https://api.firecrawl.dev/v2/crawl/177e4b5c-5739-4b20-b3f7-435065a4e207')

It accepts the same parameters and scrape options as crawl but returns a CrawlResponse object with the job ID and monitoring URL.

We're primarily interested in the crawl job id and can use it to check the process status using get_crawl_status (note: the job ID is passed as a positional argument, not a keyword argument):

checkpoint = app.get_crawl_status(crawl_status.id)

print(len(checkpoint.data))

5

get_crawl_status returns the same CrawlJob object as crawl but only includes the pages scraped so far. You can run it multiple times and watch the number of scraped pages increase.
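Rather than calling get_crawl_status by hand, you can poll it in a loop until the job reaches a terminal state. The hypothetical helper below takes the status-fetching function as a parameter so it can be tried without an API key. It assumes the returned object has a `status` attribute and that `completed`, `failed`, and `cancelled` are the terminal values; confirm these for your SDK version.

```python
import time
from typing import Any, Callable

TERMINAL_STATES = {"completed", "failed", "cancelled"}


def poll_until_done(get_status: Callable[[], Any], interval: float = 5.0, timeout: float = 600.0) -> Any:
    """Call get_status() every `interval` seconds until the job finishes or `timeout` elapses."""
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status.status in TERMINAL_STATES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"crawl still '{status.status}' after {timeout} seconds")
        time.sleep(interval)
```

With a real job this would be `final = poll_until_done(lambda: app.get_crawl_status(crawl_status.id))`. The timeout keeps a stuck job from blocking your program indefinitely.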

If you want to cancel the job, you can use cancel_crawl passing the job id (it returns True if successful):

final_result = app.cancel_crawl(crawl_status.id)

print(final_result)

True

Benefits of asynchronous crawling

There are many advantages to using start_crawl over crawl:

- You can create multiple crawl jobs without waiting for each to complete.

- You can monitor progress and manage resources more easily.

- It is well suited for batch processing or parallel crawling tasks.

- Applications remain responsive while crawling happens in the background.

- Users can monitor progress instead of waiting for completion.

- It allows for implementing progress bars or status updates.

- It is easier to integrate with message queues or job schedulers.

- It can be part of larger automated workflows.

- It is better suited for microservices architectures.

In practice, asynchronous crawling is the better fit for large websites.

What Is New in Crawl v2?

Firecrawl v2 introduces natural language prompts for crawling, a powerful new feature that lets you describe what you want to crawl in plain English rather than configuring complex parameters manually.

Natural Language Crawl Prompts

Instead of specifying include_paths, exclude_paths, and other technical parameters, you can now use conversational descriptions:

# Instead of configuring paths and depth manually:

crawl_result = app.crawl(

url="https://docs.stripe.com",

include_paths=["/api/*", "/docs/*", "/reference/*"],

exclude_paths=["/blog/*"],

max_discovery_depth=5

)

# You can now do this in v2:

crawl_result = app.crawl(

url="https://docs.stripe.com",

prompt="Extract API documentation and reference guides"

)

Crawl Parameters Preview

Before running your actual crawl, you can preview how Firecrawl interprets your prompt using the new /crawl/params-preview endpoint:

import os

import requests

headers = {
    "Content-Type": "application/json",
    # Read the key from the environment instead of hard-coding it
    "Authorization": f"Bearer {os.getenv('FIRECRAWL_API_KEY')}",
}

data = {
    "url": "https://docs.stripe.com/api",
    "prompt": "Extract API documentation and reference guides",
}

response = requests.post(
    "https://api.firecrawl.dev/v2/crawl/params-preview",
    headers=headers,
    json=data,
)
print(response.json())

This returns the derived parameters that Firecrawl will use:

{

"success": true,

"data": {

"url": "https://docs.stripe.com/api",

"includePaths": ["api/.*", "docs/.*", "reference/.*"],

"maxDepth": 5,

"crawlEntireDomain": false,

"allowExternalLinks": false,

"allowSubdomains": false,

"sitemap": "include",

"ignoreQueryParameters": true,

"deduplicateSimilarURLs": true

}

}

How Different Prompts Affect Crawling

Different prompts generate different parameter sets. Here are some examples:

Prompt: "Get payment processing guides and examples"

{

"includePaths": ["payment-processing/guides/.*", "payment-processing/examples/.*"]

}

Prompt: "Crawl authentication and webhook documentation"

{

"includePaths": ["authentication/.*", "webhook/.*"]

}

This feature makes Firecrawl more accessible to users who want to focus on their data needs rather than learning crawling configuration details. The params-preview endpoint is especially useful for understanding and refining your prompts before committing to a full crawl.

How to Save and Store Web Crawling Results

When crawling large websites, it's important to save results persistently. Firecrawl provides crawled data in a structured format that can be easily saved to various storage systems. Let's look at some common approaches.

Local file storage

The simplest approach is saving to local files. Here's how to save crawled content in different formats:

import json
from pathlib import Path

def save_crawl_results(crawl_result, output_dir="firecrawl_output"):
    # Create output directory if it doesn't exist
    Path(output_dir).mkdir(parents=True, exist_ok=True)

    # Save full results as JSON (CrawlJob is a Pydantic model, so convert it to plain data first)
    with open(f"{output_dir}/full_results.json", "w", encoding="utf-8") as f:
        json.dump(crawl_result.model_dump(mode="json"), f, indent=2)

    # Save just the markdown content in separate files
    for idx, page in enumerate(crawl_result.data):
        # Build a filename from the last URL segment, falling back to the page index
        url = page.metadata.url or ""
        filename = url.split("/")[-1].replace(".html", "") or f"page_{idx}"

        # Save markdown content
        if page.markdown:
            with open(f"{output_dir}/{filename}.md", "w", encoding="utf-8") as f:
                f.write(page.markdown)

Here's what the function does:

- Creates an output directory if it doesn't exist

- Saves the complete crawl results as a JSON file with proper indentation

- For each crawled page:

- Generates a filename based on the page URL

- Saves the markdown content to a separate .md file

app = Firecrawl(api_key=os.getenv('FIRECRAWL_API_KEY'))

crawl_result = app.crawl(url="https://docs.stripe.com/api", limit=10)

save_crawl_results(crawl_result)

This is a basic function that requires modifications for other scraping formats.
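One limitation worth knowing: on sites like Books-to-Scrape, many URLs end in `index.html`, so naming files after the last URL segment makes pages overwrite each other. The hypothetical `url_to_filename` helper below builds the name from the whole path instead, which makes collisions much less likely (distinct paths such as `a-b` and `a_b` can still map to the same name).

```python
import re
from urllib.parse import urlsplit


def url_to_filename(url: str, fallback: str = "page") -> str:
    """Turn a URL's full path into a filesystem-safe file name."""
    path = urlsplit(url).path
    path = re.sub(r"(index)?\.html?$", "", path)  # drop 'index.html' / '.html' endings
    slug = re.sub(r"[^A-Za-z0-9]+", "_", path).strip("_")  # runs of other chars -> '_'
    return slug or fallback


print(url_to_filename("https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html"))
# catalogue_a_light_in_the_attic_1000
```

To use it, replace the `filename = ...` line in save_crawl_results with `filename = url_to_filename(page.metadata.url or "", f"page_{idx}")`.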

Database storage

For more complex applications, you might want to store results in a database. Here's an example using SQLite:

import sqlite3
import json

def save_to_database(crawl_result, db_path="crawl_results.db"):
    conn = sqlite3.connect(db_path)
    cursor = conn.cursor()

    # Create table if it doesn't exist
    cursor.execute(
        """
        CREATE TABLE IF NOT EXISTS pages (
            url TEXT PRIMARY KEY,
            title TEXT,
            content TEXT,
            metadata TEXT,
            crawl_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP
        )
        """
    )

    # Insert pages (INSERT OR REPLACE overwrites rows that share a URL)
    for page in crawl_result.data:
        cursor.execute(
            "INSERT OR REPLACE INTO pages (url, title, content, metadata) VALUES (?, ?, ?, ?)",
            (
                page.metadata.url,
                page.metadata.title,
                page.markdown or "",
                json.dumps(page.metadata.model_dump()),
            ),
        )

    conn.commit()
    print(f"Saved {len(crawl_result.data)} pages to {db_path}")
    conn.close()

The function creates a SQLite database with a pages table that stores crawled data. For each page, it saves the URL (as primary key), title, content (in markdown format), and metadata (as JSON). The crawl date is automatically added as a timestamp. If a page with the same URL already exists, it's replaced with new data. This provides a persistent storage solution that can be easily queried later.
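Once pages are in SQLite, simple keyword lookups need only a parameterized `LIKE` query. This self-contained sketch uses an in-memory database with the same `pages` schema and two made-up rows to show the pattern; `search_pages` is a hypothetical helper. For larger collections, SQLite's FTS5 full-text extension is an option.

```python
import sqlite3


def search_pages(conn: sqlite3.Connection, term: str) -> list[tuple[str, str]]:
    """Return (url, title) for pages whose markdown content contains `term`."""
    cursor = conn.execute(
        "SELECT url, title FROM pages WHERE content LIKE ? ORDER BY url",
        (f"%{term}%",),  # bound parameter, so user input is never spliced into the SQL
    )
    return cursor.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE pages (
        url TEXT PRIMARY KEY, title TEXT, content TEXT, metadata TEXT,
        crawl_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP)"""
)
conn.executemany(
    "INSERT INTO pages (url, title, content, metadata) VALUES (?, ?, ?, ?)",
    [
        ("https://example.com/errors", "Errors", "Uses conventional HTTP status codes.", "{}"),
        ("https://example.com/pagination", "Pagination", "Use cursor-based pagination.", "{}"),
    ],
)
print(search_pages(conn, "status code"))  # [('https://example.com/errors', 'Errors')]
```

SQLite's `LIKE` is case-insensitive for ASCII letters by default, so a search for `PAGINATION` also finds the second row.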

save_to_database(crawl_result)

Saved 10 pages to crawl_results.db

Let's query the database to double-check:

# Query the database

conn = sqlite3.connect("crawl_results.db")

cursor = conn.cursor()

cursor.execute("SELECT url, title, metadata FROM pages")

print(cursor.fetchone())

conn.close()

(

'https://docs.stripe.com/api/errors',

'Errors | Stripe API Reference',

'{"title": "Errors | Stripe API Reference", "description": "Complete reference documentation for the Stripe API.", "url": "https://docs.stripe.com/api/errors", "language": "en-US", "status_code": 200}'

)

Cloud storage

For production applications, you might want to store results in cloud storage. Here's an example using AWS S3:

import json
from datetime import datetime

import boto3

def save_to_s3(crawl_result, bucket_name, prefix="crawls"):
    s3 = boto3.client("s3")
    timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")

    # Save full results (convert the CrawlJob model to JSON-compatible data first)
    full_results_key = f"{prefix}/{timestamp}/full_results.json"
    s3.put_object(
        Bucket=bucket_name,
        Key=full_results_key,
        Body=json.dumps(crawl_result.model_dump(mode="json"), indent=2),
    )

    # Save individual pages
    for idx, page in enumerate(crawl_result.data):
        if page.markdown:
            page_key = f"{prefix}/{timestamp}/pages/{idx}.md"
            s3.put_object(Bucket=bucket_name, Key=page_key, Body=page.markdown)

    print(f"Saved {len(crawl_result.data)} pages to {bucket_name}/{full_results_key}")

Here is what the function does:

- Takes a crawl result object, S3 bucket name, and optional prefix as input

- Creates a timestamped folder structure in S3 to organize the data

- Saves the full crawl results as a single JSON file

- For each crawled page that has markdown content, saves it as an individual .md file

- Uses boto3 to handle the AWS S3 interactions

- Keeps the complete crawl data in the JSON file alongside the per-page files

For this function to work, you must have boto3 installed and your AWS credentials saved in the ~/.aws/credentials file with the following format:

[default]

aws_access_key_id = your_access_key

aws_secret_access_key = your_secret_key

The default region belongs in a separate file, ~/.aws/config:

[default]

region = your_region

Then, you can execute the function, provided that you already have an S3 bucket to store the data:

save_to_s3(crawl_result, "sample-bucket-1801", "stripe-api-docs")

Saved 10 pages to sample-bucket-1801/stripe-api-docs/20241118_142945/full_results.json

Incremental saving with async crawls

When using async crawling, you might want to save results incrementally as they come in:

import time
from pathlib import Path

def save_incremental_results(app, crawl_id, output_dir="firecrawl_output"):
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    processed_urls = set()

    while True:
        # Check current status
        status = app.get_crawl_status(crawl_id)

        # Save new pages
        for page in status.data:
            url = page.metadata.url
            if url not in processed_urls:
                filename = f"{output_dir}/{len(processed_urls)}.md"
                with open(filename, "w", encoding="utf-8") as f:
                    f.write(page.markdown or "")
                processed_urls.add(url)

        # Stop once the crawl has finished, failed, or been cancelled
        if status.status in ("completed", "failed", "cancelled"):
            print(f"Saved {len(processed_urls)} pages")
            break

        time.sleep(5)  # Wait before checking again

Here is what the function does:

- Creates an output directory if it doesn't exist

- Maintains a set of processed URLs to avoid duplicates

- Continuously checks the crawl status until completion

- For each new page found, saves its markdown content to a numbered file

- Sleeps for 5 seconds between status checks to avoid excessive API calls

Let's use it while the app crawls the Books-to-Scrape website:

# Start the crawl

crawl_status = app.start_crawl(url="https://books.toscrape.com/")

# Save results incrementally

save_incremental_results(app, crawl_status.id)

Saved 20 pages

...

Building AI-Powered Web Crawlers with Firecrawl and LangChain Integration

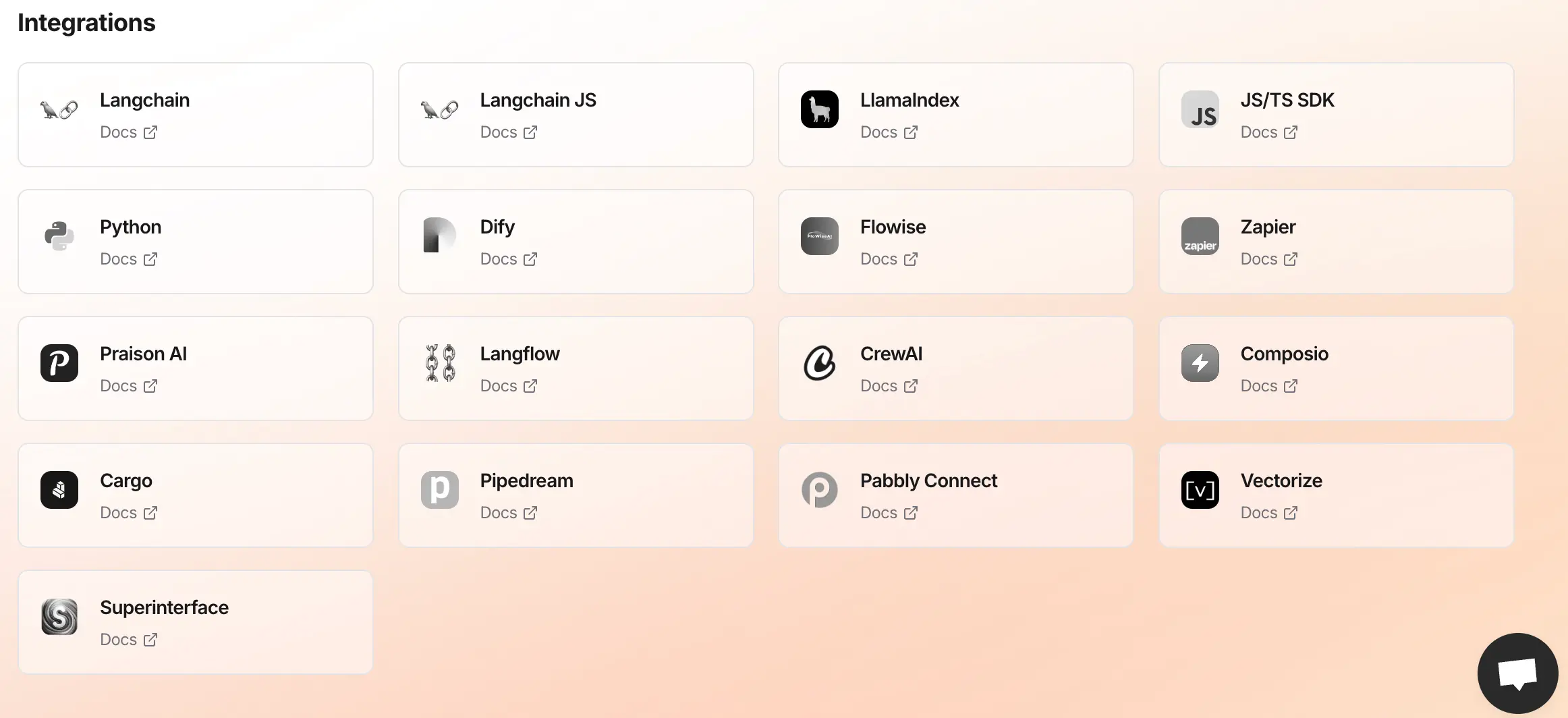

Firecrawl has integrations with popular open-source libraries like LangChain, LlamaIndex, CrewAI, and other platforms.

Note: We're actively updating the LangChain FireCrawlLoader for Firecrawl v2 compatibility. When it's ready, this section will be updated to show how to build AI-powered RAG pipelines using Firecrawl's v2 crawling capabilities with LangChain's document processing framework.

In the meantime, you can use the direct Firecrawl v2 approach shown throughout this tutorial and manually convert the results to LangChain Document format as a temporary workaround.
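A minimal sketch of that workaround. To keep it runnable without LangChain installed, `Document` below is a hypothetical stand-in dataclass mirroring the two fields LangChain's `Document` class exposes (`page_content` and `metadata`); in a real project you would import it from `langchain_core.documents` instead. The page objects are assumed to have `markdown`, `metadata.url`, and `metadata.title` attributes, as in the crawl results above.

```python
from dataclasses import dataclass, field
from typing import Any, Iterable


@dataclass
class Document:
    """Stand-in for langchain_core.documents.Document (page_content + metadata)."""
    page_content: str
    metadata: dict[str, Any] = field(default_factory=dict)


def to_documents(pages: Iterable[Any]) -> list[Document]:
    """Convert crawled pages into Document objects, skipping pages without markdown."""
    docs = []
    for page in pages:
        if not page.markdown:
            continue
        docs.append(
            Document(
                page_content=page.markdown,
                metadata={"source": page.metadata.url, "title": page.metadata.title},
            )
        )
    return docs
```

With real results, `docs = to_documents(crawl_result.data)` gives you a list you can pass to LangChain text splitters and vector stores once you swap in the real `Document` class.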

Ready to Crawl at Scale

In this guide, we explored Firecrawl's crawl method and its capabilities for web scraping at scale. From basic usage to advanced configurations, we covered URL control, performance tuning, and asynchronous operations. We also examined practical implementations, including data storage solutions and integrations.

If your use case is extracting structured data from repeating lists (like product grids, directories, or search results), you can also explore list crawling with Python here: List Crawling: Extract Structured Data From Websites at Scale.

The method's ability to handle JavaScript content, pagination, and various output formats makes it a versatile tool for modern web scraping needs. Whether you're building documentation chatbots or gathering training data, Firecrawl provides a solid foundation for AI crawling projects. By using the configuration options and best practices discussed, you can build efficient and scalable web scraping solutions tailored to your specific requirements.
