Introduction

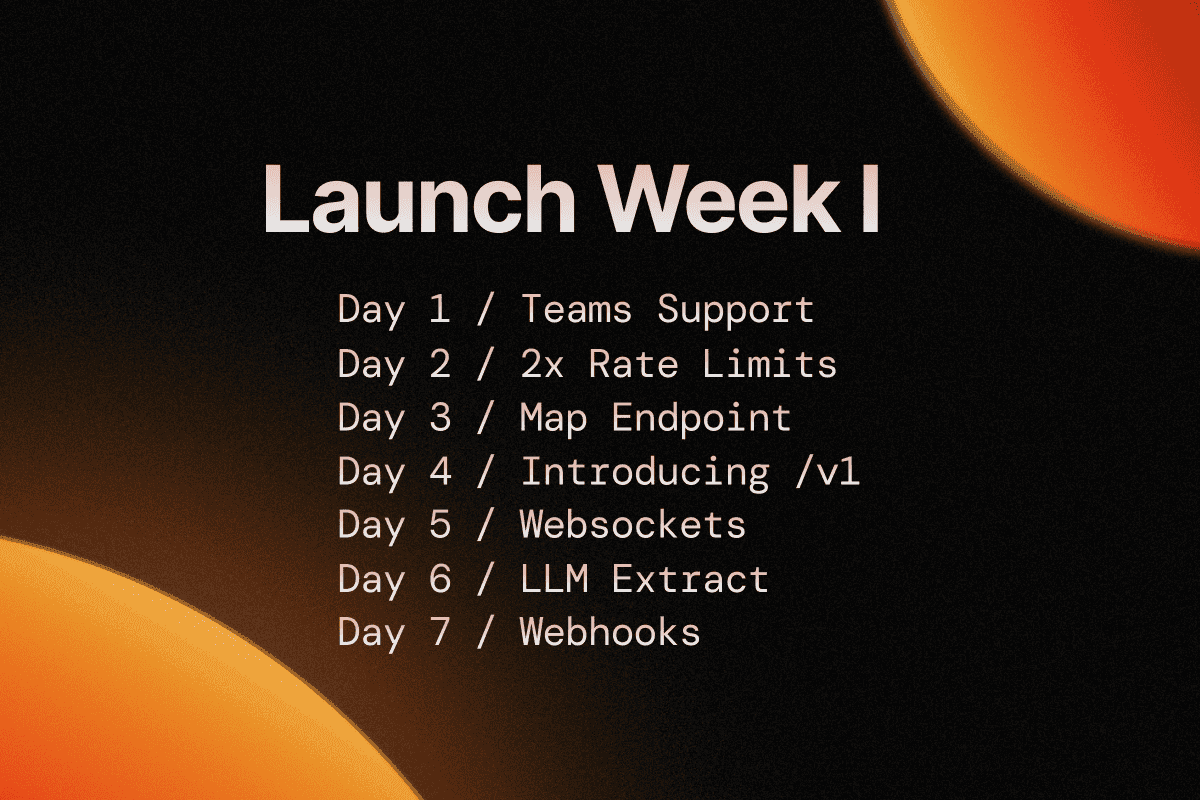

Last week marked an exciting milestone for Firecrawl as we kicked off our inaugural Launch Week, unveiling a series of new features and updates designed to enhance your web scraping experience. Let's take a look back at the improvements we introduced throughout the week.

We started Launch Week by introducing our highly anticipated Teams feature. Teams enables seamless collaboration on web scraping projects, allowing you to work alongside your colleagues and tackle complex data gathering tasks together. With updated pricing plans to accommodate teams of all sizes, Firecrawl is now an excellent platform for collaborative web scraping.

On Day 2, we improved your data collection capabilities by doubling the rate limits for our /scrape endpoint across all plans. This means you can now gather more data in the same amount of time, enabling you to take on larger projects and scrape more frequently.

Day 3 saw the unveiling of our new Map endpoint, which quickly turns a single URL into a map of an entire website. As a fast and easy way to gather all the URLs on a website, the Map endpoint opens up new possibilities for your web scraping projects.
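To make the idea concrete, here is a minimal sketch of how a Map call might be prepared using only the Python standard library. It builds the request but does not send it. The endpoint URL `https://api.firecrawl.dev/v1/map`, the `url` body field, the bearer-token header, and the helper name `build_map_request` are all assumptions for illustration; check the API reference for the exact contract.

```python
import json
import urllib.request

# Assumed endpoint; confirm against the official API reference.
MAP_ENDPOINT = "https://api.firecrawl.dev/v1/map"


def build_map_request(site_url: str, api_key: str) -> urllib.request.Request:
    """Build (without sending) a POST request asking for a site's URLs."""
    # Assumed body shape: a JSON object with a single "url" field.
    body = json.dumps({"url": site_url}).encode("utf-8")
    return urllib.request.Request(
        MAP_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the call; keeping construction separate makes the request easy to inspect before anything goes over the network.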

Day 4 marked a significant release: Firecrawl /v1. This more reliable and developer-friendly API makes gathering web data easier. With new scrape formats, improved crawl status, enhanced markdown parsing, v1 support for all SDKs (including new Go and Rust SDKs), and an improved developer experience, v1 enhances your web scraping workflow.

On Day 5, we introduced a new feature: Real-Time Crawling with WebSockets. Our WebSocket-based method, Crawl URL and Watch, enables real-time data extraction and monitoring, allowing you to process data immediately, react to errors quickly, and know precisely when your crawl is complete.
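The listener pattern behind this can be sketched without opening a real socket. The class below is a hypothetical stand-in, not the SDK's implementation: the message types `document`, `error`, and `done`, the dictionary message shape, and the `CrawlWatcher` name are assumptions chosen to mirror the three things described above (process data, react to errors, know when the crawl is finished).

```python
from typing import Any, Callable, Dict, Iterable, List

Handler = Callable[[Dict[str, Any]], None]


class CrawlWatcher:
    """Routes crawl messages to listeners registered per event type."""

    def __init__(self) -> None:
        self._listeners: Dict[str, List[Handler]] = {}
        self.finished = False

    def add_event_listener(self, event_type: str, handler: Handler) -> None:
        """Register a handler for one (assumed) message type."""
        self._listeners.setdefault(event_type, []).append(handler)

    def dispatch(self, message: Dict[str, Any]) -> None:
        """Deliver one message to every listener for its type."""
        event_type = message.get("type", "")
        for handler in self._listeners.get(event_type, []):
            handler(message)
        if event_type == "done":
            self.finished = True

    def run(self, messages: Iterable[Dict[str, Any]]) -> None:
        """Consume messages until the stream ends or a 'done' arrives."""
        for message in messages:
            self.dispatch(message)
            if self.finished:
                break
```

In a real client the `messages` iterable would be fed by the WebSocket connection; here any list of dictionaries works, which keeps the handlers easy to test in isolation.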

Day 6 brought v1 support for LLM Extract, enabling you to extract structured data from web pages using the extract format in /scrape. With the ability to pass a schema or just provide a prompt, LLM extraction is now more flexible and powerful.
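As a rough sketch of the "schema or prompt" choice, the helper below assembles a request body for /scrape that asks for the extract format. The field names `formats`, `extract`, `schema`, and `prompt`, and the helper name `build_extract_payload`, are assumptions based on the description above rather than a confirmed API contract.

```python
from typing import Any, Dict, Optional


def build_extract_payload(
    url: str,
    schema: Optional[Dict[str, Any]] = None,
    prompt: Optional[str] = None,
) -> Dict[str, Any]:
    """Build a /scrape body requesting the extract format."""
    if schema is None and prompt is None:
        raise ValueError("provide a schema, a prompt, or both")
    extract: Dict[str, Any] = {}
    if schema is not None:
        extract["schema"] = schema  # assumed: a JSON Schema describing the output
    if prompt is not None:
        extract["prompt"] = prompt  # assumed: free-text extraction instructions
    return {"url": url, "formats": ["extract"], "extract": extract}
```

For example, `build_extract_payload("https://example.com", prompt="List the product names")` produces a prompt-only body, while passing `schema` instead pins the output to a fixed structure.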

We wrapped up Launch Week with the introduction of /crawl webhook support. You can now send notifications to your apps during a crawl, with four types of events: crawl.started, crawl.page, crawl.completed, and crawl.failed. This feature allows for more seamless integration of Firecrawl into your workflows.
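A receiving app typically branches on the event type. The sketch below handles the four events named above; the payload fields `type` and `data` and the function name `handle_crawl_event` are assumptions for illustration, so adapt them to the actual payload your endpoint receives.

```python
from typing import Any, Dict, List

KNOWN_EVENTS = {"crawl.started", "crawl.page", "crawl.completed", "crawl.failed"}


def handle_crawl_event(event: Dict[str, Any], pages: List[Any]) -> str:
    """Handle one webhook event and return a short status string."""
    event_type = event.get("type")  # assumed field name
    if event_type not in KNOWN_EVENTS:
        return "ignored"
    if event_type == "crawl.started":
        pages.clear()  # start a fresh collection for this crawl
        return "running"
    if event_type == "crawl.page":
        pages.append(event.get("data"))  # assumed field carrying the page
        return "running"
    if event_type == "crawl.completed":
        return "completed"
    return "failed"  # only crawl.failed remains
```

Keeping the handler a pure function of the event and a caller-owned list means it can sit behind any web framework's POST route without changes.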

Wrapping Up

Launch Week showcased our commitment to continually improving Firecrawl to meet the needs of our users. From collaborative features like Teams to performance improvements like increased rate limits, and from new capabilities like the Map endpoint and LLM Extract to real-time updates with WebSockets and webhooks, we've expanded the possibilities for your web scraping projects.

We'd like to thank our community for your support, feedback, and enthusiasm throughout Launch Week and beyond. Your input drives us to innovate and push the boundaries of what's possible with web scraping.

Stay tuned for more updates as we continue to shape the future of data gathering together. Happy scraping!
